Read Anywhere Pointed: Layout-aware GUI Screen Reading with Tree-of-Lens Grounding

Yue Fan, Lei Ding, Ching-Chen Kuo, Shan Jiang, Yang Zhao, Xinze Guan, Jie Yang, Yi Zhang, Xin Eric Wang

2024-06-28

Summary

This paper introduces the Tree-of-Lens (ToL) agent, a system that helps users read and understand graphical user interfaces (GUIs) by describing the content and layout around the point the user indicates on the screen. It aims to improve how screen reading tools interpret and explain the layout of information on digital devices.

What's the problem?

Current screen reading tools are often rigid and do not effectively help users understand the layout of information on screens. When users click on a point in a GUI, these tools struggle to provide meaningful context about what is displayed, making it hard for visually impaired users or those needing assistance to navigate digital interfaces. There is a need for smarter models that can interpret both content and layout information.

What's the solution?

To address this issue, the authors developed the ToL agent, which uses a new method called ToL grounding. This agent analyzes the coordinates of where a user clicks and the screenshot of the GUI to create a Hierarchical Layout Tree. This tree helps the agent understand not just what is in that area of the screen but also how different elements are arranged and related to each other. The ToL agent generates natural language descriptions that explain both the content and layout, making it easier for users to comprehend what they see.
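
The idea of locating a point inside a Hierarchical Layout Tree can be illustrated with a small sketch. This is not the authors' implementation: it assumes the nested regions are already known, whereas the ToL agent builds the tree from the screenshot itself. The names `Region`, `path_to_point`, and `describe_path`, as well as the example boxes, are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class Region:
    """A rectangular GUI region; box is (left, top, right, bottom) in pixels."""
    name: str
    box: Tuple[int, int, int, int]
    children: List["Region"] = field(default_factory=list)

    def contains(self, x: int, y: int) -> bool:
        left, top, right, bottom = self.box
        return left <= x < right and top <= y < bottom


def path_to_point(root: Region, x: int, y: int) -> List[Region]:
    """Return the nested regions from the root down to the smallest one containing (x, y)."""
    if not root.contains(x, y):
        return []
    path = [root]
    node = root
    while True:
        # Descend into the first child that contains the point, if any.
        hit = next((c for c in node.children if c.contains(x, y)), None)
        if hit is None:
            return path
        path.append(hit)
        node = hit


def describe_path(path: List[Region]) -> str:
    """Join region names from outermost to innermost."""
    return " > ".join(region.name for region in path)


# Hypothetical layout for a 1080x1920 mobile screenshot (assumed, not from the paper).
screen = Region("screen", (0, 0, 1080, 1920), [
    Region("header", (0, 0, 1080, 200)),
    Region("product list", (0, 200, 1080, 1600), [
        Region("item card", (0, 200, 1080, 600), [
            Region("price label", (700, 450, 1000, 550)),
        ]),
    ]),
])

print(describe_path(path_to_point(screen, 800, 500)))
# prints: screen > product list > item card > price label
```

The path from the root to the innermost region carries both what was pointed at (the last entry) and where it sits in the layout (the enclosing entries), which is the kind of information a layout-aware description would draw on.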

Why does it matter?

This research matters because it improves accessibility for users interacting with GUIs. By producing descriptions that cover layout as well as content, the ToL agent can help people understand and navigate digital environments, which is especially relevant for individuals with visual impairments. The authors also test the agent on mobile GUI navigation tasks, where it helps identify incorrect actions along an agent's execution trajectory.

Abstract

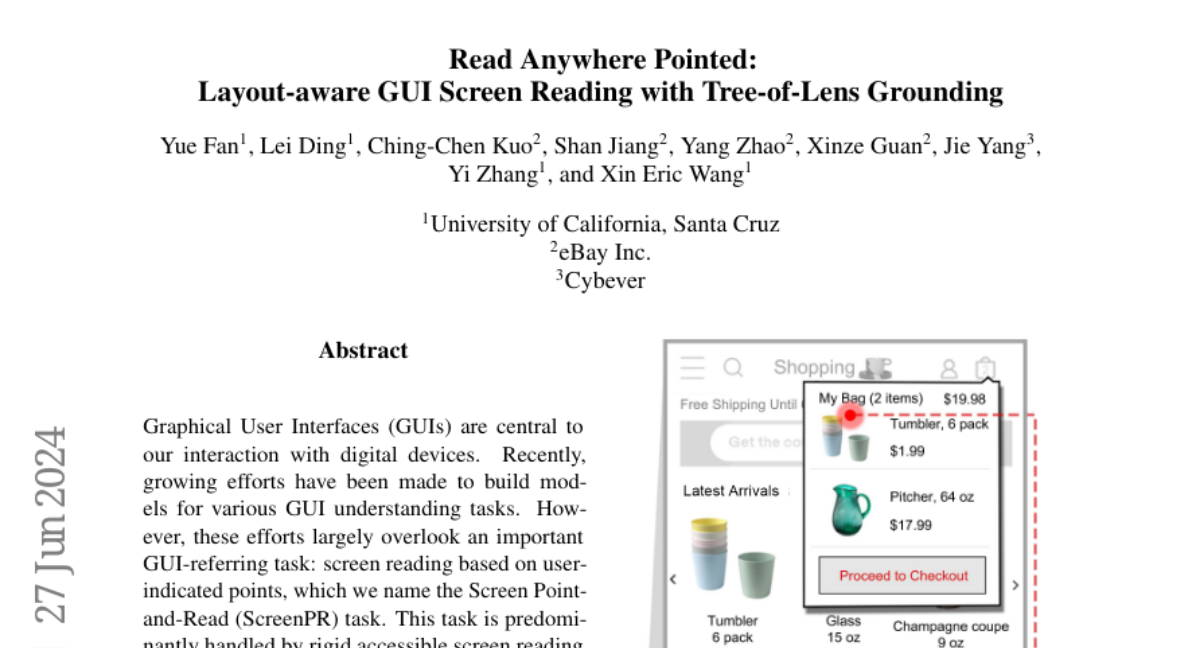

Graphical User Interfaces (GUIs) are central to our interaction with digital devices. Recently, growing efforts have been made to build models for various GUI understanding tasks. However, these efforts largely overlook an important GUI-referring task: screen reading based on user-indicated points, which we name the Screen Point-and-Read (SPR) task. This task is predominantly handled by rigid accessible screen reading tools, in great need of new models driven by advancements in Multimodal Large Language Models (MLLMs). In this paper, we propose a Tree-of-Lens (ToL) agent, utilizing a novel ToL grounding mechanism, to address the SPR task. Based on the input point coordinate and the corresponding GUI screenshot, our ToL agent constructs a Hierarchical Layout Tree. Based on the tree, our ToL agent not only comprehends the content of the indicated area but also articulates the layout and spatial relationships between elements. Such layout information is crucial for accurately interpreting information on the screen, distinguishing our ToL agent from other screen reading tools. We also thoroughly evaluate the ToL agent against other baselines on a newly proposed SPR benchmark, which includes GUIs from mobile, web, and operating systems. Last but not least, we test the ToL agent on mobile GUI navigation tasks, demonstrating its utility in identifying incorrect actions along the path of agent execution trajectories. Code and data: screen-point-and-read.github.io