RepLiQA: A Question-Answering Dataset for Benchmarking LLMs on Unseen Reference Content

Joao Monteiro, Pierre-Andre Noel, Etienne Marcotte, Sai Rajeswar, Valentina Zantedeschi, David Vazquez, Nicolas Chapados, Christopher Pal, Perouz Taslakian

2024-06-19

Summary

This paper introduces RepLiQA, a new dataset designed to test how well large language models (LLMs) can answer questions using information they haven't seen before. It aims to provide a more accurate evaluation of these models' abilities.

What's the problem?

Many LLMs are trained on large amounts of data from the internet, which often includes content that overlaps with the benchmarks used to evaluate their performance. This can lead to misleading results because if a model has already seen the information in the test, it might just be recalling it instead of truly understanding or reasoning about it. Therefore, there is a need for a dataset that tests models on completely new information.

What's the solution?

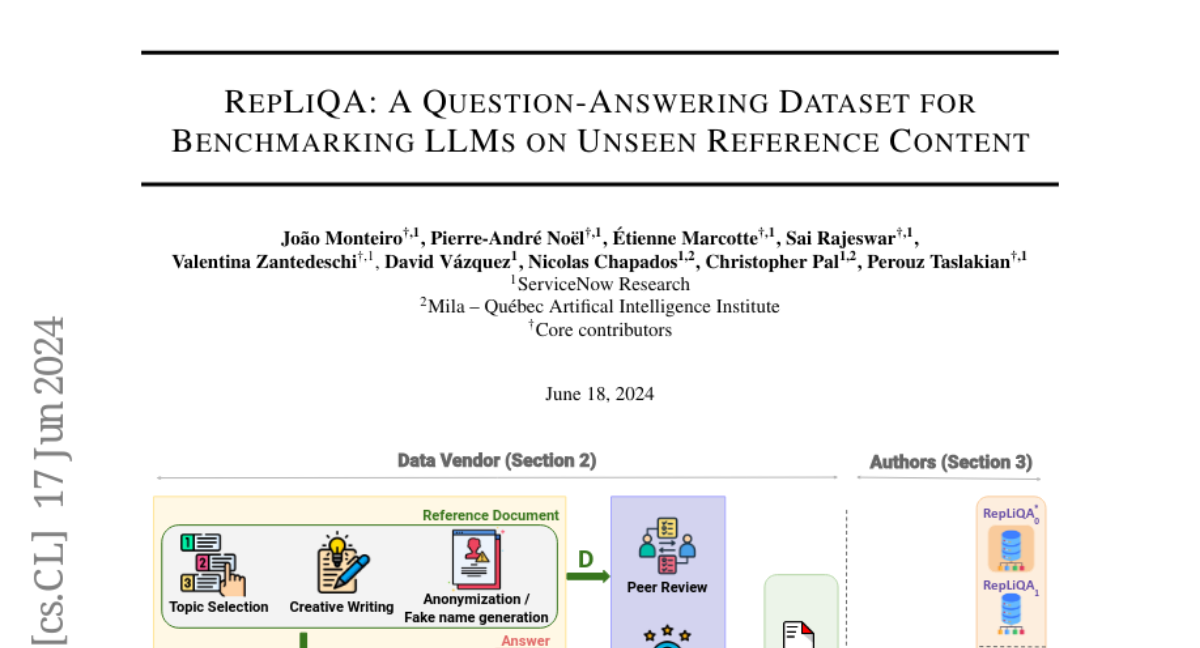

To solve this problem, the authors created RepLiQA, which includes question-answer pairs based on reference documents that are not available online. Each entry consists of a document written by humans about imaginary scenarios, a question related to that document, and the correct answer derived from it. This setup ensures that models can only provide accurate answers if they can find relevant information within the given document. The dataset includes five different test sets, with four being completely new and not previously exposed to LLMs.

Why it matters?

This research is important because it offers a more rigorous way to evaluate LLMs by challenging them with unseen content. By using RepLiQA, researchers can better understand how well these models can comprehend and reason about new information rather than relying on memorized data. This could lead to improvements in developing more capable and reliable AI systems for various applications, such as question answering and information retrieval.

Abstract

Large Language Models (LLMs) are trained on vast amounts of data, most of which is automatically scraped from the internet. This data includes encyclopedic documents that harbor a vast amount of general knowledge (e.g., Wikipedia) but also potentially overlap with benchmark datasets used for evaluating LLMs. Consequently, evaluating models on test splits that might have leaked into the training set is prone to misleading conclusions. To foster sound evaluation of language models, we introduce a new test dataset named RepLiQA, suited for question-answering and topic retrieval tasks. RepLiQA is a collection of five splits of test sets, four of which have not been released to the internet or exposed to LLM APIs prior to this publication. Each sample in RepLiQA comprises (1) a reference document crafted by a human annotator and depicting an imaginary scenario (e.g., a news article) absent from the internet; (2) a question about the document's topic; (3) a ground-truth answer derived directly from the information in the document; and (4) the paragraph extracted from the reference document containing the answer. As such, accurate answers can only be generated if a model can find relevant content within the provided document. We run a large-scale benchmark comprising several state-of-the-art LLMs to uncover differences in performance across models of various types and sizes in a context-conditional language modeling setting. Released splits of RepLiQA can be found here: https://huggingface.co/datasets/ServiceNow/repliqa.