REPOEXEC: Evaluate Code Generation with a Repository-Level Executable Benchmark

Nam Le Hai, Dung Manh Nguyen, Nghi D. Q. Bui

2024-06-21

Summary

This paper introduces RepoExec, a new benchmark designed to evaluate how well code generation models can create executable and correct code at the repository level.

What's the problem?

Many existing models that generate code, known as CodeLLMs, have not been thoroughly tested for their ability to produce code that works correctly in real-world situations. Current benchmarks often overlook important aspects like whether the generated code can run successfully or if it meets the specific needs of developers. This lack of evaluation can lead to unreliable code generation, making it difficult for developers to trust these models.

What's the solution?

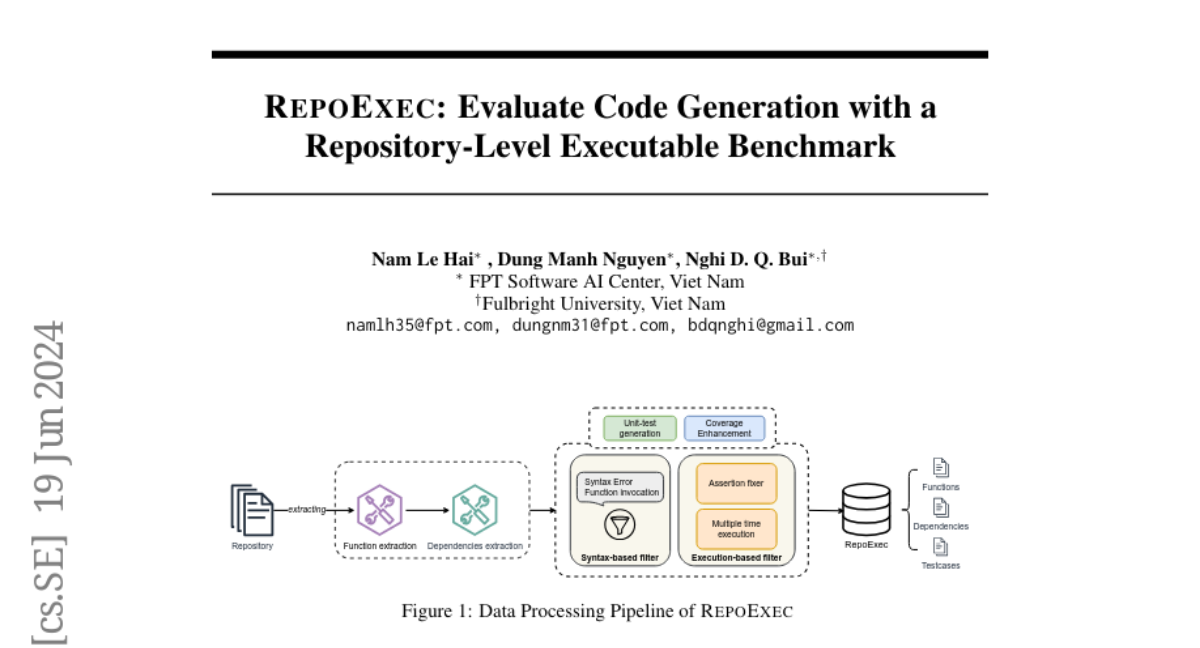

RepoExec addresses these issues by focusing on three key areas: ensuring that the generated code is executable, creating automated tests that check the functionality of the code, and managing complex interactions between different files in a project. The researchers conducted experiments where they provided specific code dependencies to the model and assessed how well it integrated them into its generated code. They also developed a new instruction-tuned dataset that emphasizes understanding code dependencies, which helps improve the model's performance in generating correct and functional code.

Why it matters?

This research is important because it establishes a more effective way to evaluate code generation models, ensuring they produce reliable and usable code for developers. By focusing on real-world applicability and functionality, RepoExec can help improve the quality of AI-generated code, making it more trustworthy for software development tasks. This advancement could lead to better tools for programmers and enhance productivity in coding projects.

Abstract

The ability of CodeLLMs to generate executable and functionally correct code at the repository-level scale remains largely unexplored. We introduce RepoExec, a novel benchmark for evaluating code generation at the repository-level scale. RepoExec focuses on three main aspects: executability, functional correctness through automated test case generation with high coverage rate, and carefully crafted cross-file contexts to accurately generate code. Our work explores a controlled scenario where developers specify necessary code dependencies, challenging the model to integrate these accurately. Experiments show that while pretrained LLMs outperform instruction-tuned models in correctness, the latter excel in utilizing provided dependencies and demonstrating debugging capabilities. We also introduce a new instruction-tuned dataset that focuses on code dependencies and demonstrate that CodeLLMs fine-tuned on our dataset have a better capability to leverage these dependencies effectively. RepoExec aims to provide a comprehensive evaluation of code functionality and alignment with developer intent, paving the way for more reliable and applicable CodeLLMs in real-world scenarios. The dataset and source code can be found at~https://github.com/FSoft-AI4Code/RepoExec.