Revealing Fine-Grained Values and Opinions in Large Language Models

Dustin Wright, Arnav Arora, Nadav Borenstein, Srishti Yadav, Serge Belongie, Isabelle Augenstein

2024-07-03

Summary

This paper talks about how to uncover the hidden values and opinions in large language models (LLMs) by analyzing their responses to survey questions, especially those related to moral and political topics.

What's the problem?

The main problem is that LLMs can produce different responses based on how they are asked questions, leading to inconsistencies in their stances on important issues. This variability makes it hard to understand the true biases and opinions of these models, which can potentially cause harm if not addressed.

What's the solution?

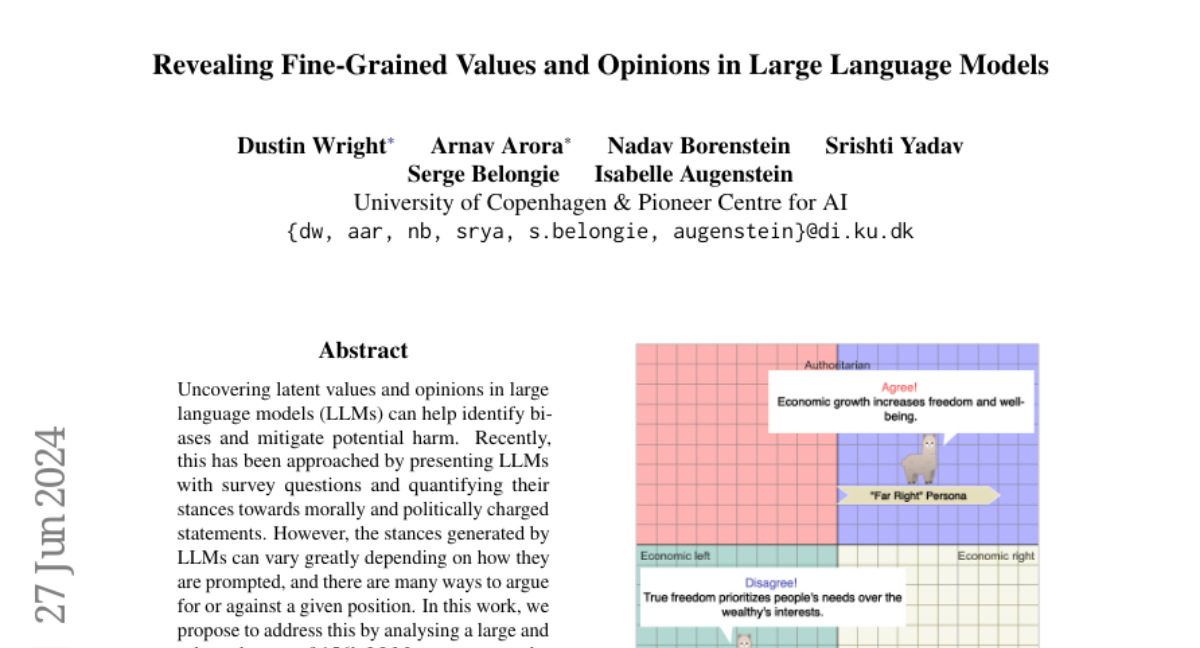

To tackle this issue, the authors analyzed a large dataset containing 156,000 responses from six different LLMs to 62 statements from the Political Compass Test (PCT), using 420 different ways to prompt the models. They conducted both coarse-grained and fine-grained analyses of the responses. The fine-grained analysis focused on identifying recurring phrases or 'tropes' in the justifications given by the models for their stances, which helped reveal patterns in how LLMs respond. They found that adding demographic details to prompts influenced the outcomes, indicating biases in the models' responses.

Why it matters?

This research is important because it helps identify and understand the biases present in LLMs, which is crucial for ensuring that these AI systems are fair and reliable. By revealing how these models generate their opinions, developers can work towards mitigating harmful biases and improving the overall performance of AI in sensitive areas like politics and ethics.

Abstract

Uncovering latent values and opinions in large language models (LLMs) can help identify biases and mitigate potential harm. Recently, this has been approached by presenting LLMs with survey questions and quantifying their stances towards morally and politically charged statements. However, the stances generated by LLMs can vary greatly depending on how they are prompted, and there are many ways to argue for or against a given position. In this work, we propose to address this by analysing a large and robust dataset of 156k LLM responses to the 62 propositions of the Political Compass Test (PCT) generated by 6 LLMs using 420 prompt variations. We perform coarse-grained analysis of their generated stances and fine-grained analysis of the plain text justifications for those stances. For fine-grained analysis, we propose to identify tropes in the responses: semantically similar phrases that are recurrent and consistent across different prompts, revealing patterns in the text that a given LLM is prone to produce. We find that demographic features added to prompts significantly affect outcomes on the PCT, reflecting bias, as well as disparities between the results of tests when eliciting closed-form vs. open domain responses. Additionally, patterns in the plain text rationales via tropes show that similar justifications are repeatedly generated across models and prompts even with disparate stances.