Robust Multimodal Large Language Models Against Modality Conflict

Zongmeng Zhang, Wengang Zhou, Jie Zhao, Houqiang Li

2025-07-14

Summary

This paper studies how multimodal large language models (MLLMs), which process both images and text, produce errors called hallucinations when the information in one input modality conflicts with the information in another.

What's the problem?

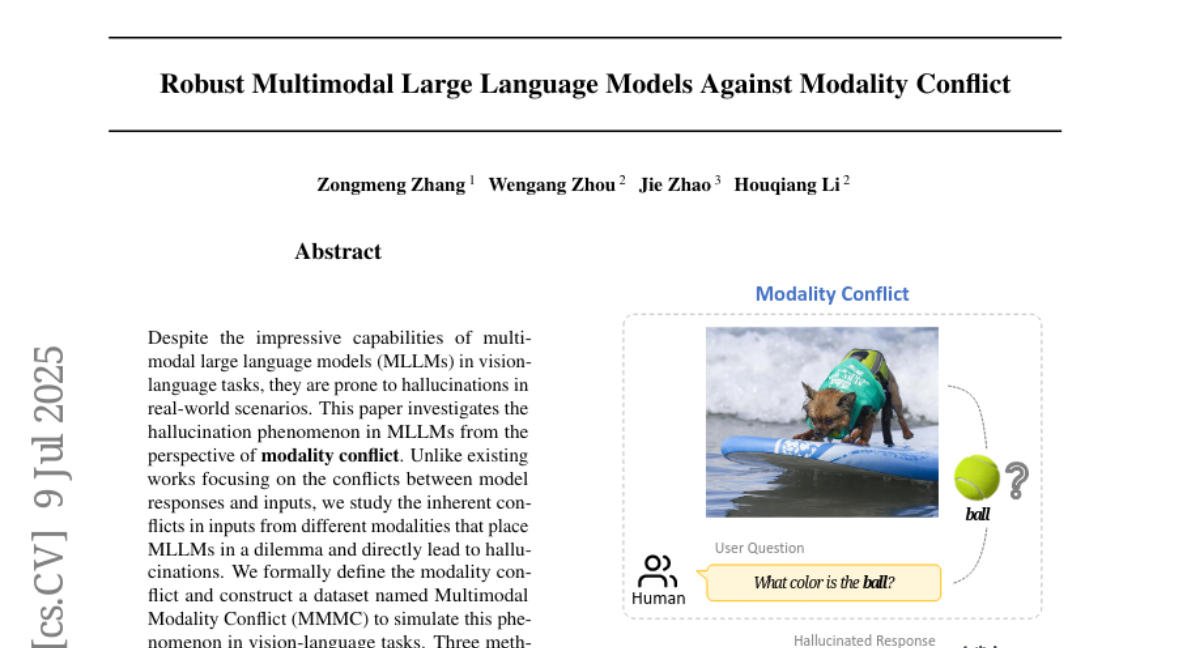

When the details in an image contradict the text input, the model cannot reconcile the two sources. Instead of recognizing the conflict, it produces wrong or nonsensical answers.

What's the solution?

The researchers formally define modality conflict and build a dedicated dataset to test it. They then compare three mitigation strategies: prompt engineering, fine-tuning the model on additional data, and reinforcement learning. Of the three, reinforcement learning reduced these hallucinations the most.
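To make the idea of a modality conflict concrete, the sketch below models one simple, object-level case: the question presupposes an object that the image does not contain (for example, asking "What color is the dog?" about a picture of a cat on a sofa). This is a hypothetical illustration only. The `ConflictSample` class, its fields, and `has_object_conflict` are assumptions made for this example; the paper's own definition of modality conflict and its dataset format may differ.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class ConflictSample:
    # Hypothetical record format, not the paper's dataset schema.
    image_objects: set[str]    # objects actually present in the image
    question: str              # text input given to the model
    premise_objects: set[str]  # objects the question assumes are present


def has_object_conflict(sample: ConflictSample) -> bool:
    """Return True if the question presupposes an object missing from the image.

    Illustrative object-level check only; conflicts about attributes or
    relationships would need richer annotations.
    """
    return not sample.premise_objects.issubset(sample.image_objects)


conflicting = ConflictSample({"cat", "sofa"}, "What color is the dog?", {"dog"})
consistent = ConflictSample({"cat", "sofa"}, "What is the cat sitting on?", {"cat"})
print(has_object_conflict(conflicting), has_object_conflict(consistent))
```

On a conflicting sample like the first one, a robust model should point out that there is no dog in the image rather than invent a color.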

Why it matters?

Reducing hallucinations makes multimodal AI systems more accurate and reliable. This is essential in applications such as education and healthcare, where users depend on the model's answers being correct.

Abstract

Modality conflict in multimodal large language models leads to hallucinations, and of the mitigation methods studied, reinforcement learning is the most effective.