SAeUron: Interpretable Concept Unlearning in Diffusion Models with Sparse Autoencoders

Bartosz Cywiński, Kamil Deja

2025-02-03

Summary

This paper talks about a new way to make AI image generators safer by teaching them to 'forget' how to create harmful or unwanted content. The researchers developed a method called SAeUron that uses special tools called sparse autoencoders to identify and remove specific concepts from these AI models.

What's the problem?

AI models that create images from text descriptions (called diffusion models) are really good at making all kinds of pictures. But sometimes they accidentally make harmful or inappropriate images, which is a big safety concern. Previous attempts to fix this problem worked okay, but it was hard to understand exactly how they changed the AI's knowledge.

What's the solution?

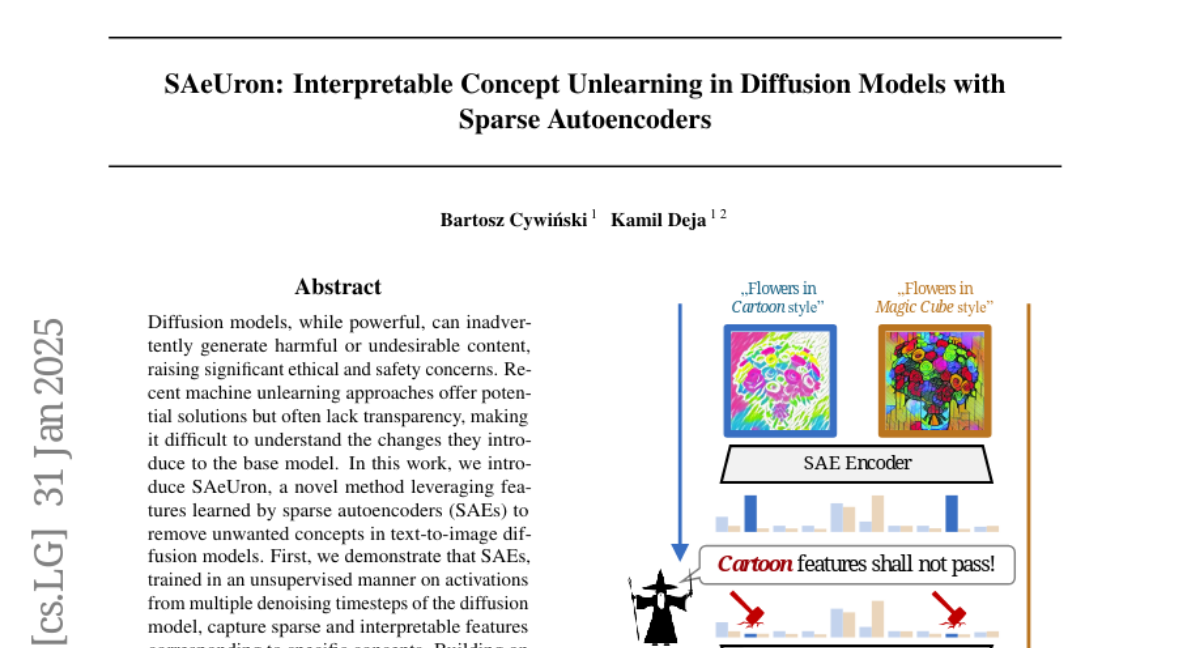

The researchers created SAeUron, which uses something called sparse autoencoders to look at how the AI thinks about different concepts. They figured out how to identify specific features in the AI's 'brain' that correspond to unwanted concepts. Then, they developed a way to carefully remove or block these features without messing up the AI's ability to make good images of other things. They tested their method on different types of unwanted content and found it worked really well, even when someone tried to trick the AI into making bad content.

Why it matters?

This matters because it makes AI image generators safer to use without losing their overall abilities. It's like teaching a really talented artist to avoid drawing certain things without forgetting how to draw everything else. This could help make AI tools more trustworthy and less likely to cause harm or offense. Plus, because we can understand how it works, it's easier for researchers to improve and for companies to feel confident using these safer AI models in real-world applications.

Abstract

Diffusion models, while powerful, can inadvertently generate harmful or undesirable content, raising significant ethical and safety concerns. Recent machine unlearning approaches offer potential solutions but often lack transparency, making it difficult to understand the changes they introduce to the base model. In this work, we introduce SAeUron, a novel method leveraging features learned by sparse autoencoders (SAEs) to remove unwanted concepts in text-to-image diffusion models. First, we demonstrate that SAEs, trained in an unsupervised manner on activations from multiple denoising timesteps of the diffusion model, capture sparse and interpretable features corresponding to specific concepts. Building on this, we propose a feature selection method that enables precise interventions on model activations to block targeted content while preserving overall performance. Evaluation with the competitive UnlearnCanvas benchmark on object and style unlearning highlights SAeUron's state-of-the-art performance. Moreover, we show that with a single SAE, we can remove multiple concepts simultaneously and that in contrast to other methods, SAeUron mitigates the possibility of generating unwanted content, even under adversarial attack. Code and checkpoints are available at: https://github.com/cywinski/SAeUron.