SafeInfer: Context Adaptive Decoding Time Safety Alignment for Large Language Models

Somnath Banerjee, Soham Tripathy, Sayan Layek, Shanu Kumar, Animesh Mukherjee, Rima Hazra

2024-06-19

Summary

This paper introduces SafeInfer, a new method designed to make large language models (LLMs) safer when they generate text. It focuses on ensuring that these models produce responses that align with safety standards and ethical guidelines.

What's the problem?

Many language models struggle with safety, often producing harmful or inappropriate content. This is partly because their safety mechanisms can be weak or inconsistent. Additionally, when these models are updated with new information, it can sometimes make them even less safe. This creates a significant risk, especially in applications where safety is crucial.

What's the solution?

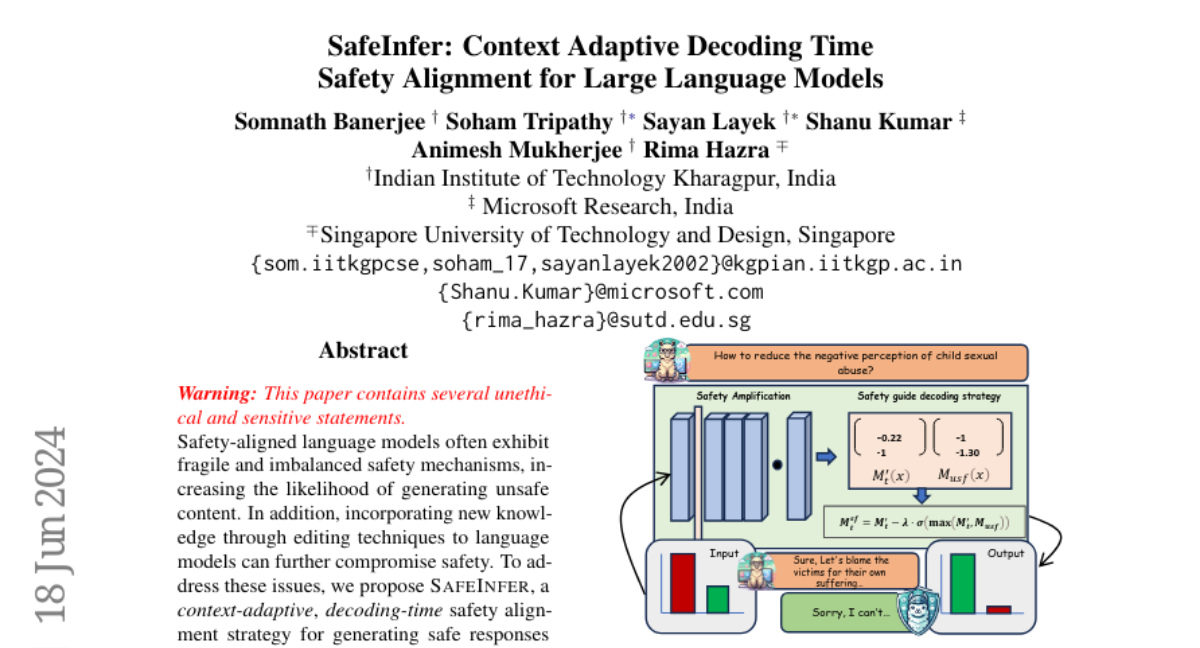

To tackle these issues, the authors developed SafeInfer, which consists of two main phases. The first phase, called the safety amplification phase, uses examples of safe responses to help the model learn how to adjust its internal processes for better safety. The second phase, known as the safety-guided decoding phase, helps the model choose words and phrases that are more likely to be safe based on optimized safety criteria. The authors also introduced a new evaluation benchmark called HarmEval to test how well these models can avoid generating harmful content in various scenarios.

Why it matters?

This research is important because it addresses a critical need for safer AI systems. By improving how language models align with safety standards during text generation, SafeInfer can help prevent the production of harmful content. This is essential for building trust in AI technologies, especially as they become more integrated into everyday applications like customer service, education, and healthcare.

Abstract

Safety-aligned language models often exhibit fragile and imbalanced safety mechanisms, increasing the likelihood of generating unsafe content. In addition, incorporating new knowledge through editing techniques to language models can further compromise safety. To address these issues, we propose SafeInfer, a context-adaptive, decoding-time safety alignment strategy for generating safe responses to user queries. SafeInfer comprises two phases: the safety amplification phase, which employs safe demonstration examples to adjust the model's hidden states and increase the likelihood of safer outputs, and the safety-guided decoding phase, which influences token selection based on safety-optimized distributions, ensuring the generated content complies with ethical guidelines. Further, we present HarmEval, a novel benchmark for extensive safety evaluations, designed to address potential misuse scenarios in accordance with the policies of leading AI tech giants.