Segment Any Motion in Videos

Nan Huang, Wenzhao Zheng, Chenfeng Xu, Kurt Keutzer, Shanghang Zhang, Angjoo Kanazawa, Qianqian Wang

2025-03-31

Summary

This paper is about teaching AI to identify and separate moving objects in videos, similar to how humans can easily spot movement.

What's the problem?

AI systems often struggle to identify moving objects in videos accurately. Earlier methods rely mostly on optical flow, which breaks down under motion blur, partial or complex motion, and distracting backgrounds.

What's the solution?

The researchers developed a method that combines long-range tracking of points across many frames (motion trajectories) with semantic, meaning-based features from DINO. It then prompts SAM2 iteratively to turn the sparse tracked points into detailed pixel-level masks of the moving objects.
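To make the prompting idea concrete, here is a minimal Python sketch of one way an iterative prompting loop could turn sparse moving points into dense masks. It is not the authors' code: `iterative_prompting`, `segment_fn` and `toy_segment` are hypothetical names, `segment_fn` only stands in for a promptable segmenter such as SAM2 (no real SAM2 API is called), and picking the first uncovered point as the next prompt is an assumption made for brevity.

```python
import numpy as np


def iterative_prompting(points, is_dynamic, segment_fn, max_rounds=10):
    """Hypothetical sketch: densify sparse moving points into per-object masks.

    points:     (N, 2) integer (x, y) pixel coordinates of tracked points in one frame.
    is_dynamic: (N,) boolean motion labels, one per trajectory.
    segment_fn: stand-in for a promptable segmenter (e.g. SAM2); takes one
                positive (x, y) prompt and returns an (H, W) boolean mask.
    """
    remaining = np.flatnonzero(is_dynamic)  # indices of moving points not yet covered
    masks = []
    for _ in range(max_rounds):
        if remaining.size == 0:
            break
        # Prompt with the first moving point that no mask covers yet.
        x, y = points[remaining[0]]
        mask = segment_fn(x, y)
        masks.append(mask)
        # Mark every remaining moving point that falls inside the new mask (row=y, col=x).
        covered = mask[points[remaining, 1], points[remaining, 0]]
        covered[0] = True  # always retire the prompt point so the loop makes progress
        remaining = remaining[~covered]
    return masks


# Toy stand-in segmenter: returns the rectangle that contains the prompt point.
H, W = 8, 8
RECTS = [(0, 0, 4, 4), (4, 4, 8, 8)]  # (x0, y0, x1, y1), end-exclusive


def toy_segment(x, y):
    mask = np.zeros((H, W), dtype=bool)
    for x0, y0, x1, y1 in RECTS:
        if x0 <= x < x1 and y0 <= y < y1:
            mask[y0:y1, x0:x1] = True
    return mask


points = np.array([[1, 1], [2, 3], [5, 6], [7, 7], [0, 7]])
is_dynamic = np.array([True, True, True, True, False])  # last point is static
masks = iterative_prompting(points, is_dynamic, toy_segment)
print(len(masks))  # 2: one prompt per moving object
```

The static point at (0, 7) is never used as a prompt, and the second point of each object is already covered by the first mask, so two prompts suffice. A full video pipeline would also need to carry masks across frames, which this single-frame sketch leaves out.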

Why does it matter?

This work matters because it can help AI better understand videos, which is useful for applications like self-driving cars, video editing, and surveillance.

Abstract

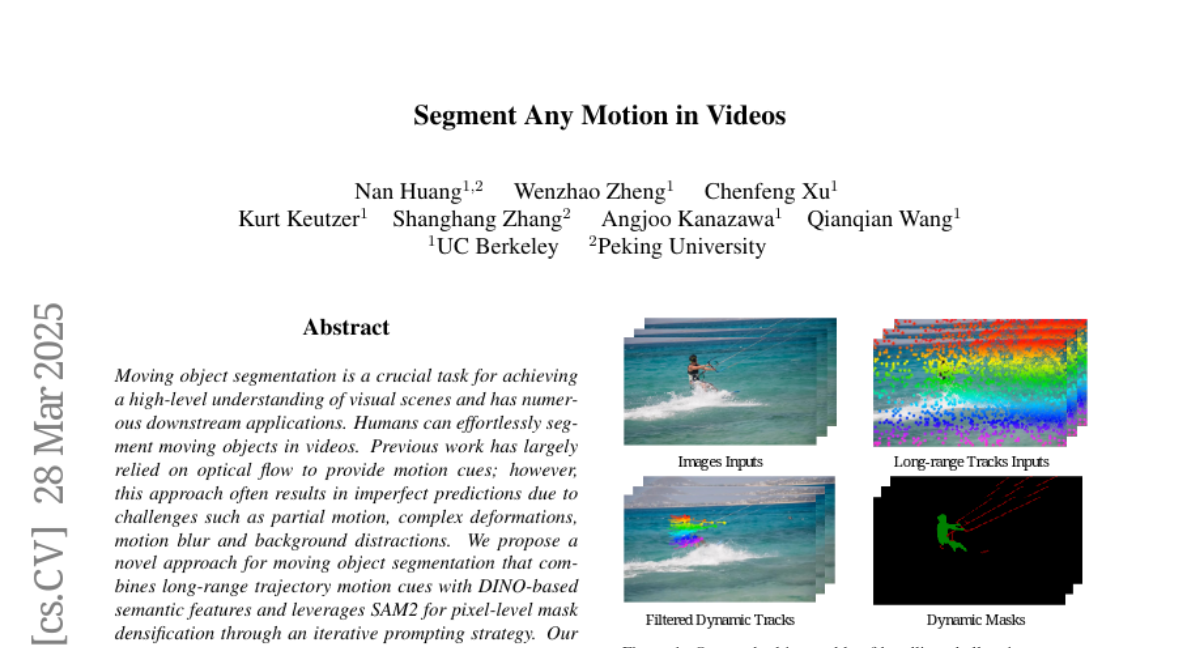

Moving object segmentation is a crucial task for achieving a high-level understanding of visual scenes and has numerous downstream applications. Humans can effortlessly segment moving objects in videos. Previous work has largely relied on optical flow to provide motion cues; however, this approach often results in imperfect predictions due to challenges such as partial motion, complex deformations, motion blur and background distractions. We propose a novel approach for moving object segmentation that combines long-range trajectory motion cues with DINO-based semantic features and leverages SAM2 for pixel-level mask densification through an iterative prompting strategy. Our model employs Spatio-Temporal Trajectory Attention and Motion-Semantic Decoupled Embedding to prioritize motion while integrating semantic support. Extensive testing on diverse datasets demonstrates state-of-the-art performance, excelling in challenging scenarios and fine-grained segmentation of multiple objects. Our code is available at https://motion-seg.github.io/.
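The abstract names Spatio-Temporal Trajectory Attention but does not specify it. As a rough illustration only, the NumPy sketch below assumes it factorizes attention over point trajectories: each trajectory first attends over its own time steps, then all trajectories at the same frame attend to one another. The function names, single-head design, residual connections and random weights are assumptions, and neither the Motion-Semantic Decoupled Embedding nor the DINO features are modeled.

```python
import numpy as np


def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)  # subtract max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def self_attention(x, wq, wk, wv):
    """Single-head scaled dot-product self-attention over the second-to-last axis.

    x: (..., L, D); wq, wk, wv: (D, D) projection matrices.
    """
    q, k, v = x @ wq, x @ wk, x @ wv
    scores = q @ np.swapaxes(k, -1, -2) / np.sqrt(x.shape[-1])  # (..., L, L)
    return softmax(scores, axis=-1) @ v


def trajectory_attention(feats, params):
    """Hypothetical factorized attention over N trajectories and T frames.

    feats: (N, T, D) per-point features.
    1) temporal: each trajectory attends over its own T time steps;
    2) spatial: at each frame, the N trajectories attend to one another.
    """
    x = feats + self_attention(feats, *params["temporal"])  # attends over T
    xt = np.swapaxes(x, 0, 1)                                # (T, N, D)
    xt = xt + self_attention(xt, *params["spatial"])         # attends over N
    return np.swapaxes(xt, 0, 1)                             # back to (N, T, D)


rng = np.random.default_rng(0)
N, T, D = 5, 6, 8
params = {name: [rng.normal(scale=D ** -0.5, size=(D, D)) for _ in range(3)]
          for name in ("temporal", "spatial")}
feats = rng.normal(size=(N, T, D))
out = trajectory_attention(feats, params)
print(out.shape)  # (5, 6, 8)
```

Factorizing the two axes keeps each attention map at T×T or N×N rather than (NT)×(NT). Because no positional encoding is added across trajectories, reordering the input trajectories simply reorders the output, which is a quick sanity check for this kind of layer.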