Segment Any Text: A Universal Approach for Robust, Efficient and Adaptable Sentence Segmentation

Markus Frohmann, Igor Sterner, Ivan Vulić, Benjamin Minixhofer, Markus Schedl

2024-06-26

Summary

This paper introduces Segment Any Text (SaT), a new model designed to improve how text is segmented into sentences. It aims to be robust, efficient, and adaptable across different types of text, even when punctuation is missing.

What's the problem?

Traditional sentence segmenters use rules or statistical methods built around lexical features such as punctuation. They break down when punctuation is missing or when the text comes from an unfamiliar domain, such as song lyrics or legal documents. According to the authors, no prior method is at once robust to missing punctuation, adaptable to new domains, and efficient.
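To make the failure mode concrete, here is a minimal sketch of a punctuation-based splitter. It is a deliberately naive illustration, not one of the baselines evaluated in the paper; the function name `naive_split` and the sample strings are made up for this example.

```python
import re


def naive_split(text: str) -> list[str]:
    """Split after '.', '!' or '?' when followed by whitespace.

    Hypothetical toy rule, used only to show why punctuation-driven
    segmentation is brittle.
    """
    return [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]


well_formed = "The court adjourned. The verdict is due Monday."
no_punct = "the court adjourned the verdict is due monday"
abbrev = "Dr. Smith arrived late. The hearing began."

# Clean text: the rule finds both sentences.
print(naive_split(well_formed))
# ['The court adjourned.', 'The verdict is due Monday.']

# No punctuation (e.g. a speech transcript): nothing to split on.
print(naive_split(no_punct))
# ['the court adjourned the verdict is due monday']

# An abbreviation triggers a spurious boundary.
print(naive_split(abbrev))
# ['Dr.', 'Smith arrived late.', 'The hearing began.']
```

Rule lists can be extended to cover abbreviations, but the unpunctuated case offers no surface cue at all, which is the situation the paper targets.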

What's the solution?

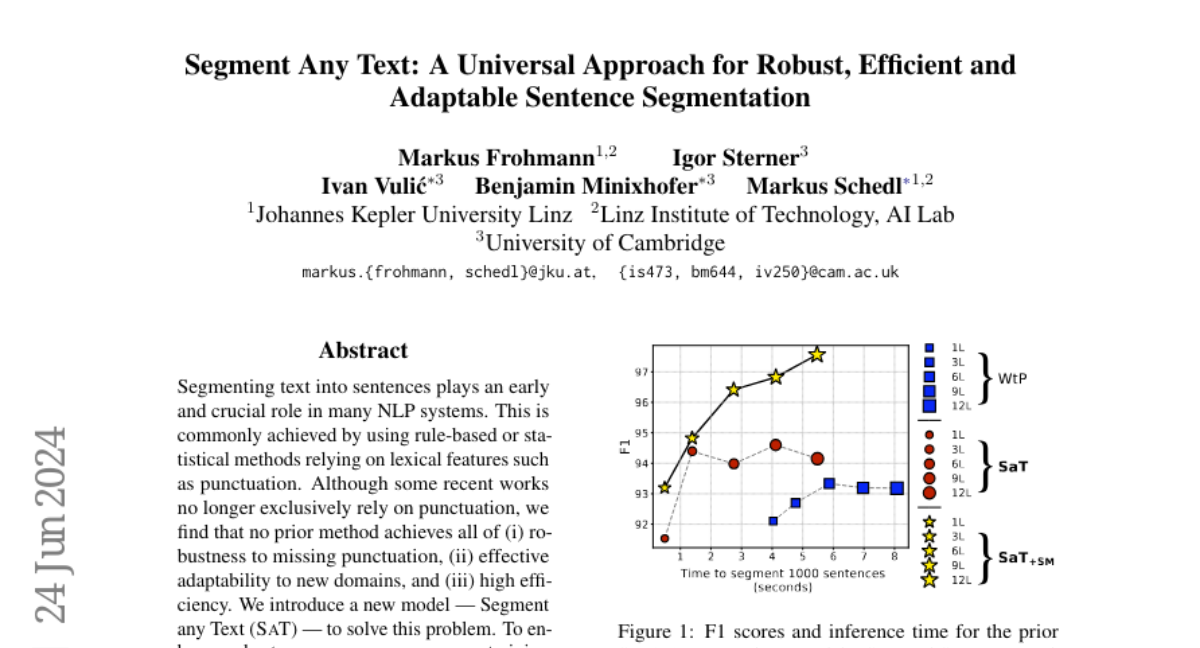

The authors built SaT to be robust to missing punctuation, adaptable to new domains, and fast. They introduce a pretraining scheme that reduces the model's reliance on punctuation, plus an extra parameter-efficient fine-tuning stage that adapts it to distinct domains such as lyrics and legal documents. Architectural changes make the model about three times faster than the previous state of the art. Evaluated on 8 corpora spanning diverse domains and languages, SaT outperforms all baselines, including strong LLMs, especially on poorly formatted text.
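One way to picture training data that discourages reliance on punctuation is sketched below. This is an assumption-laden illustration, not the authors' pretraining recipe: the function `corrupt`, the `drop_prob` parameter, and the character-level label format are all hypothetical choices made for this example.

```python
import random
import string


def corrupt(sentences, drop_prob=0.5, seed=0):
    """Build one training example from already-segmented sentences.

    Hypothetical sketch: with probability `drop_prob`, trailing punctuation
    is stripped from a sentence. Returns (text, labels), where labels[i] == 1
    marks character i as the last character of a sentence.
    """
    rng = random.Random(seed)
    parts, labels = [], []
    for sent in sentences:
        sent = sent.strip()
        if rng.random() < drop_prob:
            # Remove the punctuation cue but keep the boundary label.
            sent = sent.rstrip(string.punctuation).rstrip()
        if not sent:
            continue  # sentence consisted only of punctuation
        if parts:
            parts.append(" ")
            labels.append(0)  # the joining space is never a boundary
        parts.append(sent)
        labels.extend([0] * (len(sent) - 1) + [1])
    return "".join(parts), labels


text, labels = corrupt(["Hello there.", "How are you?"], drop_prob=1.0)
print(text)  # Hello there How are you
print([i for i, y in enumerate(labels) if y == 1])  # [10, 22]
```

Because the boundary labels survive even when the punctuation does not, a model trained on such examples has to learn boundaries from the wording itself and not from the marks alone.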

Why does it matter?

This research is important because it provides a more effective way to segment text into sentences, which is a crucial step in many natural language processing (NLP) applications. By improving how models handle diverse types of text, SaT can enhance various technologies, such as chatbots, translation services, and any system that relies on understanding written language.

Abstract

Segmenting text into sentences plays an early and crucial role in many NLP systems. This is commonly achieved by using rule-based or statistical methods relying on lexical features such as punctuation. Although some recent works no longer exclusively rely on punctuation, we find that no prior method achieves all of (i) robustness to missing punctuation, (ii) effective adaptability to new domains, and (iii) high efficiency. We introduce a new model - Segment any Text (SaT) - to solve this problem. To enhance robustness, we propose a new pretraining scheme that ensures less reliance on punctuation. To address adaptability, we introduce an extra stage of parameter-efficient fine-tuning, establishing state-of-the-art performance in distinct domains such as verses from lyrics and legal documents. Along the way, we introduce architectural modifications that result in a threefold gain in speed over the previous state of the art and solve spurious reliance on context far in the future. Finally, we introduce a variant of our model with fine-tuning on a diverse, multilingual mixture of sentence-segmented data, acting as a drop-in replacement and enhancement for existing segmentation tools. Overall, our contributions provide a universal approach for segmenting any text. Our method outperforms all baselines - including strong LLMs - across 8 corpora spanning diverse domains and languages, especially in practically relevant situations where text is poorly formatted. Our models and code, including documentation, are available at https://huggingface.co/segment-any-text under the MIT license.