Self-MoE: Towards Compositional Large Language Models with Self-Specialized Experts

Junmo Kang, Leonid Karlinsky, Hongyin Luo, Zhen Wang, Jacob Hansen, James Glass, David Cox, Rameswar Panda, Rogerio Feris, Alan Ritter

2024-06-20

Summary

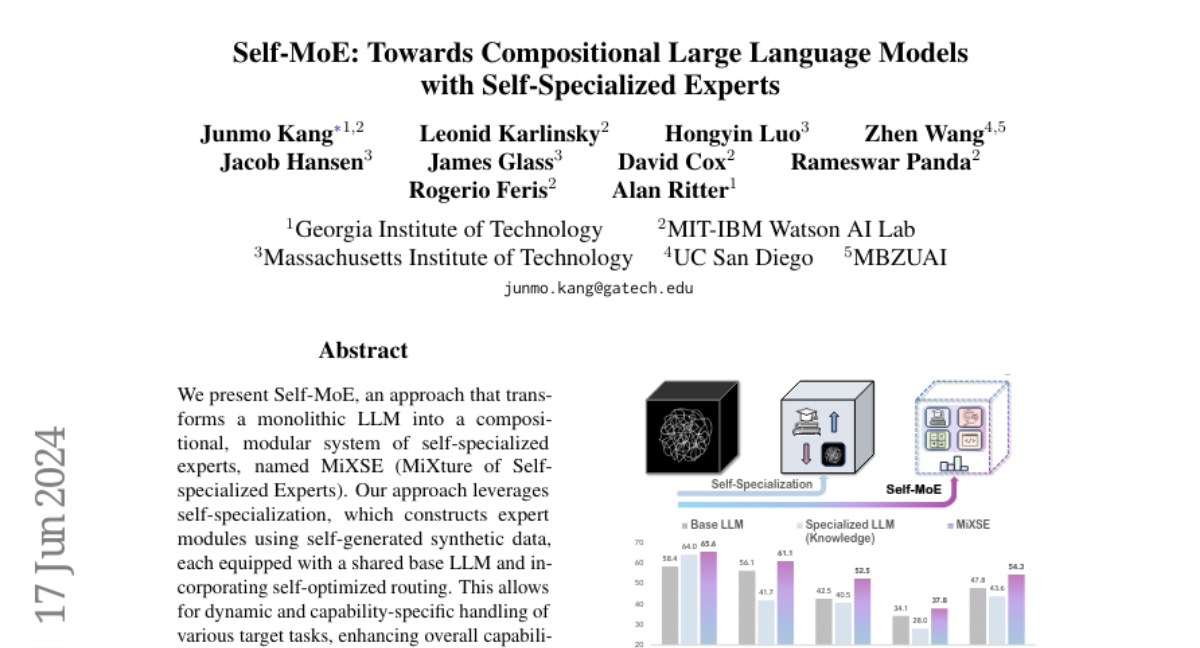

This paper introduces Self-MoE, a new method for transforming large language models (LLMs) into systems of specialized experts called MiXSE. This approach allows the model to better handle different tasks by creating expert modules that focus on specific areas without needing a lot of human-labeled data.

What's the problem?

Traditional LLMs are often designed as single, large models that can struggle to adapt to different tasks efficiently. They usually require extensive human-labeled data to improve their performance in specific areas, which can be time-consuming and expensive. This makes it challenging for these models to specialize in various tasks while maintaining good performance across the board.

What's the solution?

To solve this problem, the authors developed Self-MoE, which creates smaller expert modules from a base LLM using self-generated synthetic data. This means that instead of relying on human annotations, the model generates its own training data to help each expert specialize in different tasks. The system also includes a routing mechanism that helps determine which expert to use for each input, allowing for more flexible and efficient task handling. The results showed that Self-MoE significantly improves performance in areas like knowledge, reasoning, math, and coding compared to traditional methods.

Why it matters?

This research is important because it offers a way to make LLMs more adaptable and efficient without needing extensive resources. By transforming monolithic models into modular systems of specialized experts, Self-MoE can enhance the capabilities of AI in various applications. This could lead to more effective AI tools that can learn and perform multiple tasks better than before, making them more useful in real-world scenarios.

Abstract

We present Self-MoE, an approach that transforms a monolithic LLM into a compositional, modular system of self-specialized experts, named MiXSE (MiXture of Self-specialized Experts). Our approach leverages self-specialization, which constructs expert modules using self-generated synthetic data, each equipped with a shared base LLM and incorporating self-optimized routing. This allows for dynamic and capability-specific handling of various target tasks, enhancing overall capabilities, without extensive human-labeled data and added parameters. Our empirical results reveal that specializing LLMs may exhibit potential trade-offs in performances on non-specialized tasks. On the other hand, our Self-MoE demonstrates substantial improvements over the base LLM across diverse benchmarks such as knowledge, reasoning, math, and coding. It also consistently outperforms other methods, including instance merging and weight merging, while offering better flexibility and interpretability by design with semantic experts and routing. Our findings highlight the critical role of modularity and the potential of self-improvement in achieving efficient, scalable, and adaptable systems.