Self-Training with Direct Preference Optimization Improves Chain-of-Thought Reasoning

Tianduo Wang, Shichen Li, Wei Lu

2024-07-30

Summary

This paper discusses a new method called Direct Preference Optimization (DPO) that improves how language models (LMs) learn to solve mathematical reasoning problems. It enhances their reasoning abilities by allowing them to learn from their own outputs, making the training process more efficient and effective.

What's the problem?

Training language models for tasks like mathematical reasoning usually requires high-quality data from human experts, which can be expensive and time-consuming to obtain. Existing methods often rely on larger, more powerful models to generate this data, but this approach can be costly and unstable. Additionally, these models may not always produce reliable results because they can behave unpredictably.

What's the solution?

To overcome these challenges, the authors propose using self-training, where models learn from their own outputs instead of needing extensive external data. They enhance this self-training process by incorporating DPO, which helps guide the models toward better reasoning by using preference data. This allows the models to improve their performance on various mathematical reasoning tasks without needing as much training data from large proprietary models. The experiments showed that this method significantly boosts the reasoning capabilities of smaller language models.

Why it matters?

This research is important because it provides a more cost-effective and scalable way to train language models for complex reasoning tasks. By improving how these models learn, DPO can lead to better performance in applications that require mathematical reasoning, such as educational tools, automated tutoring systems, and other AI-driven solutions that assist with problem-solving.

Abstract

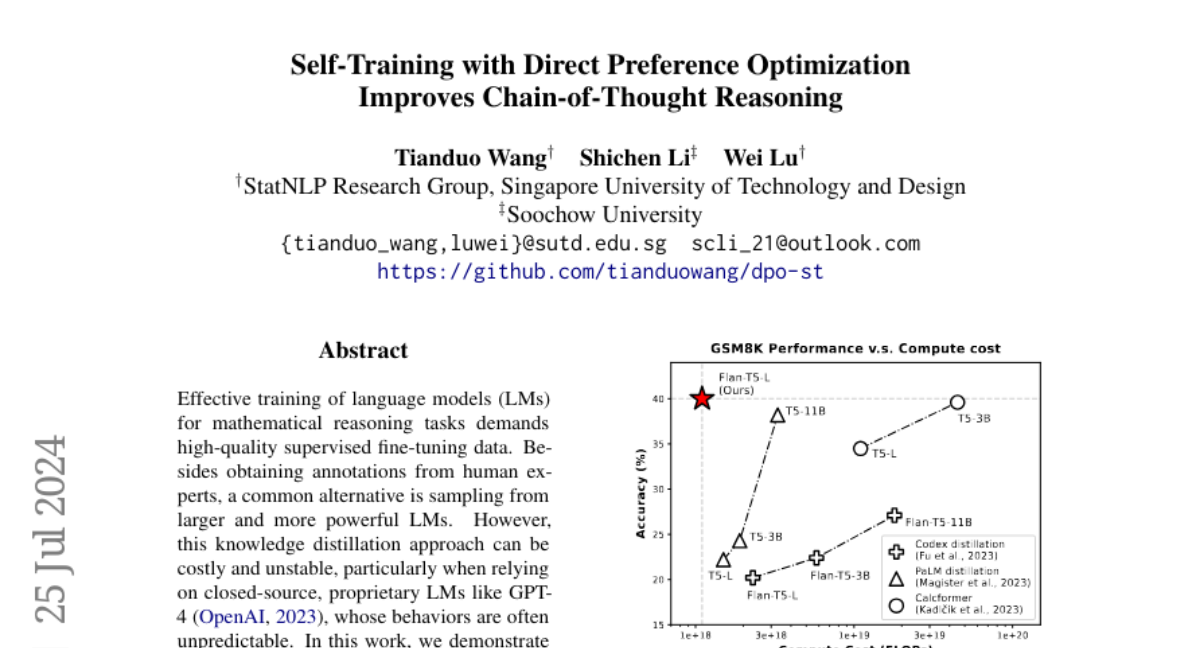

Effective training of language models (LMs) for mathematical reasoning tasks demands high-quality supervised fine-tuning data. Besides obtaining annotations from human experts, a common alternative is sampling from larger and more powerful LMs. However, this knowledge distillation approach can be costly and unstable, particularly when relying on closed-source, proprietary LMs like GPT-4, whose behaviors are often unpredictable. In this work, we demonstrate that the reasoning abilities of small-scale LMs can be enhanced through self-training, a process where models learn from their own outputs. We also show that the conventional self-training can be further augmented by a preference learning algorithm called Direct Preference Optimization (DPO). By integrating DPO into self-training, we leverage preference data to guide LMs towards more accurate and diverse chain-of-thought reasoning. We evaluate our method across various mathematical reasoning tasks using different base models. Our experiments show that this approach not only improves LMs' reasoning performance but also offers a more cost-effective and scalable solution compared to relying on large proprietary LMs.