SemiEvol: Semi-supervised Fine-tuning for LLM Adaptation

Junyu Luo, Xiao Luo, Xiusi Chen, Zhiping Xiao, Wei Ju, Ming Zhang

2024-10-22

Summary

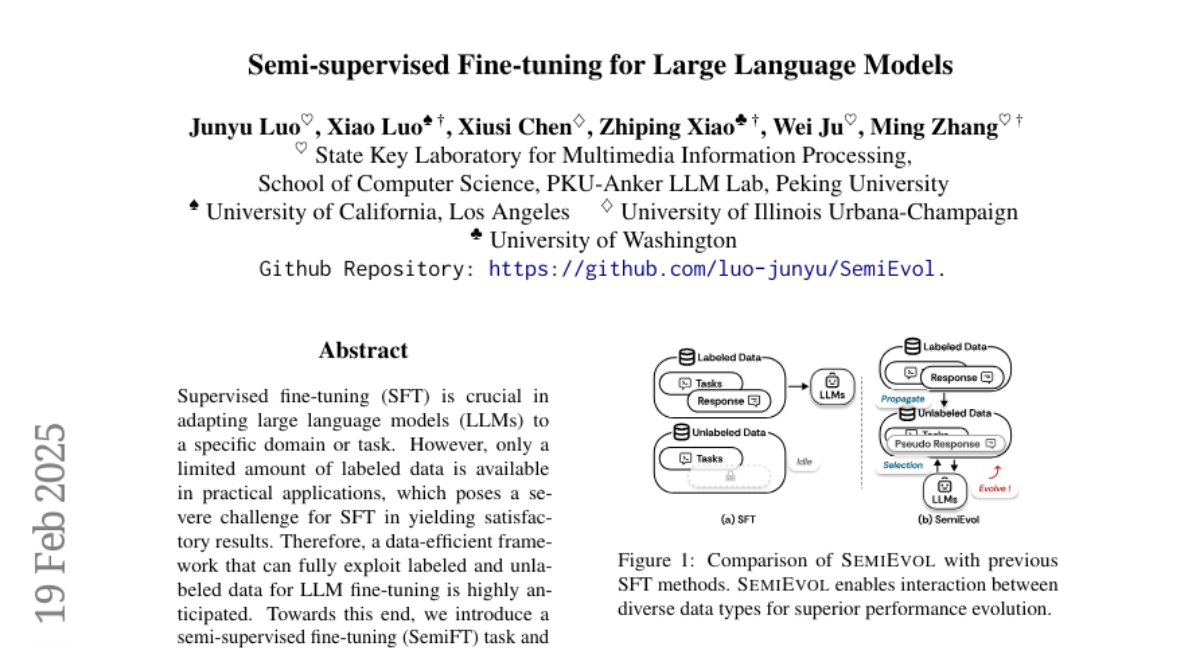

This paper introduces SemiEvol, a semi-supervised fine-tuning framework designed to improve the adaptation of large language models (LLMs) using both labeled and unlabeled data.

What's the problem?

When training large language models, having enough labeled data is essential for good performance. However, in many real-world situations, there is often not enough labeled data available, which makes it difficult for traditional supervised fine-tuning methods to work effectively. This lack of data can lead to models that don’t perform well on specific tasks.

What's the solution?

To solve this problem, the authors developed SemiEvol, which uses a semi-supervised approach to make better use of both labeled and unlabeled data. The framework works by first propagating knowledge from the labeled data to the unlabeled data using two methods: in-weight and in-context techniques. Then, it selects high-quality pseudo-responses through collaborative learning. This allows the model to learn more effectively even with limited labeled data. The authors tested SemiEvol on various datasets and found that it significantly improved model performance compared to traditional methods.

Why it matters?

This research is important because it provides a way to enhance the training of language models without needing a lot of labeled data. By making better use of available resources, SemiEvol can help improve AI applications in fields like customer service, education, and content generation, where high-quality language understanding is crucial.

Abstract

Supervised fine-tuning (SFT) is crucial in adapting large language models (LLMs) to a specific domain or task. However, only a limited amount of labeled data is available in practical applications, which poses a severe challenge for SFT in yielding satisfactory results. Therefore, a data-efficient framework that can fully exploit labeled and unlabeled data for LLM fine-tuning is highly anticipated. Towards this end, we introduce a semi-supervised fine-tuning framework named SemiEvol for LLM adaptation from a propagate-and-select manner. For knowledge propagation, SemiEvol adopts a bi-level approach, propagating knowledge from labeled data to unlabeled data through both in-weight and in-context methods. For knowledge selection, SemiEvol incorporates a collaborative learning mechanism, selecting higher-quality pseudo-response samples. We conducted experiments using GPT-4o-mini and Llama-3.1 on seven general or domain-specific datasets, demonstrating significant improvements in model performance on target data. Furthermore, we compared SemiEvol with SFT and self-evolution methods, highlighting its practicality in hybrid data scenarios.