Sentence-wise Speech Summarization: Task, Datasets, and End-to-End Modeling with LM Knowledge Distillation

Kohei Matsuura, Takanori Ashihara, Takafumi Moriya, Masato Mimura, Takatomo Kano, Atsunori Ogawa, Marc Delcroix

2024-08-02

Summary

This paper introduces a method called sentence-wise speech summarization (Sen-SSum), which creates text summaries from spoken documents by processing them one sentence at a time. It combines automatic speech recognition with summarization techniques to make the process efficient and effective.

What's the problem?

Creating summaries from spoken content is challenging because traditional methods often process entire recordings at once, which can be slow and inefficient. This makes it hard to quickly generate concise summaries that capture the main points of a speech or lecture. Additionally, existing models may not perform well when trying to summarize audio directly without a clear structure.

What's the solution?

The authors propose Sen-SSum, which generates summaries in a sentence-by-sentence manner. They developed two datasets, Mega-SSum and CSJ-SSum, to evaluate their approach. The study compares two types of models: cascade models that first transcribe speech into text and then summarize it, and end-to-end (E2E) models that aim to do both tasks in one step. While E2E models are more efficient, they initially performed worse than cascade models. To improve E2E model performance, the authors introduced knowledge distillation, where the E2E model learns from pseudo-summaries created by the cascade model, leading to better results.

Why it matters?

This research is important because it enhances the ability to quickly and accurately summarize spoken content, making it useful for applications like meeting notes, lecture summaries, and more. By improving how machines understand and process speech, Sen-SSum can help people access information faster and more efficiently.

Abstract

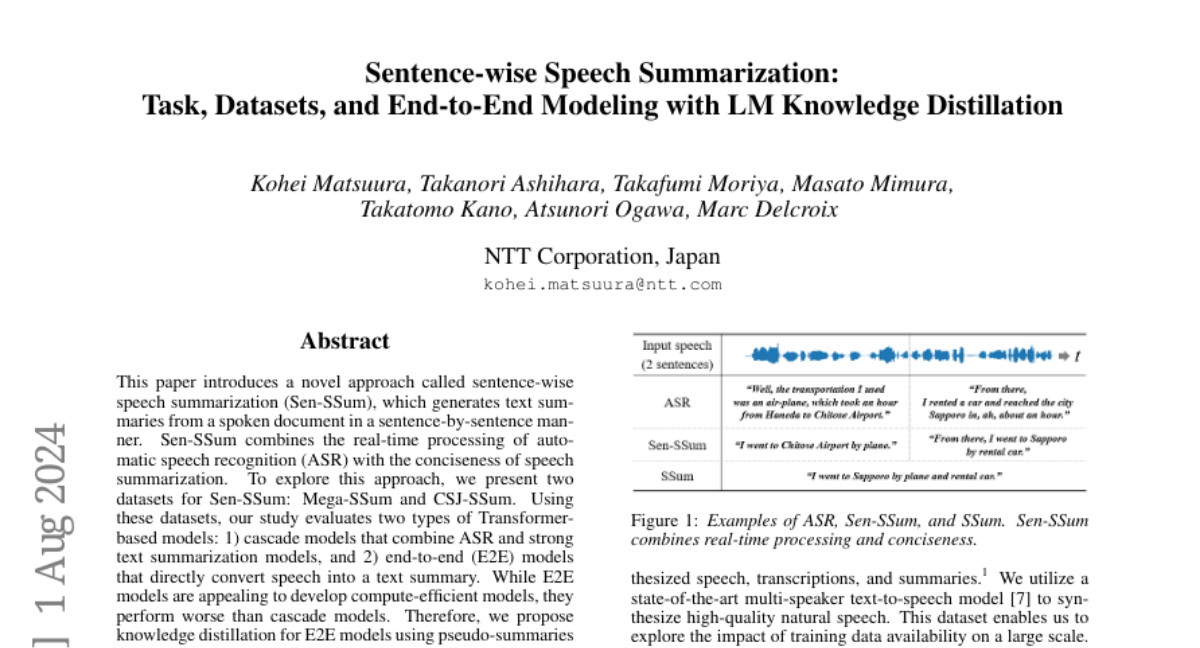

This paper introduces a novel approach called sentence-wise speech summarization (Sen-SSum), which generates text summaries from a spoken document in a sentence-by-sentence manner. Sen-SSum combines the real-time processing of automatic speech recognition (ASR) with the conciseness of speech summarization. To explore this approach, we present two datasets for Sen-SSum: Mega-SSum and CSJ-SSum. Using these datasets, our study evaluates two types of Transformer-based models: 1) cascade models that combine ASR and strong text summarization models, and 2) end-to-end (E2E) models that directly convert speech into a text summary. While E2E models are appealing to develop compute-efficient models, they perform worse than cascade models. Therefore, we propose knowledge distillation for E2E models using pseudo-summaries generated by the cascade models. Our experiments show that this proposed knowledge distillation effectively improves the performance of the E2E model on both datasets.