SlowFast-VGen: Slow-Fast Learning for Action-Driven Long Video Generation

Yining Hong, Beide Liu, Maxine Wu, Yuanhao Zhai, Kai-Wei Chang, Linjie Li, Kevin Lin, Chung-Ching Lin, Jianfeng Wang, Zhengyuan Yang, Yingnian Wu, Lijuan Wang

2024-10-31

Summary

This paper introduces SlowFast-VGen, a new system designed to generate long action-driven videos by combining slow and fast learning techniques.

What's the problem?

Current video generation models rely mainly on slow learning: they acquire general knowledge of world dynamics by pre-training on vast amounts of data. They largely overlook fast learning, the rapid storage of a new experience as episodic memory. As a result, longer videos become inconsistent. Frames that are far apart in time fall outside the model's context window, so the model cannot keep them coherent, which leads to awkward or unrealistic transitions between scenes.

What's the solution?

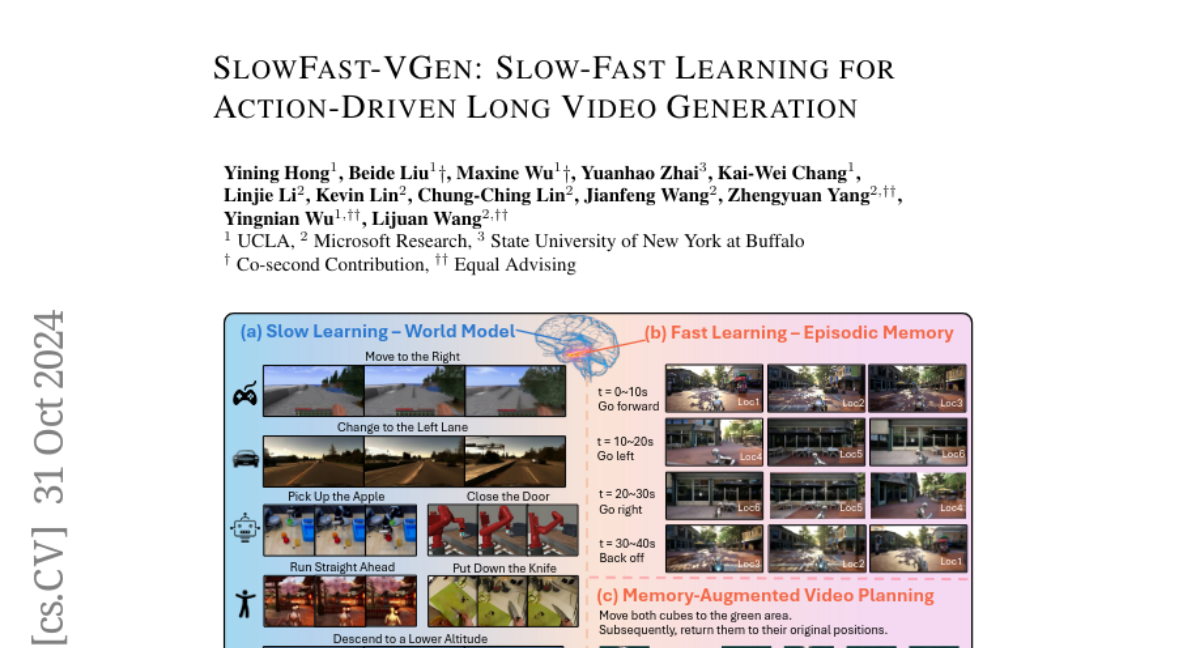

To solve this issue, the authors developed SlowFast-VGen, a dual-speed learning system. Slow learning, handled by a masked conditional video diffusion model, captures general world dynamics. Fast learning happens at inference time: a temporal LoRA module updates its parameters based on recent inputs and outputs, storing episodic memory in those parameters. This lets the model remember important details from earlier experiences while still understanding the broader context. The authors also collected a large dataset of 200,000 videos with language action annotations to train the model. In their experiments, SlowFast-VGen outperforms baselines on action-driven video generation, with an FVD score of 514 versus 782, and stays more consistent in longer videos, with an average of 0.37 scene cuts versus 0.89.
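
The fast-learning step, which the abstract describes as an inference-time update of temporal LoRA parameters, can be illustrated with a toy example. The sketch below is not the authors' implementation: it replaces the video diffusion model with a single frozen linear map, and the names `W_slow`, `A`, `B`, `fast_update`, the learning rate, and the synthetic "episode" data are all assumptions made for illustration. It only shows the general LoRA idea of keeping the pre-trained weights fixed while a small low-rank adapter absorbs information from recent inputs and outputs.

```python
import numpy as np

rng = np.random.default_rng(0)
d, rank, n = 8, 2, 64

# Frozen "slow" weights, standing in for the pre-trained model (toy assumption).
W_slow = rng.normal(size=(d, d)) / np.sqrt(d)
W_slow_copy = W_slow.copy()

# Low-rank "fast" adapter: effective weights are W_slow + B @ A.
# B starts at zero, so the adapter is initially a no-op.
A = rng.normal(size=(rank, d)) * 0.1
B = np.zeros((d, rank))

def predict(x, A, B):
    return x @ (W_slow + B @ A).T

def mse(x, y, A, B):
    return float(np.mean((predict(x, A, B) - y) ** 2))

def fast_update(x, y, A, B, lr=0.2, steps=500):
    """Gradient descent on the MSE with respect to A and B only."""
    for _ in range(steps):
        err = predict(x, A, B) - y
        # Gradient of the mean squared error w.r.t. the product B @ A.
        grad_delta = 2.0 * err.T @ x / err.size
        grad_A = B.T @ grad_delta
        grad_B = grad_delta @ A.T
        A = A - lr * grad_A
        B = B - lr * grad_B
    return A, B

# Hypothetical "episode": recent inputs and outputs that differ from what
# the frozen weights alone would predict, by a low-rank shift.
true_shift = 0.1 * rng.normal(size=(d, rank)) @ rng.normal(size=(rank, d))
x = rng.normal(size=(n, d))
y = x @ (W_slow + true_shift).T

loss_before = mse(x, y, A, B)
A, B = fast_update(x, y, A, B)
loss_after = mse(x, y, A, B)
print(f"episode loss before: {loss_before:.4f}, after: {loss_after:.4f}")
```

Only `A` and `B` change during the update, so the episode is stored in `2 * rank * d` adapter parameters while the `d * d` slow weights stay untouched.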

Why does it matter?

This research is significant because it improves how AI can create long videos that look natural and flow well. By integrating both slow and fast learning methods, SlowFast-VGen can enhance video generation for applications like filmmaking, gaming, and virtual reality, making it easier to produce high-quality content that engages viewers.

Abstract

Human beings are endowed with a complementary learning system, which bridges the slow learning of general world dynamics with fast storage of episodic memory from a new experience. Previous video generation models, however, primarily focus on slow learning by pre-training on vast amounts of data, overlooking the fast learning phase crucial for episodic memory storage. This oversight leads to inconsistencies across temporally distant frames when generating longer videos, as these frames fall beyond the model's context window. To this end, we introduce SlowFast-VGen, a novel dual-speed learning system for action-driven long video generation. Our approach incorporates a masked conditional video diffusion model for the slow learning of world dynamics, alongside an inference-time fast learning strategy based on a temporal LoRA module. Specifically, the fast learning process updates its temporal LoRA parameters based on local inputs and outputs, thereby efficiently storing episodic memory in its parameters. We further propose a slow-fast learning loop algorithm that seamlessly integrates the inner fast learning loop into the outer slow learning loop, enabling the recall of prior multi-episode experiences for context-aware skill learning. To facilitate the slow learning of an approximate world model, we collect a large-scale dataset of 200k videos with language action annotations, covering a wide range of scenarios. Extensive experiments show that SlowFast-VGen outperforms baselines across various metrics for action-driven video generation, achieving an FVD score of 514 compared to 782, and maintaining consistency in longer videos, with an average of 0.37 scene cuts versus 0.89. The slow-fast learning loop algorithm significantly enhances performance on long-horizon planning tasks as well. Project Website: https://slowfast-vgen.github.io
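
The abstract does not spell out how the masked conditional video diffusion model applies its mask. One common way to condition a diffusion model on earlier frames, assumed here purely for illustration, is to keep the conditioning frames clean, add noise only to the frames to be generated, and compute the training loss only on those noised frames. The function names `masked_forward_noise` and `masked_loss`, the array shapes, and the `alpha_bar` value are hypothetical.

```python
import numpy as np

def masked_forward_noise(frames, cond_mask, alpha_bar, rng):
    """Noise only the non-conditioning frames.

    frames:    (T, D) array, one flattened frame (or latent) per row
    cond_mask: (T,) boolean array, True for conditioning frames
    alpha_bar: cumulative noise-schedule coefficient in (0, 1)
    """
    eps = rng.normal(size=frames.shape)
    noisy = np.sqrt(alpha_bar) * frames + np.sqrt(1.0 - alpha_bar) * eps
    # Conditioning frames pass through unchanged; the rest are noised.
    model_input = np.where(cond_mask[:, None], frames, noisy)
    return model_input, eps

def masked_loss(eps_pred, eps, cond_mask):
    """Mean squared error on the noised (non-conditioning) frames only."""
    target = ~cond_mask
    return float(np.mean((eps_pred[target] - eps[target]) ** 2))

# Toy usage: the first two of six frames act as conditioning context.
rng = np.random.default_rng(0)
frames = rng.normal(size=(6, 4))
cond_mask = np.array([True, True, False, False, False, False])
model_input, eps = masked_forward_noise(frames, cond_mask, alpha_bar=0.5, rng=rng)

# A prediction that is wrong only on conditioning frames incurs no loss.
eps_pred = eps.copy()
eps_pred[cond_mask] += 10.0
print(masked_loss(eps_pred, eps, cond_mask))
```

Under this assumption, generating a long video chunk by chunk amounts to moving the mask forward: frames produced for one chunk become the clean conditioning frames for the next.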