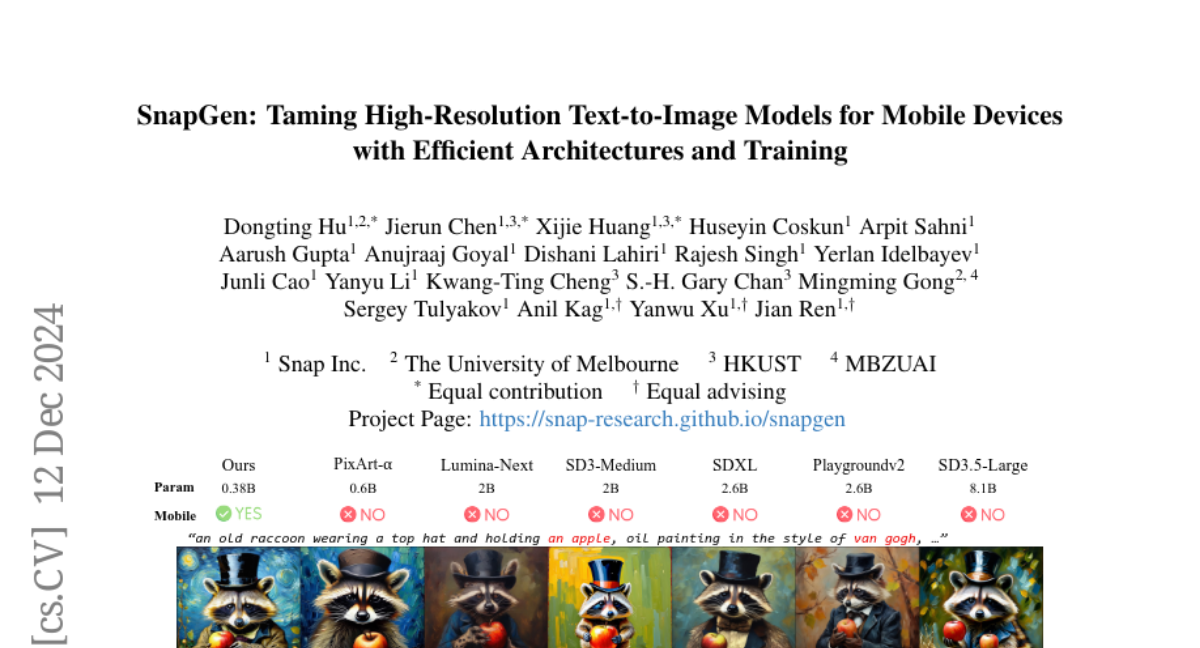

SnapGen: Taming High-Resolution Text-to-Image Models for Mobile Devices with Efficient Architectures and Training

Dongting Hu, Jierun Chen, Xijie Huang, Huseyin Coskun, Arpit Sahni, Aarush Gupta, Anujraaj Goyal, Dishani Lahiri, Rajesh Singh, Yerlan Idelbayev, Junli Cao, Yanyu Li, Kwang-Ting Cheng, S. -H. Gary Chan, Mingming Gong, Sergey Tulyakov, Anil Kag, Yanwu Xu, Jian Ren

2024-12-13

Summary

This paper presents SnapGen, a new model designed to create high-quality images from text on mobile devices quickly and efficiently.

What's the problem?

Current text-to-image models are often too large and slow to work effectively on mobile devices. They generate low-quality images and take a long time to produce results, which makes them impractical for everyday use.

What's the solution?

SnapGen tackles these issues by developing a smaller and faster model that can generate high-resolution images (1024x1024 pixels) in about 1.4 seconds on mobile devices. The authors achieve this by optimizing the model's architecture to reduce its size and improve speed, using techniques like knowledge distillation from larger models to enhance training quality. They also integrate adversarial guidance to streamline the generation process, allowing for quick and efficient image creation.

Why it matters?

This research is significant because it makes powerful text-to-image generation accessible on mobile devices, enabling users to create high-quality images quickly. By improving the efficiency of these models, SnapGen opens up new possibilities for applications in art, design, and social media, allowing more people to harness the power of AI in their creative processes.

Abstract

Existing text-to-image (T2I) diffusion models face several limitations, including large model sizes, slow runtime, and low-quality generation on mobile devices. This paper aims to address all of these challenges by developing an extremely small and fast T2I model that generates high-resolution and high-quality images on mobile platforms. We propose several techniques to achieve this goal. First, we systematically examine the design choices of the network architecture to reduce model parameters and latency, while ensuring high-quality generation. Second, to further improve generation quality, we employ cross-architecture knowledge distillation from a much larger model, using a multi-level approach to guide the training of our model from scratch. Third, we enable a few-step generation by integrating adversarial guidance with knowledge distillation. For the first time, our model SnapGen, demonstrates the generation of 1024x1024 px images on a mobile device around 1.4 seconds. On ImageNet-1K, our model, with only 372M parameters, achieves an FID of 2.06 for 256x256 px generation. On T2I benchmarks (i.e., GenEval and DPG-Bench), our model with merely 379M parameters, surpasses large-scale models with billions of parameters at a significantly smaller size (e.g., 7x smaller than SDXL, 14x smaller than IF-XL).