SparseFlex: High-Resolution and Arbitrary-Topology 3D Shape Modeling

Xianglong He, Zi-Xin Zou, Chia-Hao Chen, Yuan-Chen Guo, Ding Liang, Chun Yuan, Wanli Ouyang, Yan-Pei Cao, Yangguang Li

2025-03-31

Summary

This paper is about a new way to create detailed 3D models that can have any shape, even with holes or complex insides.

What's the problem?

Existing methods for creating 3D models often struggle with complex shapes or lose detail, or they take a lot of computing power.

What's the solution?

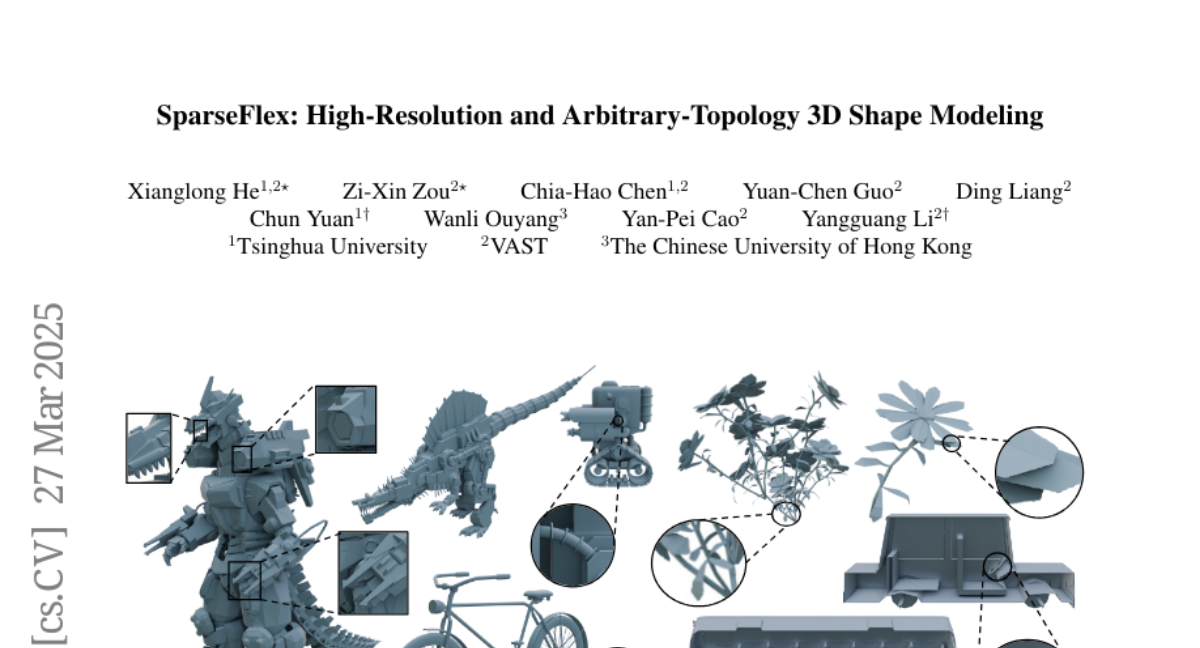

The researchers developed a system called SparseFlex that uses a special structure to focus on the surfaces of the model, making it more efficient and able to handle complex shapes at high resolution.

Why it matters?

This work matters because it can lead to better 3D models for things like design, manufacturing, and virtual reality.

Abstract

Creating high-fidelity 3D meshes with arbitrary topology, including open surfaces and complex interiors, remains a significant challenge. Existing implicit field methods often require costly and detail-degrading watertight conversion, while other approaches struggle with high resolutions. This paper introduces SparseFlex, a novel sparse-structured isosurface representation that enables differentiable mesh reconstruction at resolutions up to 1024^3 directly from rendering losses. SparseFlex combines the accuracy of Flexicubes with a sparse voxel structure, focusing computation on surface-adjacent regions and efficiently handling open surfaces. Crucially, we introduce a frustum-aware sectional voxel training strategy that activates only relevant voxels during rendering, dramatically reducing memory consumption and enabling high-resolution training. This also allows, for the first time, the reconstruction of mesh interiors using only rendering supervision. Building upon this, we demonstrate a complete shape modeling pipeline by training a variational autoencoder (VAE) and a rectified flow transformer for high-quality 3D shape generation. Our experiments show state-of-the-art reconstruction accuracy, with a ~82% reduction in Chamfer Distance and a ~88% increase in F-score compared to previous methods, and demonstrate the generation of high-resolution, detailed 3D shapes with arbitrary topology. By enabling high-resolution, differentiable mesh reconstruction and generation with rendering losses, SparseFlex significantly advances the state-of-the-art in 3D shape representation and modeling.