Speech Slytherin: Examining the Performance and Efficiency of Mamba for Speech Separation, Recognition, and Synthesis

Xilin Jiang, Yinghao Aaron Li, Adrian Nicolas Florea, Cong Han, Nima Mesgarani

2024-07-15

Summary

This paper examines the performance and efficiency of a new model called Mamba for tasks related to speech separation, recognition, and synthesis, comparing it to traditional transformer models.

What's the problem?

As technology advances, there's a need for better models that can handle speech tasks, such as separating different speakers in audio, recognizing spoken words, and generating synthetic speech. However, it's unclear whether Mamba is a better option than existing transformer models for these tasks, especially regarding how well they perform and how efficiently they use resources like memory and processing power.

What's the solution?

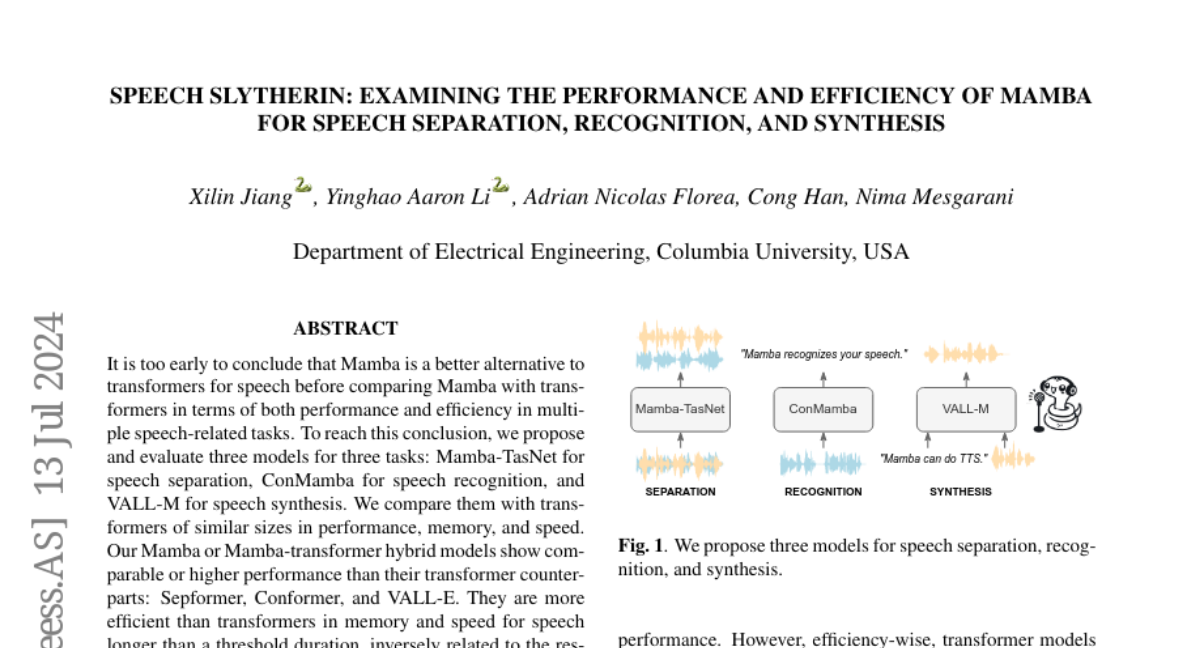

The researchers developed three different models based on Mamba: Mamba-TasNet for separating speech, ConMamba for recognizing speech, and VALL-M for synthesizing speech. They compared these models to similar-sized transformer models to see which performed better in terms of speed and memory usage. The results showed that Mamba models often performed as well or better than transformers for longer speech inputs, but they were not as effective for shorter speech segments or tasks that required combining text and speech inputs.

Why it matters?

This research is important because it helps determine the best models to use for various speech-related tasks. By understanding when Mamba outperforms transformers and vice versa, developers can choose the right tools for applications like virtual assistants and automated transcription services. This contributes to improving how machines understand and generate human speech.

Abstract

It is too early to conclude that Mamba is a better alternative to transformers for speech before comparing Mamba with transformers in terms of both performance and efficiency in multiple speech-related tasks. To reach this conclusion, we propose and evaluate three models for three tasks: Mamba-TasNet for speech separation, ConMamba for speech recognition, and VALL-M for speech synthesis. We compare them with transformers of similar sizes in performance, memory, and speed. Our Mamba or Mamba-transformer hybrid models show comparable or higher performance than their transformer counterparts: Sepformer, Conformer, and VALL-E. They are more efficient than transformers in memory and speed for speech longer than a threshold duration, inversely related to the resolution of a speech token. Mamba for separation is the most efficient, and Mamba for recognition is the least. Further, we show that Mamba is not more efficient than transformer for speech shorter than the threshold duration and performs worse in models that require joint modeling of text and speech, such as cross or masked attention of two inputs. Therefore, we argue that the superiority of Mamba or transformer depends on particular problems and models. Code available at https://github.com/xi-j/Mamba-TasNet and https://github.com/xi-j/Mamba-ASR.