SpreadsheetLLM: Encoding Spreadsheets for Large Language Models

Yuzhang Tian, Jianbo Zhao, Haoyu Dong, Junyu Xiong, Shiyu Xia, Mengyu Zhou, Yun Lin, José Cambronero, Yeye He, Shi Han, Dongmei Zhang

2024-07-15

Summary

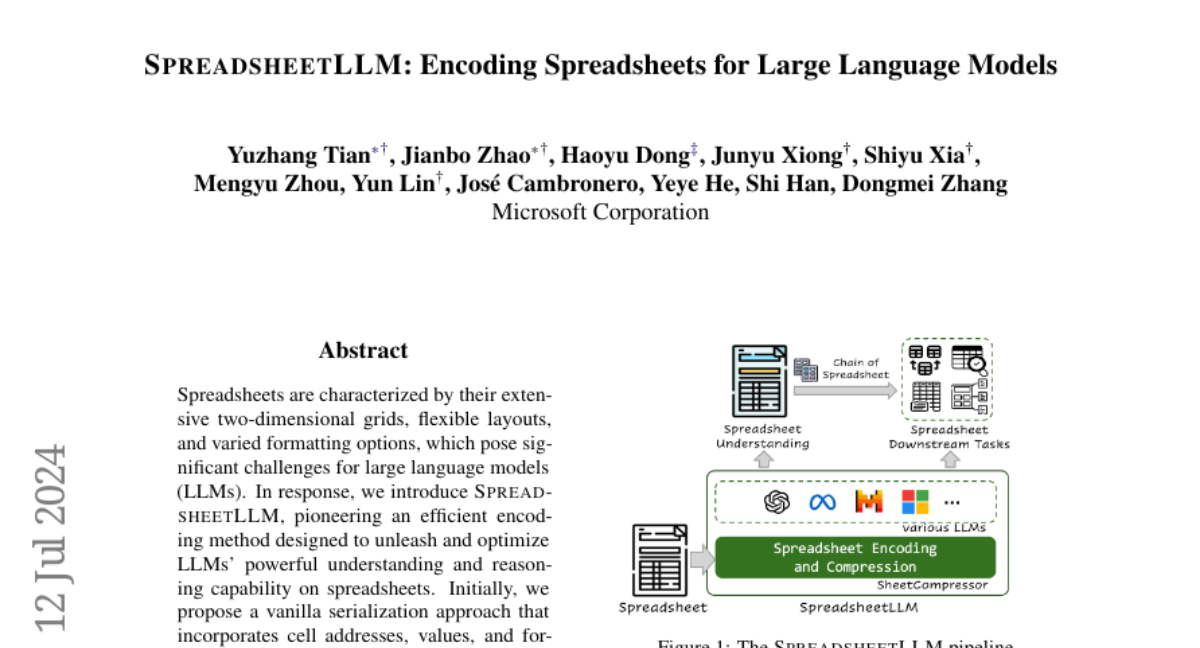

This paper introduces SpreadsheetLLM, an approach for encoding spreadsheets so that large language models (LLMs) can understand and reason over them more effectively.

What's the problem?

Spreadsheets are complex. They combine large two-dimensional grids, varied layouts, and diverse formatting options, which makes them hard for LLMs to analyze and interpret. A straightforward serialization that writes out every cell's address, value, and format quickly produces inputs that exceed LLMs' token limits, so models perform poorly at understanding the spreadsheet data.
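To make the token problem concrete, here is a minimal Python sketch of a naive cell-by-cell serialization in the spirit of the vanilla approach described in the abstract (cell address, value, and format for every cell). The `address,value,format` record layout, the `|` separator, and the helper names `column_letter` and `vanilla_serialize` are assumptions for illustration, not the paper's exact format.

```python
def column_letter(index):
    """Convert a 0-based column index to an Excel-style letter (0 -> 'A', 26 -> 'AA')."""
    letters = ""
    index += 1
    while index > 0:
        index, remainder = divmod(index - 1, 26)
        letters = chr(ord("A") + remainder) + letters
    return letters


def vanilla_serialize(grid, formats=None):
    """Encode every cell, empty or not, as an 'address,value,format' record (hypothetical layout)."""
    records = []
    for r, row in enumerate(grid):
        for c, value in enumerate(row):
            fmt = formats[r][c] if formats else ""
            records.append(f"{column_letter(c)}{r + 1},{value},{fmt}")
    return "|".join(records)


print(vanilla_serialize([["Year", "Sales"], [2020, 18]]))
# A1,Year,|B1,Sales,|A2,2020,|B2,18,

# A mostly empty 1,000-row by 20-column sheet still produces 20,000 records.
sheet = [[""] * 20 for _ in range(1000)]
sheet[0][0] = "Year"
print(len(vanilla_serialize(sheet).split("|")))  # 20000
```

Because every cell in the used range yields a record, the output grows with the area of the grid rather than with the amount of real content, which is why this style of encoding runs into token limits on realistic sheets.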

What's the solution?

To solve this problem, the researchers developed SheetCompressor, an encoding framework that compresses spreadsheet data for LLMs. It has three modules: structural-anchor-based compression, which keeps the rows and columns near likely table boundaries and drops distant, repetitive ones so that the layout is preserved; inverse index translation, which lists each distinct value once together with the cell addresses where it appears instead of encoding the grid cell by cell; and data-format-aware aggregation, which groups adjacent cells that share a number format or data type. Together, these modules let LLMs process spreadsheets with far fewer tokens. On spreadsheet table detection, SheetCompressor outperformed the vanilla serialization by 25.6% in GPT-4's in-context learning setting, and a model fine-tuned with it reached a 78.9% F1 score, 12.3% above the best existing models, while compressing inputs by about 25 times on average.
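The inverse index idea can be illustrated with a minimal Python sketch, assuming the sheet is given as a list of rows: empty cells are skipped, each distinct value is stored once as a key, and vertically consecutive addresses are collapsed into ranges such as `A2:A3`. The function names and the column-only range merging are hypothetical simplifications, not the paper's implementation.

```python
from collections import defaultdict


def column_letter(index):
    """Convert a 0-based column index to an Excel-style letter (0 -> 'A', 26 -> 'AA')."""
    letters = ""
    index += 1
    while index > 0:
        index, remainder = divmod(index - 1, 26)
        letters = chr(ord("A") + remainder) + letters
    return letters


def merge_vertical_runs(cells):
    """Turn (row, col) pairs into addresses, merging vertically consecutive cells into ranges."""
    rows_by_col = defaultdict(list)
    for r, c in cells:
        rows_by_col[c].append(r)
    addresses = []
    for c in sorted(rows_by_col):
        rows = sorted(rows_by_col[c])
        start = prev = rows[0]
        for r in rows[1:] + [None]:  # None is a sentinel that flushes the final run
            if r is not None and r == prev + 1:
                prev = r
                continue
            first = f"{column_letter(c)}{start + 1}"
            addresses.append(first if start == prev else f"{first}:{column_letter(c)}{prev + 1}")
            if r is not None:
                start = prev = r
    return addresses


def inverted_index(grid):
    """Map each distinct non-empty value to the addresses (or ranges) where it occurs."""
    positions = defaultdict(list)
    for r, row in enumerate(grid):
        for c, value in enumerate(row):
            if value is None or value == "":
                continue  # empty cells are omitted from the encoding entirely
            positions[str(value)].append((r, c))
    return {value: merge_vertical_runs(cells) for value, cells in positions.items()}


sheet = [
    ["Region", "Status"],
    ["North", "Open"],
    ["North", "Open"],
    ["", ""],
    ["South", "Open"],
]
print(inverted_index(sheet))
# {'Region': ['A1'], 'Status': ['B1'], 'North': ['A2:A3'], 'Open': ['B2:B3', 'B5'], 'South': ['A5']}
```

In this toy example the repeated values `North` and `Open` each appear once as keys, and the blank row contributes nothing, whereas a cell-by-cell encoding would spend a record on every one of the ten cells.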

Why does it matter?

This research is important because it enhances how AI systems can work with spreadsheets, which are widely used in business, finance, and data analysis. By improving the understanding of spreadsheets, SpreadsheetLLM can lead to better automated tools for data management and analysis, making it easier for users to interact with complex information.

Abstract

Spreadsheets, with their extensive two-dimensional grids, various layouts, and diverse formatting options, present notable challenges for large language models (LLMs). In response, we introduce SpreadsheetLLM, pioneering an efficient encoding method designed to unleash and optimize LLMs' powerful understanding and reasoning capability on spreadsheets. Initially, we propose a vanilla serialization approach that incorporates cell addresses, values, and formats. However, this approach is limited by LLMs' token constraints, making it impractical for most applications. To tackle this challenge, we develop SheetCompressor, an innovative encoding framework that compresses spreadsheets effectively for LLMs. It comprises three modules: structural-anchor-based compression, inverse index translation, and data-format-aware aggregation. It significantly improves performance on the spreadsheet table detection task, outperforming the vanilla approach by 25.6% in GPT-4's in-context learning setting. Moreover, an LLM fine-tuned with SheetCompressor achieves a state-of-the-art 78.9% F1 score, surpassing the best existing models by 12.3%, while compressing inputs by an average of 25 times. Finally, we propose Chain of Spreadsheet for downstream tasks of spreadsheet understanding and validate it on a new and demanding spreadsheet QA task. We methodically leverage the inherent layout and structure of spreadsheets, demonstrating that SpreadsheetLLM is highly effective across a variety of spreadsheet tasks.
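Structural-anchor-based compression can be sketched in a similar spirit. The version below is a hypothetical heuristic for illustration only: it treats a row as an anchor when its pattern of cell kinds (empty, number, text) differs from an adjacent row, then keeps only rows within `k` rows of some anchor. The paper's actual anchor detection is not reproduced here; the names `cell_kind`, `anchor_rows`, and `keep_near_anchors` and the default `k=1` are assumptions.

```python
def cell_kind(value):
    """Classify a cell as 'empty', 'number', or 'text' (a coarse, assumed typing)."""
    if value is None or value == "":
        return "empty"
    if isinstance(value, (int, float)):
        return "number"
    return "text"


def anchor_rows(grid):
    """Return indices of rows whose kind pattern differs from a neighboring row."""
    signatures = [tuple(cell_kind(v) for v in row) for row in grid]
    anchors = []
    for i, sig in enumerate(signatures):
        differs_above = i == 0 or signatures[i - 1] != sig
        differs_below = i == len(signatures) - 1 or signatures[i + 1] != sig
        if differs_above or differs_below:
            anchors.append(i)
    return anchors


def keep_near_anchors(grid, k=1):
    """Keep rows within k of any anchor; drop the middle of long homogeneous runs."""
    keep = sorted({
        j
        for a in anchor_rows(grid)
        for j in range(a - k, a + k + 1)
        if 0 <= j < len(grid)
    })
    return keep, [grid[j] for j in keep]


sheet = [
    ["Year", "Sales"],
    [2015, 10],
    [2016, 12],
    [2017, 15],
    [2018, 11],
    [2019, 14],
    [2020, 18],
    ["Total", 80],
]
kept, compressed = keep_near_anchors(sheet)
print(anchor_rows(sheet))  # [0, 1, 6, 7]
print(kept)                # [0, 1, 2, 5, 6, 7]
```

Here the rows for 2017 and 2018 are dropped because they sit inside a homogeneous numeric run, while the header, the first and last data rows, and the total row survive. Columns could be treated the same way by transposing the grid, for example with `list(zip(*grid))`.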