Stronger Models are NOT Stronger Teachers for Instruction Tuning

Zhangchen Xu, Fengqing Jiang, Luyao Niu, Bill Yuchen Lin, Radha Poovendran

2024-11-13

Summary

This paper talks about the idea that larger language models (LLMs) are often assumed to be better at teaching smaller models how to follow instructions. However, the authors argue that this isn't always true and present a new way to measure how well these models teach each other.

What's the problem?

The main problem is that many researchers believe larger language models (LLMs) are better teachers for smaller models when it comes to instruction tuning. This assumption can lead to ineffective training because it overlooks how well the teacher model works with the student model being trained.

What's the solution?

To address this issue, the authors conducted experiments with five different base models and twenty response generators to test the effectiveness of larger models as teachers. They found that larger models do not necessarily improve the performance of smaller models. To tackle this, they developed a new metric called Compatibility-Adjusted Reward (CAR) to measure how effective a teacher model is for training a student model. Their experiments showed that CAR provided more accurate results than previous methods.

Why it matters?

This research is significant because it challenges a common belief in AI development that bigger is always better. By understanding the limitations of larger models as teachers, researchers can improve instruction tuning methods, leading to better-performing AI systems that are more aligned with user needs.

Abstract

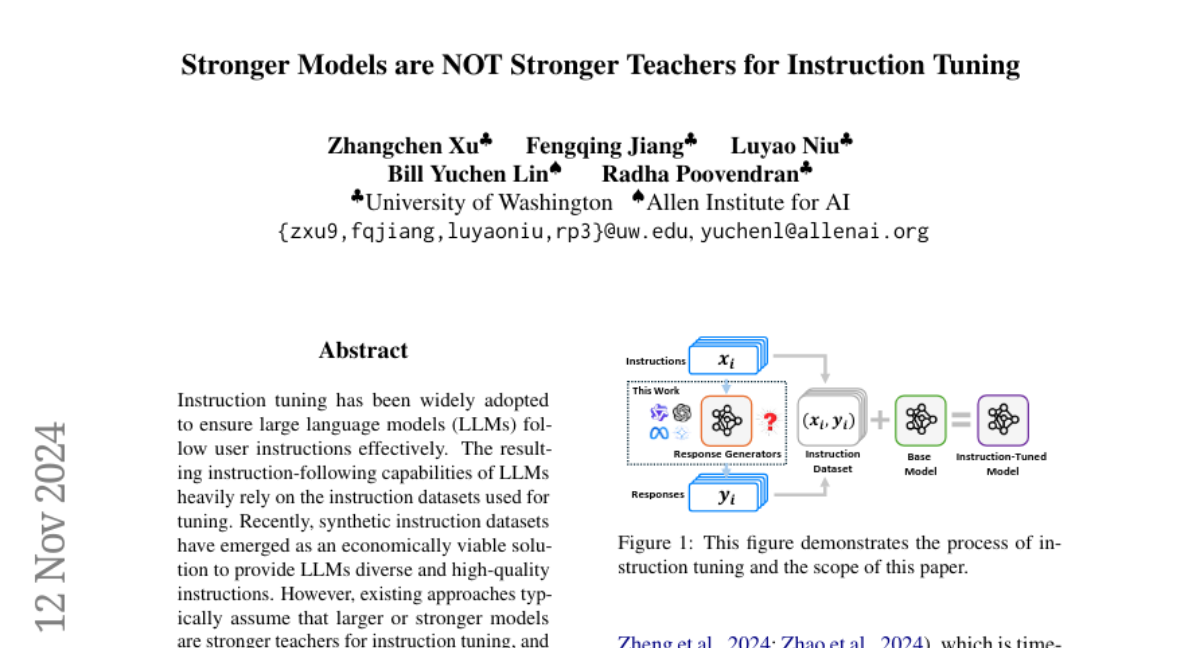

Instruction tuning has been widely adopted to ensure large language models (LLMs) follow user instructions effectively. The resulting instruction-following capabilities of LLMs heavily rely on the instruction datasets used for tuning. Recently, synthetic instruction datasets have emerged as an economically viable solution to provide LLMs diverse and high-quality instructions. However, existing approaches typically assume that larger or stronger models are stronger teachers for instruction tuning, and hence simply adopt these models as response generators to the synthetic instructions. In this paper, we challenge this commonly-adopted assumption. Our extensive experiments across five base models and twenty response generators reveal that larger and stronger models are not necessarily stronger teachers of smaller models. We refer to this phenomenon as the Larger Models' Paradox. We observe that existing metrics cannot precisely predict the effectiveness of response generators since they ignore the compatibility between teachers and base models being fine-tuned. We thus develop a novel metric, named as Compatibility-Adjusted Reward (CAR) to measure the effectiveness of response generators. Our experiments across five base models demonstrate that CAR outperforms almost all baselines.