StyleRemix: Interpretable Authorship Obfuscation via Distillation and Perturbation of Style Elements

Jillian Fisher, Skyler Hallinan, Ximing Lu, Mitchell Gordon, Zaid Harchaoui, Yejin Choi

2024-08-30

Summary

This paper introduces StyleRemix, a method for changing the style of written text to hide the original author's identity while keeping the text understandable.

What's the problem?

Authorship obfuscation, or rewriting text so that it is hard to tell who wrote it, is important but challenging. Current methods often rely on large language models that offer little interpretability or control over how the text is changed. They also tend to ignore the stylistic features specific to each author, which makes the obfuscation less robust.

What's the solution?

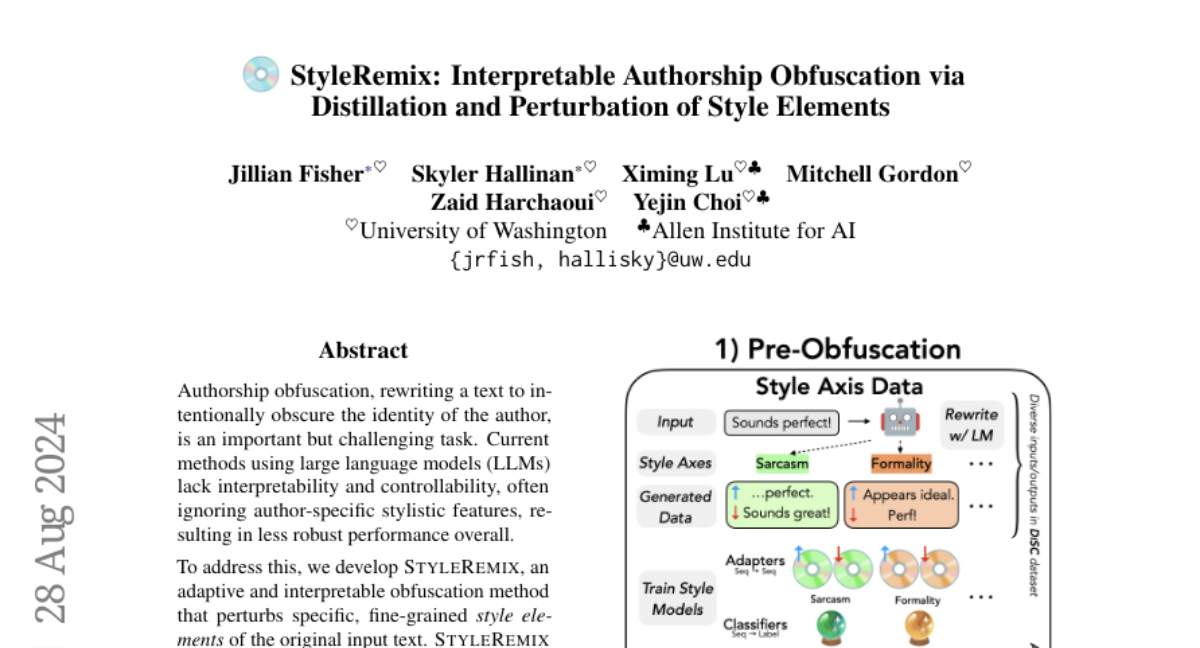

StyleRemix addresses this by perturbing specific, fine-grained style elements of the original text, such as formality and length. It uses pre-trained Low-Rank Adaptation (LoRA) modules to rewrite the text along these stylistic axes while keeping computational cost low. In both automatic and human evaluation across a variety of domains, it outperforms state-of-the-art baselines and much larger LLMs. Because each change is tied to a named style axis, the rewriting is easier to control and interpret.
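To make the LoRA idea concrete, here is a minimal sketch of how low-rank adapters for separate style axes could be added to a frozen weight matrix. It is an illustration only, not the StyleRemix implementation: the weighted-sum combination, the function names (`lora_delta`, `apply_adapters`), the toy matrices, and the axis weights are all assumptions made for this example.

```python
# Hypothetical sketch of combining per-axis LoRA adapters; not the authors' code.

def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def lora_delta(B, A, scale=1.0):
    """Low-rank update scale * (B @ A), with B of shape d_out x r and A of shape r x d_in."""
    return [[scale * v for v in row] for row in matmul(B, A)]

def apply_adapters(W, adapters, weights):
    """Return a copy of W plus the weighted low-rank update of each chosen style axis."""
    out = [row[:] for row in W]  # the frozen base weight is left untouched
    for axis, weight in weights.items():
        B, A = adapters[axis]
        delta = lora_delta(B, A, weight)
        for i, row in enumerate(delta):
            for j, v in enumerate(row):
                out[i][j] += v
    return out

# Toy 2x2 frozen weight and two rank-1 adapters (values are made up).
W = [[1.0, 0.0], [0.0, 1.0]]
adapters = {
    "formality": ([[1.0], [0.0]], [[0.0, 2.0]]),  # (B: 2x1, A: 1x2)
    "length": ([[0.0], [1.0]], [[3.0, 0.0]]),
}
# Assumed per-axis strengths; how StyleRemix picks them is not shown here.
weights = {"formality": 0.5, "length": 1.0}

W_remixed = apply_adapters(W, adapters, weights)
print(W_remixed)  # [[1.0, 1.0], [3.0, 1.0]]
```

Each adapter stores only the two small factors `B` and `A` rather than a full weight matrix, which is why this kind of update stays cheap. Since every adapter belongs to one named axis, the weights also show directly which style elements were changed and by how much.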

Why does it matter?

This research matters because it enhances the ability to protect authors' identities in various contexts, such as academic writing or online content creation. By improving how we can change writing styles, StyleRemix can help ensure that original ideas are shared without revealing who created them.

Abstract

Authorship obfuscation, rewriting a text to intentionally obscure the identity of the author, is an important but challenging task. Current methods using large language models (LLMs) lack interpretability and controllability, often ignoring author-specific stylistic features, resulting in less robust performance overall. To address this, we develop StyleRemix, an adaptive and interpretable obfuscation method that perturbs specific, fine-grained style elements of the original input text. StyleRemix uses pre-trained Low Rank Adaptation (LoRA) modules to rewrite an input specifically along various stylistic axes (e.g., formality and length) while maintaining low computational cost. StyleRemix outperforms state-of-the-art baselines and much larger LLMs in a variety of domains as assessed by both automatic and human evaluation. Additionally, we release AuthorMix, a large set of 30K high-quality, long-form texts from a diverse set of 14 authors and 4 domains, and DiSC, a parallel corpus of 1,500 texts spanning seven style axes in 16 unique directions.