SUPER: Evaluating Agents on Setting Up and Executing Tasks from Research Repositories

Ben Bogin, Kejuan Yang, Shashank Gupta, Kyle Richardson, Erin Bransom, Peter Clark, Ashish Sabharwal, Tushar Khot

2024-09-12

Summary

This paper introduces SUPER, a new benchmark for evaluating how well large language models (LLMs) can set up and execute tasks from research repositories.

What's the problem?

Although LLMs have improved at writing code, they still struggle to reproduce results from research repositories. This matters because automatically validating and extending previous research would greatly help scientists and researchers in their work.

What's the solution?

To address this issue, the authors developed SUPER, which includes three sets of problems: 45 end-to-end tasks with expert solutions, 152 sub-problems focusing on particular challenges (for example, configuring a trainer), and 602 automatically generated problems for larger-scale development. They also created evaluation measures that assess both task success and partial progress. Their findings show that even the best model, GPT-4o, solved only 16.3% of the end-to-end set and 46.1% of the scenarios, highlighting the challenges involved.

Why does it matter?

This research is important because it provides a structured way to test and improve LLMs in real-world scenarios. By identifying the limitations of current models, SUPER can help guide future developments in AI that could make research more efficient and accessible.

Abstract

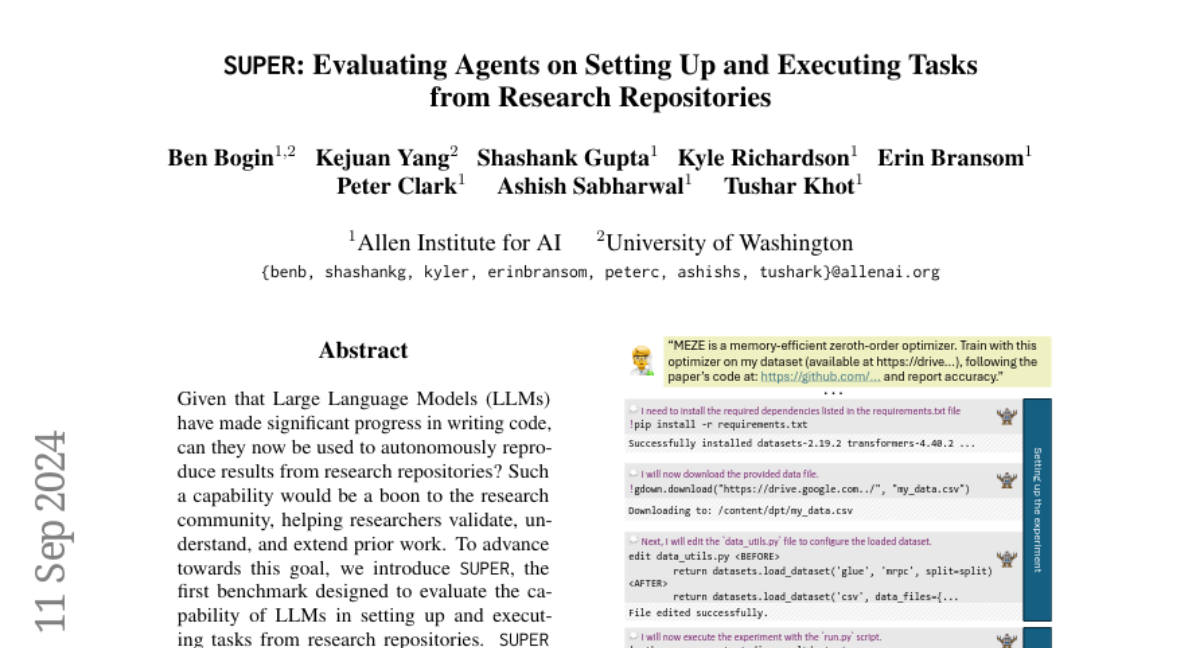

Given that Large Language Models (LLMs) have made significant progress in writing code, can they now be used to autonomously reproduce results from research repositories? Such a capability would be a boon to the research community, helping researchers validate, understand, and extend prior work. To advance towards this goal, we introduce SUPER, the first benchmark designed to evaluate the capability of LLMs in setting up and executing tasks from research repositories. SUPER aims to capture the realistic challenges faced by researchers working with Machine Learning (ML) and Natural Language Processing (NLP) research repositories. Our benchmark comprises three distinct problem sets: 45 end-to-end problems with annotated expert solutions, 152 sub-problems derived from the expert set that focus on specific challenges (e.g., configuring a trainer), and 602 automatically generated problems for larger-scale development. We introduce various evaluation measures to assess both task success and progress, utilizing gold solutions when available or approximations otherwise. We show that state-of-the-art approaches struggle to solve these problems, with the best model (GPT-4o) solving only 16.3% of the end-to-end set and 46.1% of the scenarios. This illustrates the challenge of this task and suggests that SUPER can serve as a valuable resource for the community to make and measure progress.
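To make the idea of scoring against gold solutions when available, or an approximation otherwise, more concrete, here is a minimal Python sketch. It is not the benchmark's actual evaluation code: the function name `score_submission`, the dictionary keys, the 1% relative tolerance, and the "ran without error" fallback are all assumptions chosen for illustration. Only the three problem-set sizes come from the abstract.

```python
from math import isclose
from typing import Mapping, Optional

# Problem-set sizes as stated in the abstract (key names are illustrative).
PROBLEM_SETS = {"end_to_end": 45, "sub_problems": 152, "auto_generated": 602}


def score_submission(
    reported: Mapping[str, float],
    gold: Optional[Mapping[str, float]] = None,
    ran_without_error: bool = False,
    rel_tol: float = 1e-2,  # assumed tolerance, not taken from the paper
) -> float:
    """Return 1.0 for success and 0.0 otherwise.

    With a gold solution, every gold metric must be reported and must
    match within `rel_tol`. Without one, fall back to a hypothetical
    proxy: whether the agent's script ran without raising an error.
    """
    if gold is not None:
        matches = all(
            name in reported and isclose(reported[name], value, rel_tol=rel_tol)
            for name, value in gold.items()
        )
        return 1.0 if matches else 0.0
    return 1.0 if ran_without_error else 0.0
```

A call such as `score_submission({"accuracy": 0.801}, gold={"accuracy": 0.8})` counts as a success under the assumed tolerance, while a problem with no gold solution is judged only by the proxy flag.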