Synthesizing Text-to-SQL Data from Weak and Strong LLMs

Jiaxi Yang, Binyuan Hui, Min Yang, Jian Yang, Junyang Lin, Chang Zhou

2024-08-07

Summary

This paper discusses a new method for creating synthetic data to improve text-to-SQL tasks, which involve converting natural language questions into SQL queries, by combining outputs from both strong and weak large language models (LLMs).

What's the problem?

There is a performance gap between powerful closed-source LLMs and open-source models when it comes to converting natural language into SQL queries. This makes it challenging for developers using open-source tools to achieve the same level of accuracy and effectiveness as those using more advanced, proprietary models.

What's the solution?

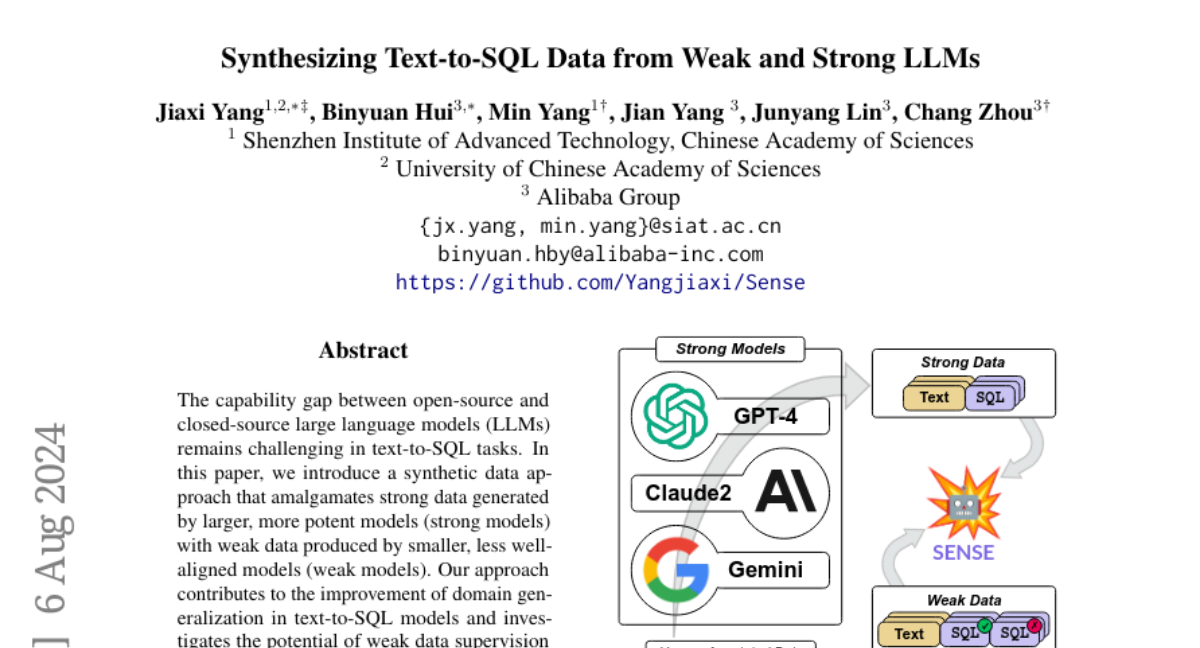

The authors propose a synthetic data approach that merges high-quality data generated by strong models with error information from weaker models. This combination helps enhance the overall performance of text-to-SQL systems. They also create a specialized model called SENSE, which is fine-tuned using this synthetic data. The effectiveness of SENSE is tested on popular benchmarks like SPIDER and BIRD, showing significant improvements in performance.

Why it matters?

This research is important because it helps bridge the gap between different types of language models, making it easier for developers to use open-source tools effectively. By improving the accuracy of text-to-SQL tasks, this work can democratize access to database querying, allowing more people to interact with data without needing extensive technical knowledge.

Abstract

The capability gap between open-source and closed-source large language models (LLMs) remains a challenge in text-to-SQL tasks. In this paper, we introduce a synthetic data approach that combines data produced by larger, more powerful models (strong models) with error information data generated by smaller, not well-aligned models (weak models). The method not only enhances the domain generalization of text-to-SQL models but also explores the potential of error data supervision through preference learning. Furthermore, we employ the synthetic data approach for instruction tuning on open-source LLMs, resulting SENSE, a specialized text-to-SQL model. The effectiveness of SENSE is demonstrated through state-of-the-art results on the SPIDER and BIRD benchmarks, bridging the performance gap between open-source models and methods prompted by closed-source models.