TableBench: A Comprehensive and Complex Benchmark for Table Question Answering

Xianjie Wu, Jian Yang, Linzheng Chai, Ge Zhang, Jiaheng Liu, Xinrun Du, Di Liang, Daixin Shu, Xianfu Cheng, Tianzhen Sun, Guanglin Niu, Tongliang Li, Zhoujun Li

2024-08-21

Summary

This paper presents TableBench, a new benchmark designed to evaluate how well language models can answer questions based on table data.

What's the problem?

While language models have improved in handling text and data, they still struggle with complex reasoning needed for real-world tables. Most existing tests do not reflect the challenges faced in practical applications, making it hard to know how well these models would perform in real situations.

What's the solution?

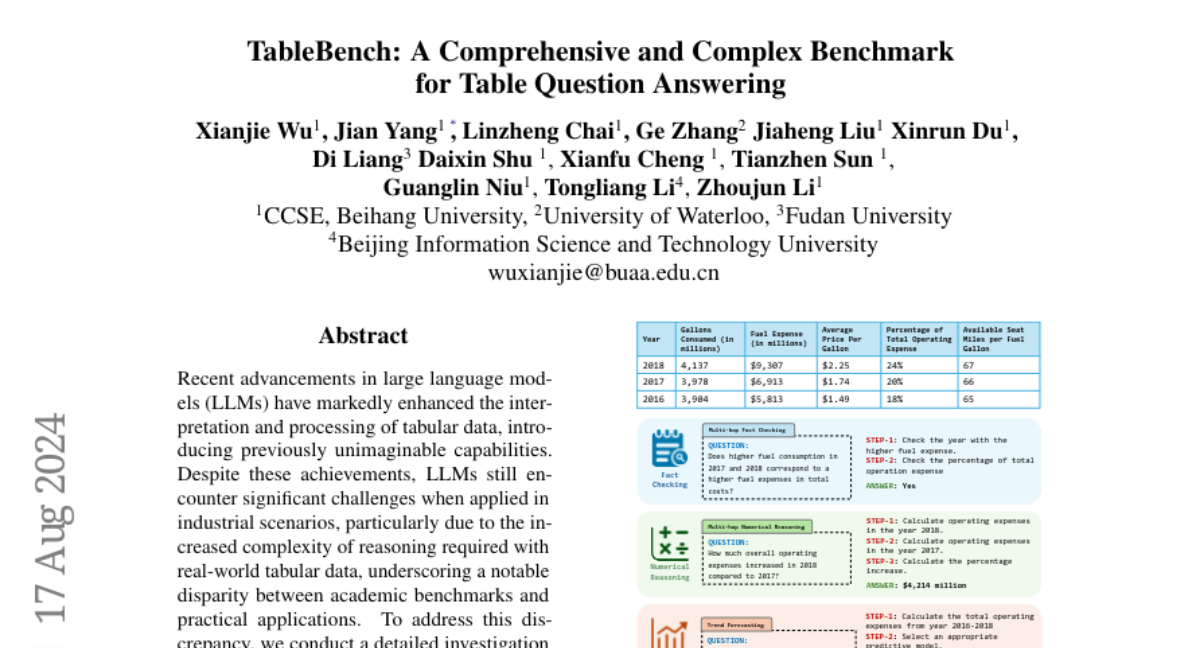

To tackle this issue, the authors created TableBench, which includes 18 different tasks across four main categories related to table question answering. They also developed a new model called TableLLM, trained on a specially designed dataset called TableInstruct. This setup allows for better evaluation of how language models can handle various table-related questions.

Why it matters?

This research is important because it helps improve the understanding of how language models can be used in real-world scenarios involving tabular data. By providing a more accurate way to assess their capabilities, TableBench can lead to advancements in AI applications that rely on data from tables, such as finance, research, and data analysis.

Abstract

Recent advancements in Large Language Models (LLMs) have markedly enhanced the interpretation and processing of tabular data, introducing previously unimaginable capabilities. Despite these achievements, LLMs still encounter significant challenges when applied in industrial scenarios, particularly due to the increased complexity of reasoning required with real-world tabular data, underscoring a notable disparity between academic benchmarks and practical applications. To address this discrepancy, we conduct a detailed investigation into the application of tabular data in industrial scenarios and propose a comprehensive and complex benchmark TableBench, including 18 fields within four major categories of table question answering (TableQA) capabilities. Furthermore, we introduce TableLLM, trained on our meticulously constructed training set TableInstruct, achieving comparable performance with GPT-3.5. Massive experiments conducted on TableBench indicate that both open-source and proprietary LLMs still have significant room for improvement to meet real-world demands, where the most advanced model, GPT-4, achieves only a modest score compared to humans.