Tailor3D: Customized 3D Assets Editing and Generation with Dual-Side Images

Zhangyang Qi, Yunhan Yang, Mengchen Zhang, Long Xing, Xiaoyang Wu, Tong Wu, Dahua Lin, Xihui Liu, Jiaqi Wang, Hengshuang Zhao

2024-07-09

Summary

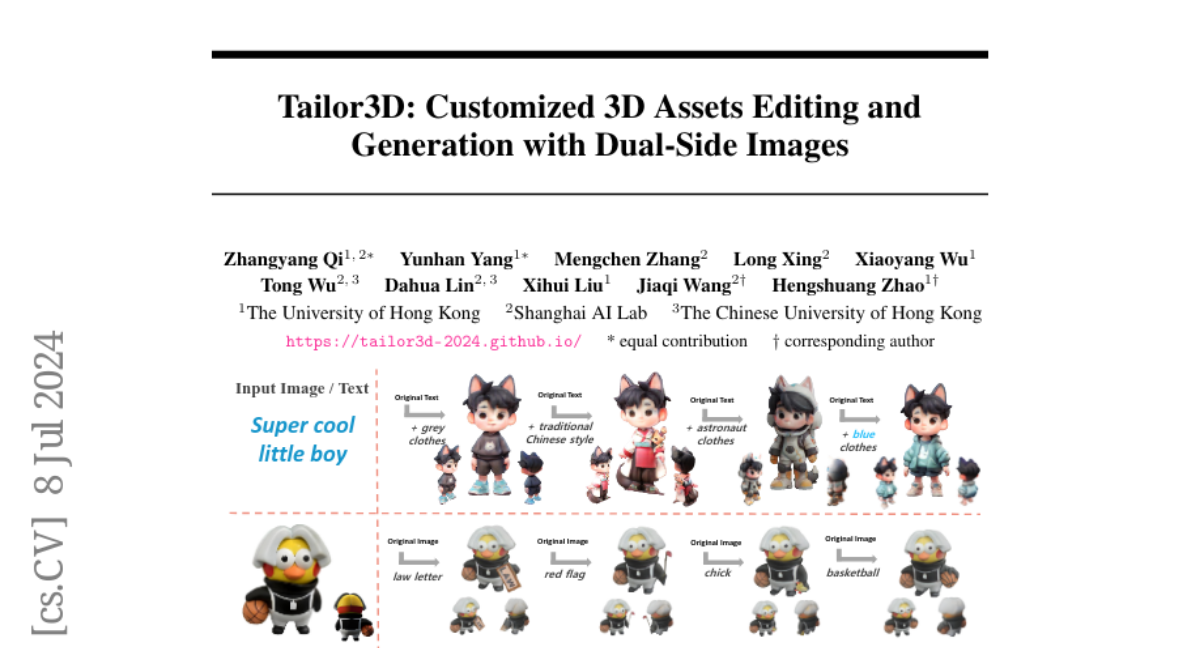

This paper presents Tailor3D, a method for creating and editing 3D objects from editable dual-side (front and back) images. Its goal is to make customizing 3D assets faster and more user-friendly.

What's the problem?

The main problem is that although recent methods can generate 3D objects directly from text and images, customizing those objects in detail is still very difficult. 3D generation methods cannot follow finely detailed instructions as precisely as 2D image generation and editing tools can. For example, if a 3D generator gives you a toy with unwanted accessories or clothing, current techniques offer no easy way to remove or change them.

What's the solution?

To address this, the authors developed Tailor3D, which builds customized 3D assets from editable dual-side images, that is, images showing the front and the back of an object. Users first edit the front view; the system then generates the back view with multi-view diffusion, and users can edit that back view as well. Working with two opposite views, rather than many overlapping ones, avoids the conflicts that arise in overlapping areas when individual views are edited separately. Finally, a model called the Dual-sided LRM (Large Reconstruction Model) stitches the front and back into a single 3D representation, correcting imperfect consistency between the two views. Each editing step takes only seconds, and the design reduces memory requirements.
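One geometric detail that any front/back stitching has to handle is that the back image is seen from the opposite side, so its features must be mirrored into the front view's frame before the two can be combined. The following is a minimal sketch of that step, assuming an LRM-style triplane representation (three axis-aligned feature planes) and a back view that is the front view rotated 180 degrees about the vertical y axis. The plane layout, the function names `align_back_to_front` and `stitch`, and the averaging fusion are hypothetical; Tailor3D fuses the two sides with a learned LoRA Triplane Transformer, not an average.

```python
import numpy as np

# Hypothetical layout (an assumption, not Tailor3D's code): each triplane is a
# dict of three feature planes of shape (C, N, N), sampled on a grid symmetric
# about the origin, so negating a coordinate is the same as reversing its index.
#   "xy": axes (C, x, y)   "xz": axes (C, x, z)   "yz": axes (C, y, z)


def align_back_to_front(back):
    """Map back-view triplane features into the front-view frame.

    Assumes the back view is the front view rotated 180 degrees about the
    vertical y axis, i.e. (x, y, z) -> (-x, y, -z). That rotation is its own
    inverse, so the same flips map front features into the back frame too.
    """
    return {
        "xy": np.flip(back["xy"], axis=1),       # x negated, y unchanged
        "xz": np.flip(back["xz"], axis=(1, 2)),  # x and z both negated
        "yz": np.flip(back["yz"], axis=2),       # z negated, y unchanged
    }


def stitch(front, back):
    """Fuse front features with aligned back features.

    A plain element-wise average stands in for the learned fusion
    (Tailor3D uses a LoRA Triplane Transformer at this point).
    """
    aligned = align_back_to_front(back)
    return {name: 0.5 * (front[name] + aligned[name]) for name in front}
```

Because each flip is its own inverse, a back triplane that is exactly the rotated copy of the front one stitches back to the front features unchanged. Real front and back predictions disagree, and resolving that disagreement is the job the learned fusion takes over from the simple average.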

Why it matters?

This research matters because it makes 3D editing more accessible and efficient for designers and creators. With each editing step taking only seconds, Tailor3D supports quick, precise changes to 3D objects, which could benefit fields such as animation, gaming, and product design that depend on high-quality custom 3D assets.

Abstract

Recent advances in 3D AIGC have shown promise in directly creating 3D objects from text and images, offering significant cost savings in animation and product design. However, detailed editing and customization of 3D assets remains a long-standing challenge. Specifically, 3D generation methods lack the ability to follow finely detailed instructions as precisely as their 2D image creation counterparts. Imagine getting a toy through 3D AIGC, but with undesired accessories and clothing. To tackle this challenge, we propose a novel pipeline called Tailor3D, which swiftly creates customized 3D assets from editable dual-side images. We aim to emulate a tailor's ability to locally change objects or perform overall style transfer. Unlike creating 3D assets from multiple views, using dual-side images eliminates conflicts on overlapping areas that occur when editing individual views. Specifically, it begins by editing the front view, then generates the back view of the object through multi-view diffusion. Afterward, it proceeds to edit the back view. Finally, a Dual-sided LRM is proposed to seamlessly stitch together the front and back 3D features, akin to a tailor sewing together the front and back of a garment. The Dual-sided LRM rectifies imperfect consistency between the front and back views, enhancing editing capabilities and reducing memory burdens while seamlessly integrating them into a unified 3D representation with the LoRA Triplane Transformer. Experimental results demonstrate Tailor3D's effectiveness across various 3D generation and editing tasks, including 3D generative fill and style transfer. It provides a user-friendly, efficient solution for editing 3D assets, with each editing step taking only seconds to complete.
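The abstract names a "LoRA Triplane Transformer" as the component that merges the two sides into one 3D representation. LoRA (low-rank adaptation) is a standard fine-tuning technique: a pretrained weight matrix W stays frozen and only a low-rank correction BA is trained, so far fewer parameters are updated than in full fine-tuning. The NumPy sketch below shows a single LoRA-adapted linear layer under illustrative assumptions: the class name `LoRALinear`, the rank, the `alpha / rank` scaling, and the initialization are hypothetical choices, and the sketch does not show which layers of Tailor3D's transformer are adapted.

```python
import numpy as np


class LoRALinear:
    """A frozen linear layer plus a trainable low-rank update (LoRA).

    Computes y = x W^T + (alpha / rank) * (x A^T) B^T, where W (d_out, d_in)
    is frozen, A is (rank, d_in) and B is (d_out, rank). B starts at zero, so
    the adapted layer initially reproduces the frozen layer exactly.
    Hypothetical illustration, not Tailor3D's implementation.
    """

    def __init__(self, weight, rank=4, alpha=8.0, seed=0):
        rng = np.random.default_rng(seed)
        d_out, d_in = weight.shape
        self.weight = weight                               # frozen pretrained weight
        self.A = rng.standard_normal((rank, d_in)) * 0.01  # trainable down-projection
        self.B = np.zeros((d_out, rank))                   # trainable up-projection, zero-init
        self.scale = alpha / rank

    def __call__(self, x):
        # x: (..., d_in) -> (..., d_out); frozen path plus scaled low-rank path
        return x @ self.weight.T + self.scale * (x @ self.A.T) @ self.B.T

    def trainable_params(self):
        # Only A and B would be updated during fine-tuning.
        return self.A.size + self.B.size
```

For a 64 x 64 weight with rank 4, only 512 parameters (256 in A, 256 in B) are trainable against 4,096 frozen ones. Zero-initializing B means fine-tuning starts from the pretrained layer's exact behavior and departs from it only as B is learned.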