TaoAvatar: Real-Time Lifelike Full-Body Talking Avatars for Augmented Reality via 3D Gaussian Splatting

Jianchuan Chen, Jingchuan Hu, Gaige Wang, Zhonghua Jiang, Tiansong Zhou, Zhiwen Chen, Chengfei Lv

2025-03-24

Summary

This paper presents TaoAvatar, a method for creating realistic, full-body 3D talking avatars that run in real time in augmented reality (AR) on devices such as the Apple Vision Pro.

What's the problem?

Existing avatar methods, including recent ones based on 3D Gaussian Splatting, have at least one of three problems: they lack fine appearance detail, they cannot precisely control facial expressions and body movements, or they are too slow to run in real time on mobile devices.

What's the solution?

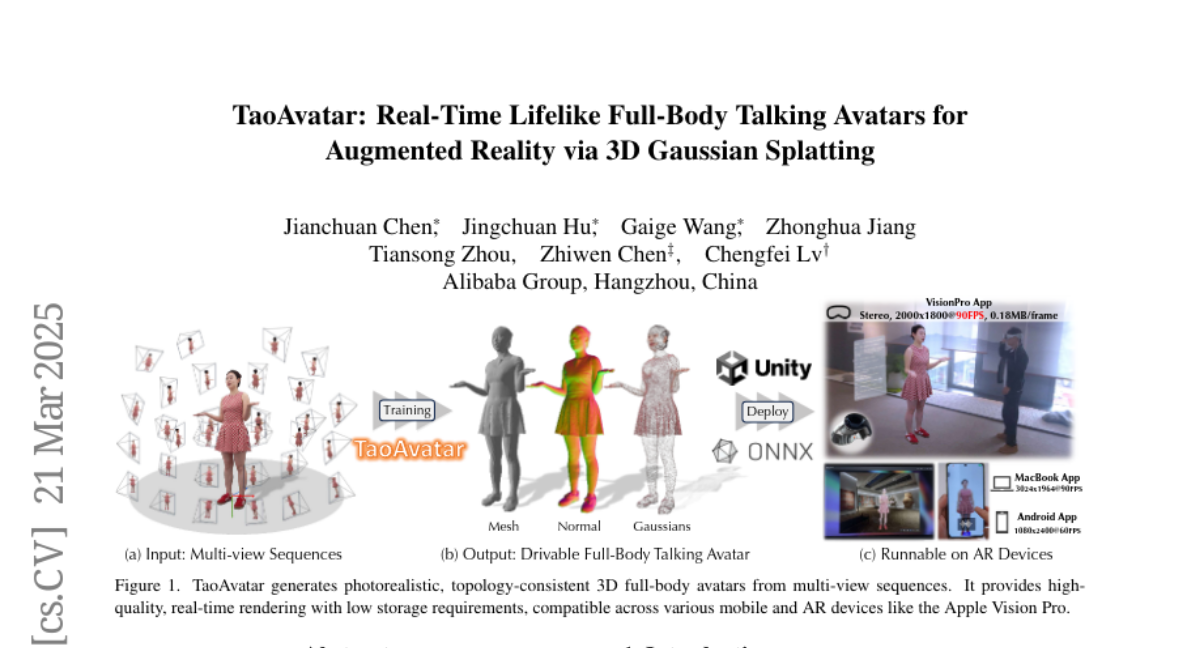

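The researchers propose TaoAvatar, which produces high-quality, detailed avatars that still run smoothly on mobile devices. They start from a personalized clothed human parametric template (a 3D body model fitted to the subject and including their clothing) and bind 3D Gaussians to it to represent appearance. A large StyleUnet-based network then learns how the clothing and body deform with pose, and this knowledge is distilled ("baked") into a lightweight MLP, with blend shapes added to recover fine details. The resulting avatar can be driven by various input signals to move and talk realistically.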
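The abstract says only that Gaussians are "bound" to the clothed template; it does not say how. A common way to do this in mesh-based Gaussian avatars, assumed here rather than taken from the paper, is to attach each Gaussian to one triangle by barycentric weights plus an offset along the triangle normal. The names `BoundGaussian` and `gaussian_center` are hypothetical, and real systems also carry rotation and scale, which this minimal sketch omits.

```python
from dataclasses import dataclass
import math

Vec3 = tuple[float, float, float]


def add(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] + b[0], a[1] + b[1], a[2] + b[2])


def sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def scale(a: Vec3, s: float) -> Vec3:
    return (a[0] * s, a[1] * s, a[2] * s)


def cross(a: Vec3, b: Vec3) -> Vec3:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def normalize(a: Vec3) -> Vec3:
    length = math.sqrt(a[0] ** 2 + a[1] ** 2 + a[2] ** 2)
    return scale(a, 1.0 / length)


@dataclass
class BoundGaussian:
    """Hypothetical binding of one Gaussian to one template triangle."""
    tri: int       # index into the triangle list
    bary: Vec3     # barycentric weights (u, v, w), summing to 1
    offset: float  # signed distance along the triangle's unit normal


def gaussian_center(g: BoundGaussian, vertices, triangles) -> Vec3:
    """Recompute a Gaussian's center from the current (posed) template mesh."""
    i, j, k = triangles[g.tri]
    a, b, c = vertices[i], vertices[j], vertices[k]
    u, v, w = g.bary
    # Point on the triangle surface given by the barycentric weights.
    on_surface = add(add(scale(a, u), scale(b, v)), scale(c, w))
    # Push it off the surface along the triangle's unit normal.
    normal = normalize(cross(sub(b, a), sub(c, a)))
    return add(on_surface, scale(normal, g.offset))
```

For a triangle with vertices (0, 0, 0), (1, 0, 0), (0, 1, 0), weights (1/3, 1/3, 1/3) and offset 0.1 place the center at roughly (0.333, 0.333, 0.1). Because each Gaussian stores only a triangle index, weights, and an offset, re-posing the template mesh automatically moves every bound Gaussian with it; shifting all three vertices up by 2 moves the center to roughly (0.333, 0.333, 2.1).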

Why does it matter?

This work matters because it could lead to more realistic and engaging AR experiences, such as live shopping, holographic communication, and virtual meetings.

Abstract

Realistic 3D full-body talking avatars hold great potential in AR, with applications ranging from e-commerce live streaming to holographic communication. Despite advances in 3D Gaussian Splatting (3DGS) for lifelike avatar creation, existing methods struggle with fine-grained control of facial expressions and body movements in full-body talking tasks. Additionally, they often lack sufficient details and cannot run in real-time on mobile devices. We present TaoAvatar, a high-fidelity, lightweight, 3DGS-based full-body talking avatar driven by various signals. Our approach starts by creating a personalized clothed human parametric template that binds Gaussians to represent appearances. We then pre-train a StyleUnet-based network to handle complex pose-dependent non-rigid deformation, which can capture high-frequency appearance details but is too resource-intensive for mobile devices. To overcome this, we "bake" the non-rigid deformations into a lightweight MLP-based network using a distillation technique and develop blend shapes to compensate for details. Extensive experiments show that TaoAvatar achieves state-of-the-art rendering quality while running in real-time across various devices, maintaining 90 FPS on high-definition stereo devices such as the Apple Vision Pro.
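The key efficiency step in the abstract is distillation: a large StyleUnet-based teacher captures pose-dependent non-rigid deformation, and its behavior is "baked" into a lightweight MLP student, with blend shapes compensating for lost detail. The sketch below illustrates only the generic teacher-student pattern under heavy simplifying assumptions: the pose is a single scalar, the deformation is a single scalar offset, `teacher_offset` is a hypothetical closed-form stand-in for the StyleUnet, and `TinyMLP`, `distill`, and `apply_blend_shapes` are illustrative names, not the paper's code. The abstract does not say how the MLP output and the blend shapes are combined; an additive correction is assumed here.

```python
import math
import random


def teacher_offset(pose: float) -> float:
    # Hypothetical stand-in for the heavy StyleUnet teacher: maps a scalar
    # pose parameter to a non-rigid offset. Not the paper's network.
    return 0.5 * math.sin(2.0 * pose)


class TinyMLP:
    """Lightweight student: one hidden tanh layer, scalar in, scalar out."""

    def __init__(self, hidden: int = 8, seed: int = 0):
        rng = random.Random(seed)
        self.w1 = [rng.uniform(-0.5, 0.5) for _ in range(hidden)]
        self.b1 = [0.0] * hidden
        self.w2 = [rng.uniform(-0.5, 0.5) for _ in range(hidden)]
        self.b2 = 0.0

    def forward(self, x: float):
        h = [math.tanh(w * x + b) for w, b in zip(self.w1, self.b1)]
        y = sum(v * hj for v, hj in zip(self.w2, h)) + self.b2
        return y, h


def mse(student, poses, targets):
    return sum((student.forward(p)[0] - t) ** 2
               for p, t in zip(poses, targets)) / len(poses)


def distill(student, poses, lr=0.05, steps=2000):
    """Fit the student to the teacher's outputs by full-batch gradient descent on MSE."""
    targets = [teacher_offset(p) for p in poses]  # teacher queried once, offline
    n, hidden = len(poses), len(student.w1)
    initial = mse(student, poses, targets)
    for _ in range(steps):
        g_w1, g_b1, g_w2, g_b2 = [0.0] * hidden, [0.0] * hidden, [0.0] * hidden, 0.0
        for p, t in zip(poses, targets):
            y, h = student.forward(p)
            d_y = 2.0 * (y - t) / n  # dMSE/dy for this sample
            g_b2 += d_y
            for j in range(hidden):
                g_w2[j] += d_y * h[j]
                d_pre = d_y * student.w2[j] * (1.0 - h[j] ** 2)  # back through tanh
                g_w1[j] += d_pre * p
                g_b1[j] += d_pre
        # Apply all updates after gradients are accumulated with the old weights.
        for j in range(hidden):
            student.w1[j] -= lr * g_w1[j]
            student.b1[j] -= lr * g_b1[j]
            student.w2[j] -= lr * g_w2[j]
        student.b2 -= lr * g_b2
    return initial, mse(student, poses, targets)


def apply_blend_shapes(base, shapes, weights):
    """Return base + sum_k weights[k] * shapes[k], coordinate by coordinate."""
    out = list(base)
    for shape, w in zip(shapes, weights):
        for i, d in enumerate(shape):
            out[i] += w * d
    return out
```

With 21 evenly spaced poses in [-1, 1], `distill(TinyMLP(), poses)` returns the student's MSE against the teacher before and after training, and the second value comes out noticeably smaller. The design point is that the expensive teacher is evaluated only offline to produce training targets; at runtime only the small MLP and a cheap linear blend-shape sum are evaluated, which is what makes high frame rates on mobile hardware plausible.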