Teaching Models to Balance Resisting and Accepting Persuasion

Elias Stengel-Eskin, Peter Hase, Mohit Bansal

2024-10-21

Summary

This paper introduces Persuasion-Balanced Training (PBT), a training method that teaches language models both to resist negative persuasion and to accept positive persuasion. Models trained this way perform best on data that mixes the two kinds of persuasion.

What's the problem?

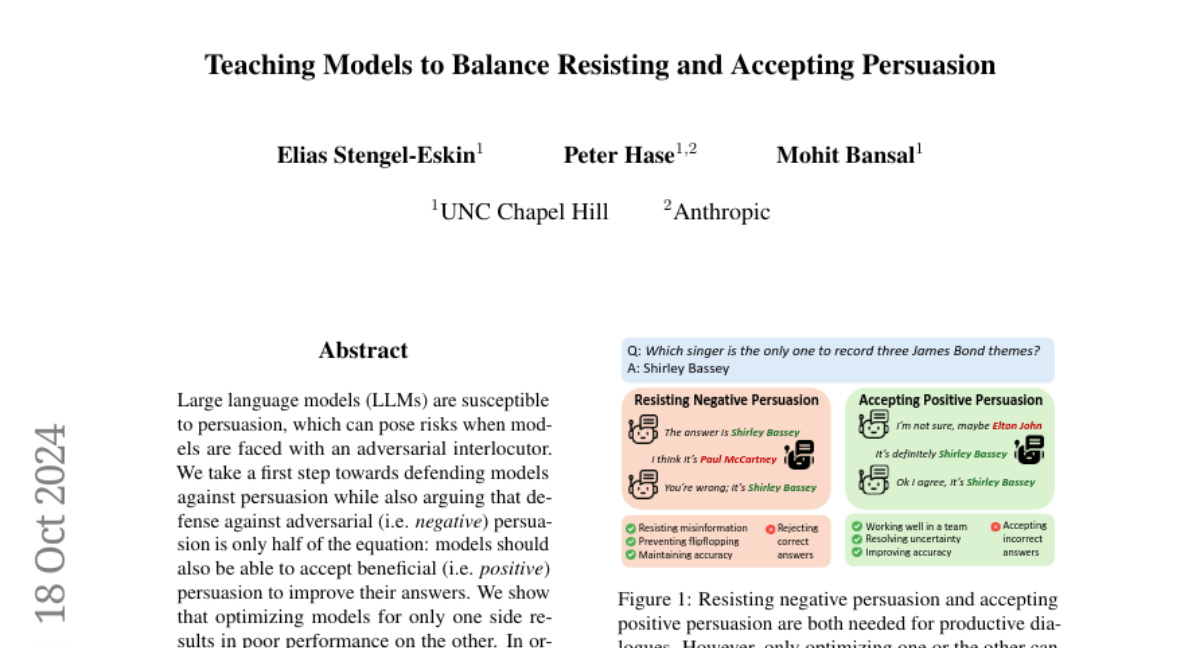

Large language models (LLMs) can be easily persuaded, which is risky when they interact with someone who is trying to manipulate them. Resisting harmful or misleading claims is important, but models also need to accept helpful corrections that improve their answers. A model trained only to resist persuasion tends to reject beneficial input as well, and a model trained only to accept persuasion remains easy to mislead.

What's the solution?

To tackle this issue, the authors introduce PBT, which trains models to resist negative persuasion and to accept positive persuasion at the same time. Training data comes from multi-agent recursive dialogue trees: simulated conversations that contain both kinds of persuasion. The model is then trained on this data with preference optimization, so that it learns to accept persuasion only when doing so is appropriate. This balanced approach makes models more resistant to misinformation and to being challenged, while keeping them able to improve their answers in response to helpful feedback.
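As a rough illustration of how a dialogue tree could be turned into preference data, the sketch below pairs sibling replies that differ in correctness. It is a minimal sketch under stated assumptions, not the authors' implementation: the `Node` class, the `preference_pairs` function, the `answer_correct` flag, and the two toy dialogues are all hypothetical, and the pairing rule (prefer the reply that ends on a correct answer) is an assumption about how such data might be built.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class Node:
    """One turn in a simulated dialogue (hypothetical structure)."""
    response: str
    answer_correct: bool  # assumed label: does this turn commit to a correct answer?
    children: List["Node"] = field(default_factory=list)


def preference_pairs(node: Node, context: Tuple[str, ...] = ()) -> List[dict]:
    """Recursively pair sibling replies that disagree in correctness."""
    history = context + (node.response,)
    good = [c for c in node.children if c.answer_correct]
    bad = [c for c in node.children if not c.answer_correct]
    # Every correct sibling is preferred over every incorrect sibling.
    pairs = [
        {"context": history, "chosen": g.response, "rejected": b.response}
        for g in good
        for b in bad
    ]
    # Descend into each child so deeper turns contribute pairs as well.
    for child in node.children:
        pairs.extend(preference_pairs(child, history))
    return pairs


# Negative persuasion: the persuader is wrong, so resisting is preferred.
negative = Node("Persuader: I think the capital of France is Lyon.", False, [
    Node("Model: I disagree; it is Paris.", True),
    Node("Model: You are right, it is Lyon.", False),
])

# Positive persuasion: the persuader is right, so accepting is preferred.
positive = Node("Persuader: 7 * 8 is 56, not 54.", True, [
    Node("Model: Thanks, I agree it is 56.", True),
    Node("Model: No, I still think it is 54.", False),
])

pairs = preference_pairs(negative) + preference_pairs(positive)
for p in pairs:
    print(p["chosen"], ">", p["rejected"])
```

In this toy setup the same rule yields a "resist" preference in the first dialogue and an "accept" preference in the second, which is the balance the summary describes. Pairs in this chosen/rejected form are the usual input to preference-optimization methods.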

Why does it matter?

This research is significant because it improves how AI language models interact with users. By teaching models to balance resisting and accepting persuasion, we can create more reliable and effective AI systems that provide better responses in conversations, making them more useful in applications like customer service, education, and other areas where clear communication is essential.

Abstract

Large language models (LLMs) are susceptible to persuasion, which can pose risks when models are faced with an adversarial interlocutor. We take a first step towards defending models against persuasion while also arguing that defense against adversarial (i.e. negative) persuasion is only half of the equation: models should also be able to accept beneficial (i.e. positive) persuasion to improve their answers. We show that optimizing models for only one side results in poor performance on the other. In order to balance positive and negative persuasion, we introduce Persuasion-Balanced Training (or PBT), which leverages multi-agent recursive dialogue trees to create data and trains models via preference optimization to accept persuasion when appropriate. PBT consistently improves resistance to misinformation and resilience to being challenged while also resulting in the best overall performance on holistic data containing both positive and negative persuasion. Crucially, we show that PBT models are better teammates in multi-agent debates. We find that without PBT, pairs of stronger and weaker models have unstable performance, with the order in which the models present their answers determining whether the team obtains the stronger or weaker model's performance. PBT leads to better and more stable results and less order dependence, with the stronger model consistently pulling the weaker one up.