TexGen: Text-Guided 3D Texture Generation with Multi-view Sampling and Resampling

Dong Huo, Zixin Guo, Xinxin Zuo, Zhihao Shi, Juwei Lu, Peng Dai, Songcen Xu, Li Cheng, Yee-Hong Yang

2024-08-05

Summary

This paper introduces TexGen, a method for generating 3D textures from text descriptions. It combines multi-view sampling with a noise resampling step on top of a pre-trained text-to-image diffusion model to produce high-quality textures that fit the 3D model, reducing common issues like visible seams and blurriness.

What's the problem?

Generating textures for 3D models from text descriptions is challenging. Existing methods generate images from sampled views and then assemble them into a texture, which often leaves noticeable seams where textures from different views don't match, or overly smoothed areas that lack detail. These issues can make the final 3D object look unrealistic or poorly made.

What's the solution?

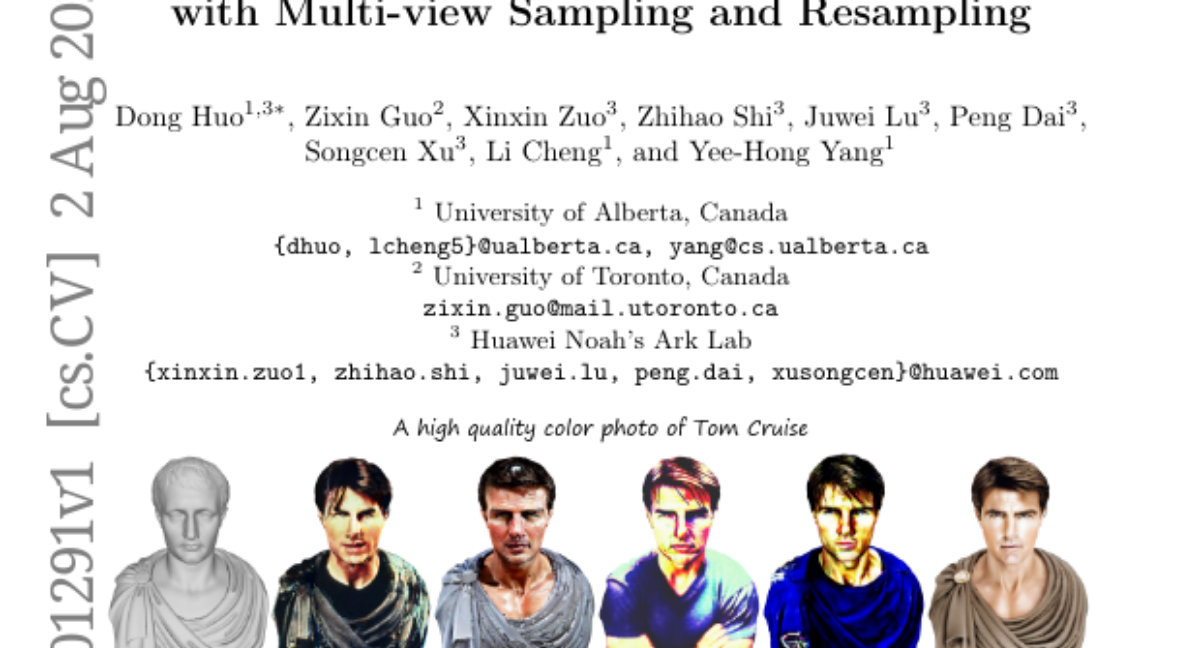

TexGen tackles these problems with a multi-view sampling and resampling approach built on a pre-trained text-to-image diffusion model. It maintains a texture map in RGB space that is updated after each sampling step of the diffusion model, progressively reducing appearance discrepancies between views, and an attention-guided multi-view sampling strategy shares appearance information across views. In addition, a noise resampling technique estimates the noise that serves as input to the next denoising step, guided by both the text prompt and the current texture map, which keeps fine texture details intact. Together, these let TexGen produce textures with rich detail that stay consistent across viewing angles of the 3D model.
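The texture-map update described above can be sketched as a weighted back-projection of every view's predicted colors into one shared texture. This is a minimal sketch under stated assumptions, not the authors' implementation: it assumes each view comes with a precomputed pixel-to-texel lookup (`texel_ids`, with -1 marking background pixels) and per-pixel blending weights (for example, the cosine between surface normal and viewing direction). `fuse_views_into_texture` and `render_view` are hypothetical helpers, and the texture is flattened into a list of RGB texels for brevity.

```python
import numpy as np

def fuse_views_into_texture(prev_texture, view_images, view_texel_ids, view_weights):
    """Hypothetical helper: blend per-view RGB predictions into one texture map.

    prev_texture:   (T, 3) float array, texture from the previous sampling step
    view_images:    list of (P, 3) arrays, predicted RGB per pixel for each view
    view_texel_ids: list of (P,) int arrays, texel hit by each pixel (-1 = background)
    view_weights:   list of (P,) float arrays, assumed per-pixel blending weights
    """
    num_texels = prev_texture.shape[0]
    acc = np.zeros((num_texels, 3))  # weighted color sum per texel
    wsum = np.zeros(num_texels)      # total weight per texel
    for img, ids, wts in zip(view_images, view_texel_ids, view_weights):
        hit = ids >= 0  # ignore background pixels
        # np.add.at accumulates correctly when several pixels hit the same texel
        np.add.at(acc, ids[hit], img[hit] * wts[hit][:, None])
        np.add.at(wsum, ids[hit], wts[hit])
    fused = prev_texture.copy()      # texels no view sees keep their old value
    seen = wsum > 0
    fused[seen] = acc[seen] / wsum[seen][:, None]
    return fused

def render_view(texture, texel_ids, background):
    """Hypothetical helper: look up each pixel's color from the shared texture."""
    out = np.empty((texel_ids.shape[0], 3))
    hit = texel_ids >= 0
    out[hit] = texture[texel_ids[hit]]
    out[~hit] = background
    return out
```

Texels seen by several views receive a weighted average of their colors, so re-rendering any view from the fused map yields the same color on shared surface points, which is the sense in which a shared texture map reduces view discrepancy.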

Why does it matter?

This research is significant because it improves the process of creating realistic textures for 3D models, which is important in fields like video game design, animation, and virtual reality. By enhancing texture quality and consistency, TexGen can help artists and developers create more visually appealing and believable digital content.

Abstract

Given a 3D mesh, we aim to synthesize 3D textures that correspond to arbitrary textual descriptions. Current methods for generating and assembling textures from sampled views often result in prominent seams or excessive smoothing. To tackle these issues, we present TexGen, a novel multi-view sampling and resampling framework for texture generation leveraging a pre-trained text-to-image diffusion model. For view-consistent sampling, we first maintain a texture map in RGB space that is parameterized by the denoising step and updated after each sampling step of the diffusion model to progressively reduce the view discrepancy. An attention-guided multi-view sampling strategy is exploited to broadcast the appearance information across views. To preserve texture details, we develop a noise resampling technique that aids in the estimation of noise, generating inputs for subsequent denoising steps, as directed by the text prompt and current texture map. Through extensive qualitative and quantitative evaluations, we demonstrate that our proposed method produces significantly better texture quality for diverse 3D objects with a high degree of view consistency and rich appearance details, outperforming current state-of-the-art methods. Furthermore, our proposed texture generation technique can also be applied to texture editing while preserving the original identity. More experimental results are available at https://dong-huo.github.io/TexGen/
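The idea of estimating the next denoising input from both the text-conditioned prediction and the current texture can be illustrated with a deterministic DDIM step (eta = 0) whose clean-image estimate is replaced by the texture rendering wherever the texture covers the view. This is a simplified, swapped-in stand-in, not TexGen's exact noise resampling rule. It assumes pixel-space diffusion (or a texture rendering already encoded into the same space as `x_t`), a text-conditioned noise prediction `eps_pred` supplied by the caller, a boolean `visible` mask, and cumulative schedule values with `alpha_bar_t` in (0, 1) and `alpha_bar_prev` in (0, 1]. The function name is hypothetical.

```python
import numpy as np

def ddim_step_with_texture(x_t, eps_pred, x0_texture, visible, alpha_bar_t, alpha_bar_prev):
    """Hypothetical helper: one DDIM (eta = 0) step guided by a texture rendering.

    x_t:        noisy sample at timestep t
    eps_pred:   noise predicted by a text-conditioned model (assumed given)
    x0_texture: current texture rendered into this view, same shape as x_t
    visible:    boolean mask, True where the texture covers the view
    Returns (x_prev, x0_hat, eps_hat).
    """
    # Clean-image estimate implied by the model's noise prediction
    x0_model = (x_t - np.sqrt(1.0 - alpha_bar_t) * eps_pred) / np.sqrt(alpha_bar_t)
    # Trust the shared texture where it is visible, the model elsewhere
    x0_hat = np.where(visible, x0_texture, x0_model)
    # Re-derive the noise so that x_t is exactly explained by x0_hat and eps_hat
    eps_hat = (x_t - np.sqrt(alpha_bar_t) * x0_hat) / np.sqrt(1.0 - alpha_bar_t)
    # Deterministic DDIM update toward the previous (less noisy) timestep
    x_prev = np.sqrt(alpha_bar_prev) * x0_hat + np.sqrt(1.0 - alpha_bar_prev) * eps_hat
    return x_prev, x0_hat, eps_hat
```

Re-deriving `eps_hat` from the blended estimate keeps `x_t = sqrt(alpha_bar_t) * x0_hat + sqrt(1 - alpha_bar_t) * eps_hat` exact, so the next input agrees with the texture where it is known and falls back to the model's prediction where it is not. When no pixel is visible, `eps_hat` reduces to `eps_pred` and the step is an ordinary DDIM update.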