The Impact of Hyperparameters on Large Language Model Inference Performance: An Evaluation of vLLM and HuggingFace Pipelines

Matias Martinez

2024-08-06

Summary

This paper examines how configurable settings, called hyperparameters, affect the inference performance of large language models (LLMs) when generating responses, using two inference libraries: vLLM and HuggingFace pipelines.

What's the problem?

As more open-source LLMs become available, developers need to ensure that these models work efficiently in real-time applications. However, the speed at which these models generate responses can vary greatly depending on how the hyperparameters are set. Without proper configuration, the models may not perform well, leading to slower response times and less effective applications.

What's the solution?

The author analyzed the throughput of 20 different LLMs using vLLM and HuggingFace pipelines to see how hyperparameters affect inference speed. The relationship turned out to be complex: the throughput landscape is irregular, with distinct peaks, so finding the best configuration requires hyperparameter optimization. Re-optimizing the hyperparameters after changing the GPU model improved the throughput of HuggingFace pipelines (the number of tokens generated per second) by an average of 9.16% when upgrading and 13.7% when downgrading the GPU.
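As a rough illustration of the two quantities mentioned above, the following sketch computes throughput as tokens generated per second and the relative improvement between two configurations. The function names and the `generate` callable are hypothetical stand-ins, not the paper's benchmarking code or any library's API, and the numbers in the usage note are illustrative only.

```python
import time
from typing import Callable, List


def measure_throughput(
    generate: Callable[[List[str]], List[int]], prompts: List[str]
) -> float:
    """Return tokens generated per second for one call to `generate`.

    `generate` is a hypothetical callable (an assumption of this sketch)
    that runs inference on the prompts and returns the number of tokens
    produced for each response.
    """
    start = time.perf_counter()
    tokens_per_response = generate(prompts)
    elapsed = time.perf_counter() - start
    return sum(tokens_per_response) / elapsed


def relative_improvement(baseline: float, tuned: float) -> float:
    """Return the percentage gain of `tuned` throughput over `baseline`."""
    return (tuned - baseline) / baseline * 100.0
```

For example, if a default configuration reaches 1000 tokens per second and a tuned one reaches 1100, `relative_improvement(1000.0, 1100.0)` reports a gain of about 10%.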

Why does it matter?

Understanding how to optimize hyperparameters is crucial for developers who want to create fast and efficient AI applications. This research helps improve the performance of LLMs, making them more effective for tasks like chatbots, translation, and other real-time applications where speed is essential.

Abstract

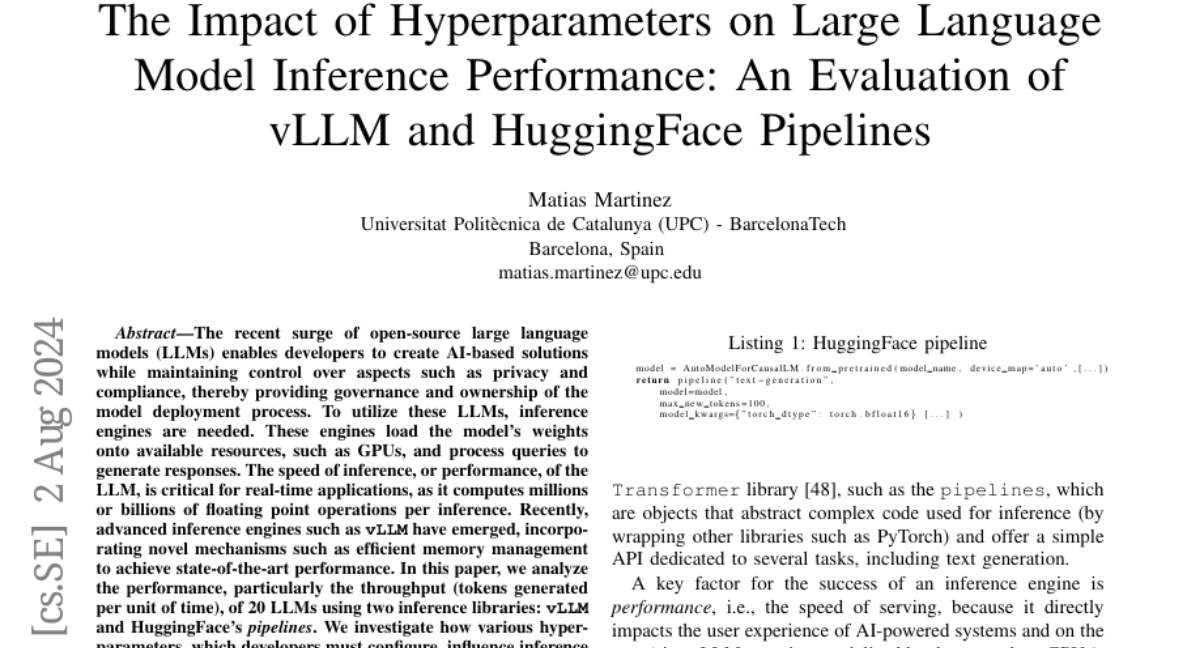

The recent surge of open-source large language models (LLMs) enables developers to create AI-based solutions while maintaining control over aspects such as privacy and compliance, thereby providing governance and ownership of the model deployment process. To utilize these LLMs, inference engines are needed. These engines load the model's weights onto available resources, such as GPUs, and process queries to generate responses. The inference speed, or performance, of an LLM is critical for real-time applications, because each inference requires millions or billions of floating-point operations. Recently, advanced inference engines such as vLLM have emerged, incorporating novel mechanisms such as efficient memory management to achieve state-of-the-art performance. In this paper, we analyze the performance, particularly the throughput (tokens generated per unit of time), of 20 LLMs using two inference libraries: vLLM and HuggingFace's pipelines. We investigate how various hyperparameters, which developers must configure, influence inference performance. Our results reveal that throughput landscapes are irregular, with distinct peaks, highlighting the importance of hyperparameter optimization to achieve maximum performance. We also show that applying hyperparameter optimization when upgrading or downgrading the GPU model used for inference can improve throughput from HuggingFace pipelines by an average of 9.16% and 13.7%, respectively.
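To make the idea of searching an irregular throughput landscape concrete, here is a minimal sketch of an exhaustive grid search over hyperparameter combinations. It is not the optimization procedure used in the paper. The hyperparameter names (`batch_size`, `num_gpus`), the `toy_measure` function, and every throughput value in the table are assumptions made up for illustration; in practice `measure` would run a real benchmark for each configuration.

```python
from itertools import product
from typing import Callable, Dict, List, Tuple

Config = Dict[str, int]


def grid_search(
    measure: Callable[[Config], float], grid: Dict[str, List[int]]
) -> Tuple[Config, float]:
    """Evaluate every combination in `grid` and return the best one.

    `measure` maps a configuration to a throughput in tokens per second.
    """
    names = list(grid)
    best_config: Config = {}
    best_throughput = float("-inf")
    for values in product(*(grid[name] for name in names)):
        config = dict(zip(names, values))
        throughput = measure(config)
        if throughput > best_throughput:
            best_config, best_throughput = config, throughput
    return best_config, best_throughput


# Synthetic, made-up landscape with a single distinct peak (illustrative only).
TOY_LANDSCAPE = {
    (1, 1): 100.0, (1, 2): 90.0,
    (8, 1): 400.0, (8, 2): 650.0,
    (32, 1): 380.0, (32, 2): 500.0,
}


def toy_measure(config: Config) -> float:
    """Hypothetical stand-in for a real throughput benchmark."""
    return TOY_LANDSCAPE[(config["batch_size"], config["num_gpus"])]


best_config, best_throughput = grid_search(
    toy_measure, {"batch_size": [1, 8, 32], "num_gpus": [1, 2]}
)
```

In this toy landscape the largest batch size is not the best choice, which mirrors the point that the peak cannot be found by simply maximizing each setting independently.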