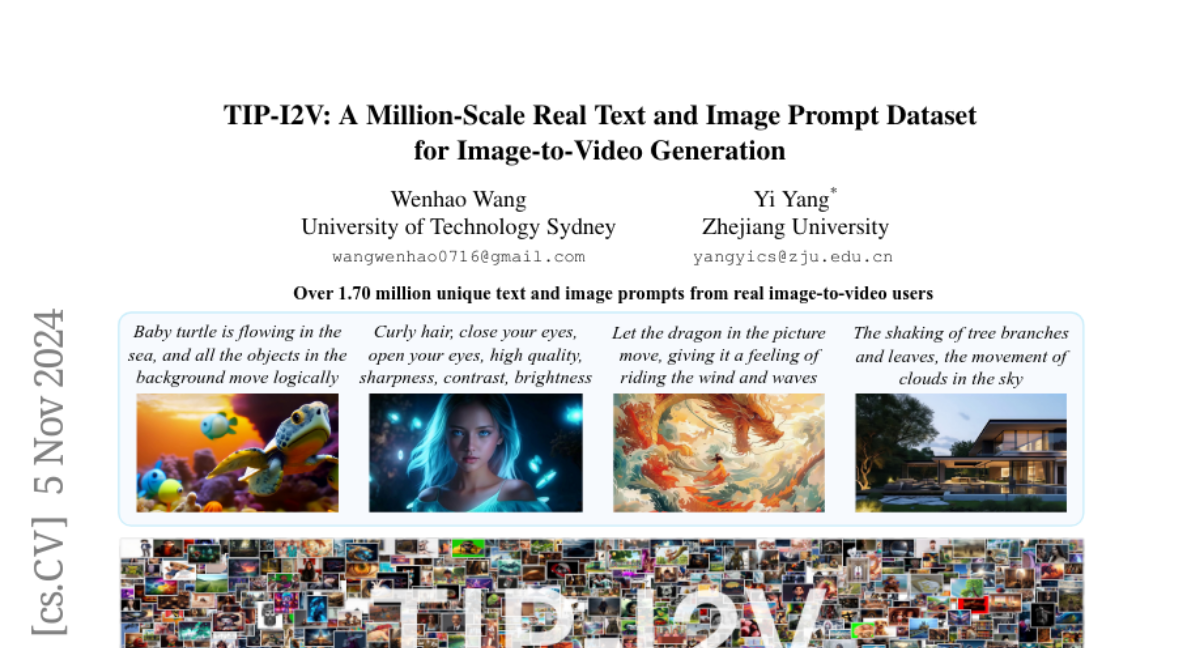

TIP-I2V: A Million-Scale Real Text and Image Prompt Dataset for Image-to-Video Generation

Wenhao Wang, Yi Yang

2024-11-08

Summary

This paper introduces TIP-I2V, a large-scale dataset of over 1.70 million unique, user-provided text and image prompts for image-to-video generation.

What's the problem?

Image-to-video models rely on user-provided text and image prompts, yet no dedicated dataset has existed for studying those prompts. Without such data, researchers cannot easily analyze what users actually ask these models to do or how different prompts affect the quality of the generated videos.

What's the solution?

TIP-I2V collects over 1.70 million unique text and image prompts provided by real users. Alongside the prompts, the authors provide the corresponding videos generated by five state-of-the-art image-to-video models. They also compare TIP-I2V with two popular prompt datasets, VidProM (text-to-video) and DiffusionDB (text-to-image), and highlight differences in both basic and semantic information.

Why does it matter?

TIP-I2V fills a gap in image-to-video research: until now there was no large-scale dataset of real prompts for this task. With it, researchers can analyze user preferences, evaluate the multi-dimensional performance of their trained models, and work on safety problems such as misinformation caused by image-to-video models. Better models could in turn benefit applications such as content creation, education, and entertainment.

Abstract

Video generation models are revolutionizing content creation, with image-to-video models drawing increasing attention due to their enhanced controllability, visual consistency, and practical applications. However, despite their popularity, these models rely on user-provided text and image prompts, and there is currently no dedicated dataset for studying these prompts. In this paper, we introduce TIP-I2V, the first large-scale dataset of over 1.70 million unique user-provided Text and Image Prompts specifically for Image-to-Video generation. Additionally, we provide the corresponding generated videos from five state-of-the-art image-to-video models. We begin by outlining the time-consuming and costly process of curating this large-scale dataset. Next, we compare TIP-I2V to two popular prompt datasets, VidProM (text-to-video) and DiffusionDB (text-to-image), highlighting differences in both basic and semantic information. This dataset enables advancements in image-to-video research. For instance, to develop better models, researchers can use the prompts in TIP-I2V to analyze user preferences and evaluate the multi-dimensional performance of their trained models; and to enhance model safety, they may focus on addressing the misinformation issue caused by image-to-video models. The new research inspired by TIP-I2V and the differences with existing datasets emphasize the importance of a specialized image-to-video prompt dataset. The project is publicly available at https://tip-i2v.github.io.