TOMG-Bench: Evaluating LLMs on Text-based Open Molecule Generation

Jiatong Li, Junxian Li, Yunqing Liu, Dongzhan Zhou, Qing Li

2024-12-20

Summary

This paper introduces TOMG-Bench, a new benchmark designed to evaluate how well large language models (LLMs) can generate molecules based on text descriptions. It includes various tasks related to molecule editing, optimization, and custom generation.

What's the problem?

Generating molecules from text is complex and requires precise understanding. Existing methods often rely on specific datasets that limit the models' ability to learn and adapt to new tasks, making it hard to evaluate their true capabilities in open-domain scenarios.

What's the solution?

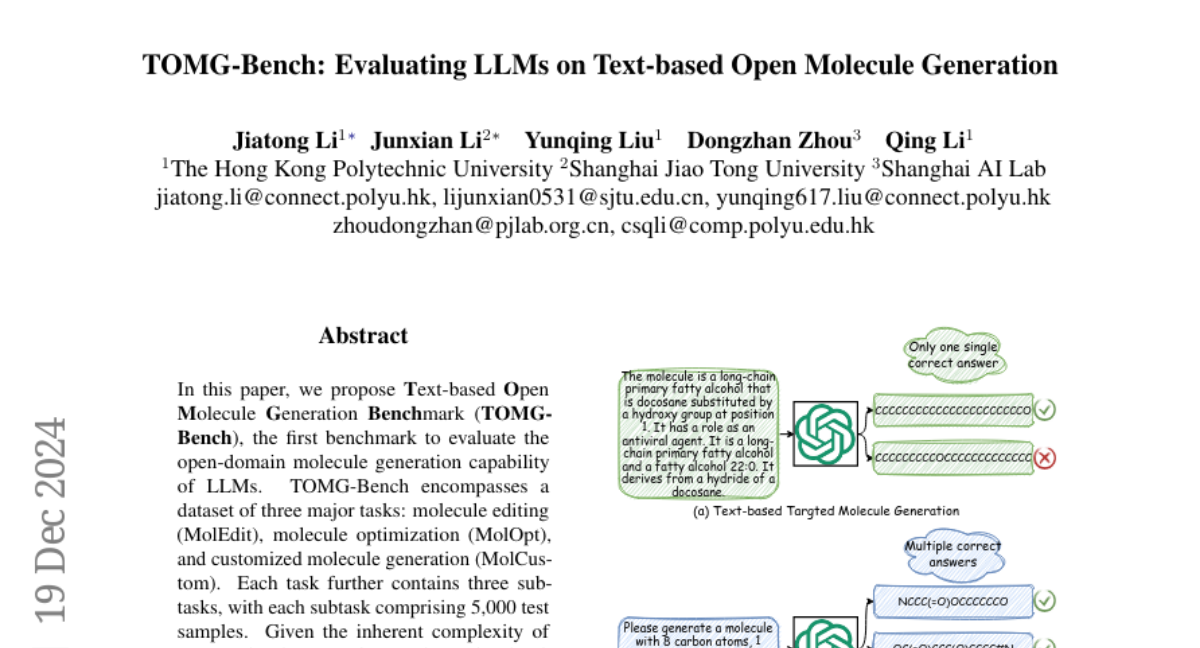

TOMG-Bench consists of three main tasks: molecule editing (MolEdit), molecule optimization (MolOpt), and customized molecule generation (MolCustom). Each task has several subtasks with thousands of test samples. The benchmark also features an automated evaluation system to measure the quality and accuracy of the generated molecules. Additionally, a specialized dataset called OpenMolIns was created to help improve the models' performance on these tasks.

Why it matters?

This research is significant because it provides a structured way to assess the abilities of LLMs in generating molecules from text. By identifying strengths and weaknesses in current models, TOMG-Bench can guide future improvements in AI-assisted chemistry, potentially leading to advancements in drug discovery and material science.

Abstract

In this paper, we propose Text-based Open Molecule Generation Benchmark (TOMG-Bench), the first benchmark to evaluate the open-domain molecule generation capability of LLMs. TOMG-Bench encompasses a dataset of three major tasks: molecule editing (MolEdit), molecule optimization (MolOpt), and customized molecule generation (MolCustom). Each task further contains three subtasks, with each subtask comprising 5,000 test samples. Given the inherent complexity of open molecule generation, we have also developed an automated evaluation system that helps measure both the quality and the accuracy of the generated molecules. Our comprehensive benchmarking of 25 LLMs reveals the current limitations and potential areas for improvement in text-guided molecule discovery. Furthermore, with the assistance of OpenMolIns, a specialized instruction tuning dataset proposed for solving challenges raised by TOMG-Bench, Llama3.1-8B could outperform all the open-source general LLMs, even surpassing GPT-3.5-turbo by 46.5\% on TOMG-Bench. Our codes and datasets are available through https://github.com/phenixace/TOMG-Bench.