Training Large Language Models to Reason in a Continuous Latent Space

Shibo Hao, Sainbayar Sukhbaatar, DiJia Su, Xian Li, Zhiting Hu, Jason Weston, Yuandong Tian

2024-12-10

Summary

This paper introduces Coconut (Chain of Continuous Thought), an approach that lets large language models (LLMs) reason in a continuous latent space instead of relying solely on natural language.

What's the problem?

LLMs typically solve complex problems with a 'chain-of-thought' (CoT), writing out each reasoning step in natural language, but this can be limiting. Most of the tokens they generate serve textual coherence rather than the reasoning itself, while a few critical tokens require complex planning. This makes it especially hard for the model to handle tasks where it must weigh several possible next steps or backtrack.

What's the solution?

The authors introduce Coconut, which uses the last hidden state of the LLM as a representation of its reasoning state, referred to as a 'continuous thought.' Instead of decoding this state into a word token, they feed it back into the model as the next input embedding. This lets a continuous thought encode several alternative next steps at once, rather than committing early to a single path. Experiments show that Coconut improves the LLM on several reasoning tasks and outperforms CoT on certain logical reasoning tasks that require substantial backtracking, while using fewer thinking tokens at inference.
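The feedback loop described above can be sketched with a toy stand-in for an LLM. This is a minimal illustration under stated assumptions, not the authors' implementation: the "model" is a single random matrix with mean pooling, and `make_toy_model`, `last_hidden_state`, `decode_token`, `reason_in_language`, and `reason_in_latent` are hypothetical names chosen for this example. The only point it shows is the difference between decoding each step into a token and appending the hidden state directly as the next input embedding.

```python
import math
import random

HIDDEN = 4  # toy hidden size; input embeddings share this dimension (assumption)

def make_toy_model(vocab_size, seed=0):
    """Build a random embedding table and a random weight matrix (toy stand-in for an LLM)."""
    rng = random.Random(seed)
    embed = [[rng.uniform(-1, 1) for _ in range(HIDDEN)] for _ in range(vocab_size)]
    weight = [[rng.uniform(-1, 1) for _ in range(HIDDEN)] for _ in range(HIDDEN)]
    return embed, weight

def last_hidden_state(weight, inputs):
    """Stand-in for a forward pass: mean-pool the input vectors, then apply tanh(W x)."""
    pooled = [sum(vec[i] for vec in inputs) / len(inputs) for i in range(HIDDEN)]
    return [math.tanh(sum(w * x for w, x in zip(row, pooled))) for row in weight]

def decode_token(embed, hidden):
    """Language-space step: pick the token whose embedding scores highest against the hidden state."""
    scores = [sum(e * h for e, h in zip(row, hidden)) for row in embed]
    return max(range(len(scores)), key=scores.__getitem__)

def reason_in_language(embed, weight, prompt_ids, steps):
    """CoT-style loop: each step is collapsed to one discrete token, then re-embedded."""
    inputs = [embed[t] for t in prompt_ids]
    for _ in range(steps):
        hidden = last_hidden_state(weight, inputs)
        inputs.append(embed[decode_token(embed, hidden)])
    return inputs

def reason_in_latent(embed, weight, prompt_ids, steps):
    """Coconut-style loop: the hidden state itself becomes the next input embedding."""
    inputs = [embed[t] for t in prompt_ids]
    for _ in range(steps):
        hidden = last_hidden_state(weight, inputs)
        inputs.append(hidden)  # continuous thought: no decoding to a token
    return inputs

embed, weight = make_toy_model(vocab_size=8)
spoken = reason_in_language(embed, weight, [1, 2, 3], steps=2)
latent = reason_in_latent(embed, weight, [1, 2, 3], steps=2)
```

In the language-space loop every appended vector is a row of the embedding table, so whatever else the hidden state carried is discarded at each step. In the latent loop the appended vector is the unmodified hidden state.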

Why does it matter?

This research is significant because it shows that an LLM's reasoning does not have to be expressed in words. By letting LLMs explore different reasoning paths without the constraints of language, Coconut could improve performance on complex tasks that depend on planning, and it offers insights for future research on latent reasoning.

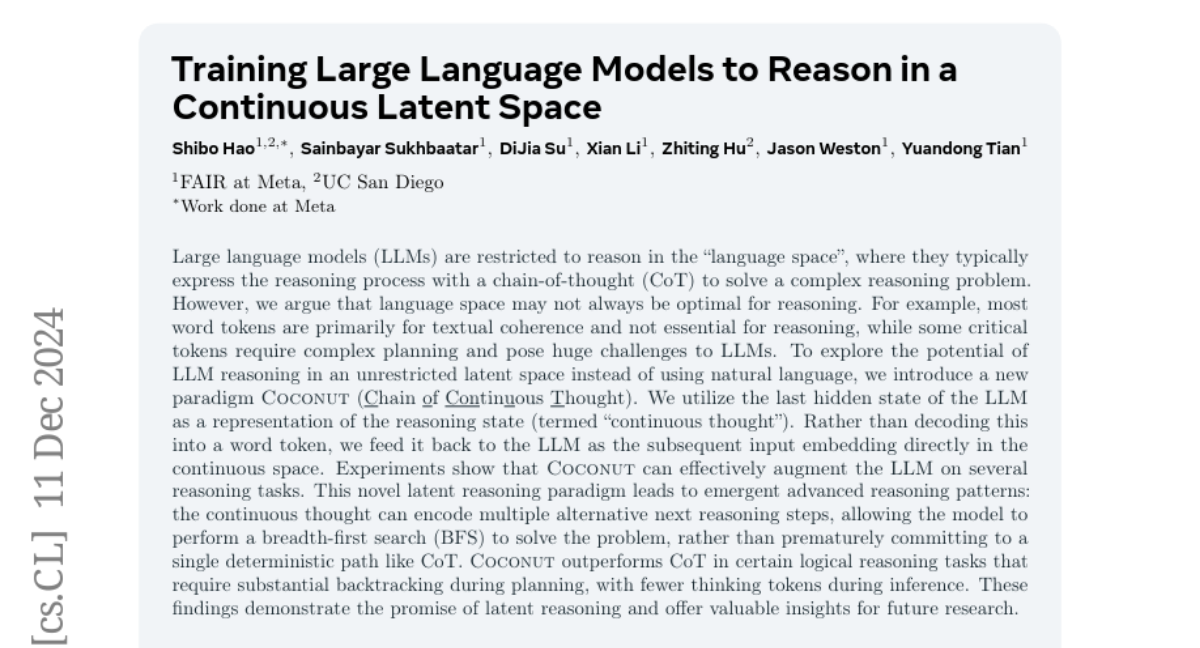

Abstract

Large language models (LLMs) are restricted to reason in the "language space", where they typically express the reasoning process with a chain-of-thought (CoT) to solve a complex reasoning problem. However, we argue that language space may not always be optimal for reasoning. For example, most word tokens are primarily for textual coherence and not essential for reasoning, while some critical tokens require complex planning and pose huge challenges to LLMs. To explore the potential of LLM reasoning in an unrestricted latent space instead of using natural language, we introduce a new paradigm Coconut (Chain of Continuous Thought). We utilize the last hidden state of the LLM as a representation of the reasoning state (termed "continuous thought"). Rather than decoding this into a word token, we feed it back to the LLM as the subsequent input embedding directly in the continuous space. Experiments show that Coconut can effectively augment the LLM on several reasoning tasks. This novel latent reasoning paradigm leads to emergent advanced reasoning patterns: the continuous thought can encode multiple alternative next reasoning steps, allowing the model to perform a breadth-first search (BFS) to solve the problem, rather than prematurely committing to a single deterministic path like CoT. Coconut outperforms CoT in certain logical reasoning tasks that require substantial backtracking during planning, with fewer thinking tokens during inference. These findings demonstrate the promise of latent reasoning and offer valuable insights for future research.
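The contrast the abstract draws between breadth-first search and committing to a single path can be illustrated on a small graph. The sketch below is only an analogy for the search behavior, assuming a hypothetical acyclic graph `GRAPH` with start node `"A"` and goal `"G"`. It does not model how continuous thoughts encode alternatives inside the network.

```python
from collections import deque

# Hypothetical toy graph (acyclic): node -> list of successors.
GRAPH = {
    "A": ["B", "C"],
    "B": ["D"],
    "C": ["E"],
    "D": [],      # dead end
    "E": ["G"],
    "G": [],
}

def greedy_path(graph, start, goal):
    """Commit to the first successor at every step and never revisit the choice."""
    path = [start]
    while path[-1] != goal:
        successors = graph[path[-1]]
        if not successors:
            return None  # stuck in a dead end; recovering would require backtracking
        path.append(successors[0])
    return path

def bfs_path(graph, start, goal):
    """Keep every candidate path on a frontier and extend them level by level."""
    frontier = deque([[start]])
    visited = {start}
    while frontier:
        path = frontier.popleft()
        if path[-1] == goal:
            return path
        for nxt in graph[path[-1]]:
            if nxt not in visited:
                visited.add(nxt)
                frontier.append(path + [nxt])
    return None

committed = greedy_path(GRAPH, "A", "G")
explored = bfs_path(GRAPH, "A", "G")
```

Here the committed search follows `A`, `B`, `D` and gets stuck, while the breadth-first search keeps both branches from `A` alive and reaches `G` through `C` and `E` without ever needing to backtrack.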