Training Task Experts through Retrieval Based Distillation

Jiaxin Ge, Xueying Jia, Vijay Viswanathan, Hongyin Luo, Graham Neubig

2024-07-09

Summary

This paper talks about Retrieval Based Distillation (ReBase), a new method for creating specialized models for specific tasks by retrieving high-quality data from online sources and transforming it into useful information for training.

What's the problem?

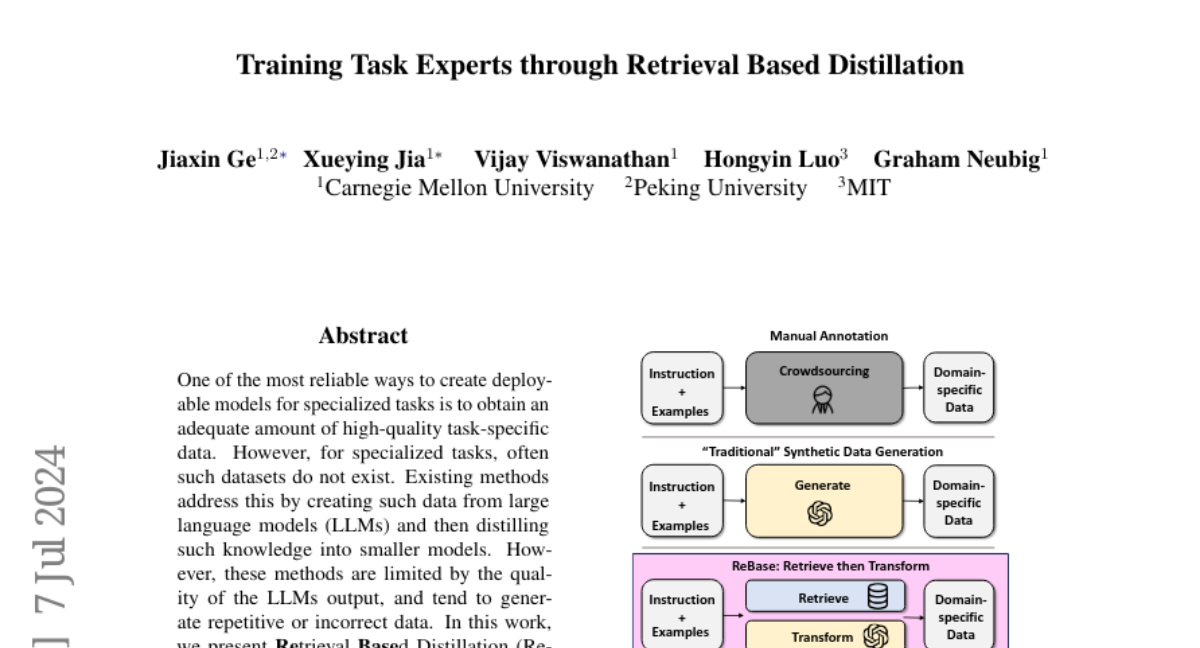

The main problem is that many specialized tasks lack sufficient high-quality datasets for training models. Existing methods try to generate this data using large language models (LLMs), but these models often produce repetitive or inaccurate information, which can lead to poor performance in specialized applications.

What's the solution?

To address this issue, the authors developed ReBase, which first retrieves relevant data from rich online sources instead of relying solely on LLMs. This approach increases the diversity and quality of the training data. Additionally, ReBase helps generate Chain-of-Thought reasoning, which allows the model to think through problems step-by-step. The authors tested ReBase on four different benchmarks and found that it improved performance significantly—by up to 7.8% on the SQuAD dataset, 1.37% on MNLI, and 1.94% on BigBench-Hard.

Why it matters?

This research is important because it provides a more effective way to train models for specialized tasks, ensuring they have access to high-quality data. By improving how models learn from diverse sources, ReBase can enhance their performance in real-world applications, making them more reliable and effective in areas like question answering and natural language understanding.

Abstract

One of the most reliable ways to create deployable models for specialized tasks is to obtain an adequate amount of high-quality task-specific data. However, for specialized tasks, often such datasets do not exist. Existing methods address this by creating such data from large language models (LLMs) and then distilling such knowledge into smaller models. However, these methods are limited by the quality of the LLMs output, and tend to generate repetitive or incorrect data. In this work, we present Retrieval Based Distillation (ReBase), a method that first retrieves data from rich online sources and then transforms them into domain-specific data. This method greatly enhances data diversity. Moreover, ReBase generates Chain-of-Thought reasoning and distills the reasoning capacity of LLMs. We test our method on 4 benchmarks and results show that our method significantly improves performance by up to 7.8% on SQuAD, 1.37% on MNLI, and 1.94% on BigBench-Hard.