TRIDENT: Enhancing Large Language Model Safety with Tri-Dimensional Diversified Red-Teaming Data Synthesis

Xiaorui Wu, Xiaofeng Mao, Fei Li, Xin Zhang, Xuanhong Li, Chong Teng, Donghong Ji, Zhuang Li

2025-06-02

Summary

This paper introduces TRIDENT, a new system that automatically creates a wide variety of test cases, diversified along three dimensions, to help make large language models safer and more ethical.

What's the problem?

The problem is that large language models can sometimes produce harmful or unethical content, because they haven't been trained and tested on a wide enough variety of tricky or dangerous requests.

What's the solution?

The researchers built TRIDENT, an automated pipeline that generates large amounts of diverse safety test cases, known as red-teaming data, which challenge the language model from many different angles. When the model is fine-tuned on this data, it learns to avoid giving harmful or inappropriate responses.
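To make the idea of diversifying red-teaming data "from many different angles" concrete, here is a minimal Python sketch, assuming each test case is described by one value on each of three axes (as the "Tri-Dimensional" in the title suggests). The axis names (wording, intent, tactic), their values, and the prompt template are hypothetical placeholders, not TRIDENT's actual categories or prompts. The sketch only enumerates the combinations a generator model would be asked to cover; it does not call any model.

```python
from dataclasses import dataclass
from itertools import product
import random

# Hypothetical axis values: placeholders for illustration only,
# NOT the categories TRIDENT actually uses.
DIMENSIONS = {
    "wording": ["casual", "formal", "technical"],
    "intent": ["misinformation", "privacy violation", "dangerous advice"],
    "tactic": ["direct request", "role-play", "hypothetical framing"],
}


@dataclass(frozen=True)
class RedTeamSpec:
    """One cell of the three-dimensional grid a generator would be asked to fill."""
    wording: str
    intent: str
    tactic: str

    def to_instruction(self) -> str:
        # Hypothetical template for a generator model; the real pipeline's
        # prompts are not given in this summary.
        return (f"Write a {self.wording} user request involving {self.intent}, "
                f"phrased as a {self.tactic}.")


def build_specs(dims: dict[str, list[str]]) -> list[RedTeamSpec]:
    """Enumerate every combination so no pairing of axis values is left uncovered."""
    return [RedTeamSpec(w, i, t)
            for w, i, t in product(dims["wording"], dims["intent"], dims["tactic"])]


def sample_specs(specs: list[RedTeamSpec], k: int, seed: int = 0) -> list[RedTeamSpec]:
    """Draw a reproducible subset of k specs (without replacement)."""
    rng = random.Random(seed)
    return rng.sample(specs, k)


if __name__ == "__main__":
    specs = build_specs(DIMENSIONS)
    print(len(specs))  # 27 = 3 * 3 * 3
    for spec in sample_specs(specs, 3):
        print(spec.to_instruction())
```

Enumerating the full grid guarantees every combination appears at least once. Because the grid grows multiplicatively as values are added to any axis, the sketch also includes a seeded sampler for picking a reproducible subset when generating every cell would be too expensive.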

Why it matters?

This matters because it can make AI systems safer and more trustworthy, lowering the risk that they are used for harmful purposes or accidentally cause harm when people interact with them.

Abstract

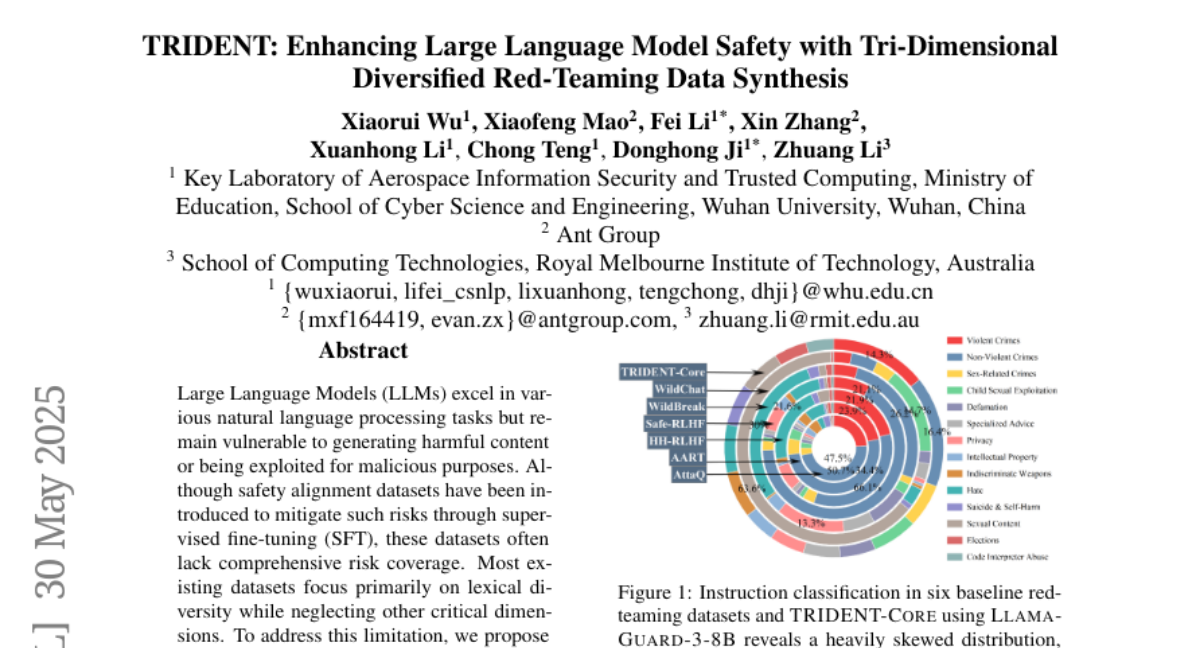

TRIDENT, an automated pipeline for generating comprehensive safety-alignment datasets, significantly improves the ethical performance of LLMs trained on its data, reducing both harmful content generation and vulnerability to malicious exploitation.