TryOffDiff: Virtual-Try-Off via High-Fidelity Garment Reconstruction using Diffusion Models

Riza Velioglu, Petra Bevandic, Robin Chan, Barbara Hammer

2024-11-29

Summary

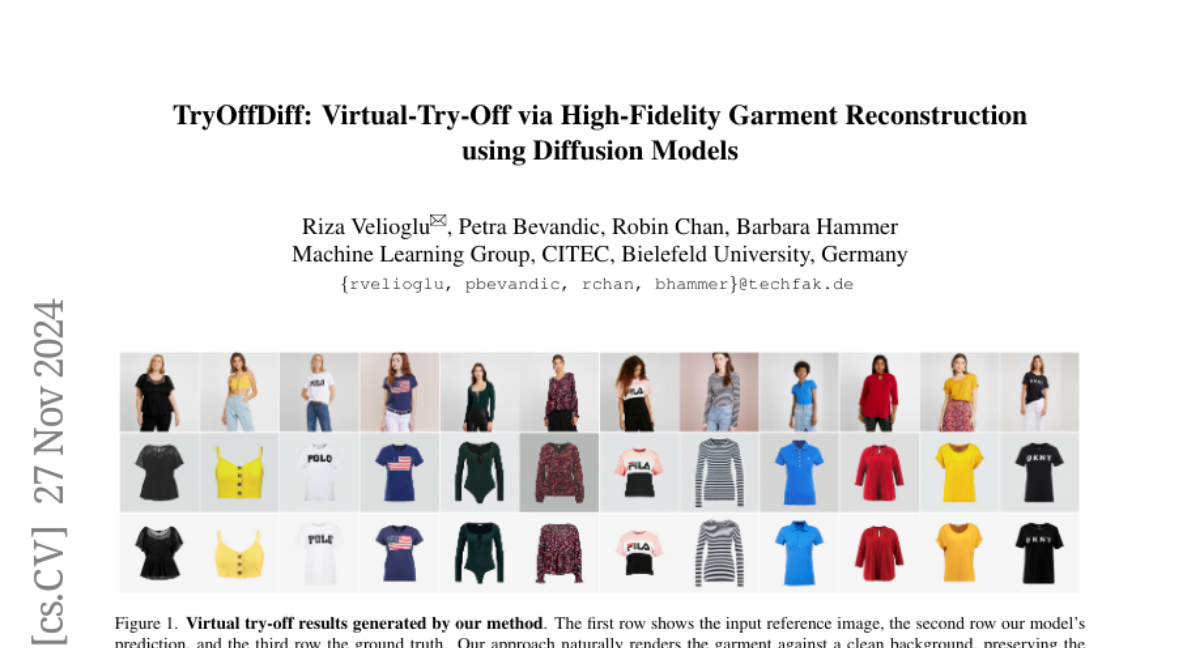

This paper introduces TryOffDiff, a new method for creating high-quality images of garments from single photos of people wearing them, focusing on accurately capturing the details of the clothing.

What's the problem?

Traditional methods for virtual try-on (VTON) involve digitally dressing models with clothes, but they often struggle to produce clear and accurate images of the garments. This can lead to issues like poor representation of garment shapes, textures, and patterns, making it hard to evaluate how well these models work.

What's the solution?

The authors propose a new task called Virtual Try-Off (VTOFF), which aims to generate standardized images of garments from photos. They developed TryOffDiff, a model that uses advanced techniques like Stable Diffusion and SigLIP-based visual conditioning to ensure that the generated garment images are detailed and high-quality. Their experiments show that this approach works better than previous methods with fewer steps needed for processing.

Why it matters?

This research is significant because it enhances how clothing is represented in digital formats, which is crucial for online shopping and fashion industries. By improving the accuracy of garment images, TryOffDiff can help customers make better purchasing decisions and reduce returns, ultimately benefiting both consumers and retailers.

Abstract

This paper introduces Virtual Try-Off (VTOFF), a novel task focused on generating standardized garment images from single photos of clothed individuals. Unlike traditional Virtual Try-On (VTON), which digitally dresses models, VTOFF aims to extract a canonical garment image, posing unique challenges in capturing garment shape, texture, and intricate patterns. This well-defined target makes VTOFF particularly effective for evaluating reconstruction fidelity in generative models. We present TryOffDiff, a model that adapts Stable Diffusion with SigLIP-based visual conditioning to ensure high fidelity and detail retention. Experiments on a modified VITON-HD dataset show that our approach outperforms baseline methods based on pose transfer and virtual try-on with fewer pre- and post-processing steps. Our analysis reveals that traditional image generation metrics inadequately assess reconstruction quality, prompting us to rely on DISTS for more accurate evaluation. Our results highlight the potential of VTOFF to enhance product imagery in e-commerce applications, advance generative model evaluation, and inspire future work on high-fidelity reconstruction. Demo, code, and models are available at: https://rizavelioglu.github.io/tryoffdiff/