UniT: Unified Tactile Representation for Robot Learning

Zhengtong Xu, Raghava Uppuluri, Xinwei Zhang, Cael Fitch, Philip Glen Crandall, Wan Shou, Dongyi Wang, Yu She

2024-08-14

Summary

This paper presents UniT, a method for learning a single, unified tactile representation that robots can reuse across both perception and manipulation tasks.

What's the problem?

Teaching robots to recognize and manipulate objects by touch is challenging: existing methods often require large amounts of training data and can struggle to generalize across tasks. Ideally, a robot would learn from simple examples and apply that knowledge to new situations without extra training.

What's the solution?

UniT uses a VQVAE (Vector Quantized Variational Autoencoder) to learn a compact latent representation of tactile information. It is trained on tactile images of just one simple object, and the learned representation can then be transferred zero-shot, without retraining the representation itself, to other downstream tasks, including both perception and manipulation policy learning. The authors evaluated it on an in-hand 3D pose estimation benchmark, where it outperformed existing visual and tactile representation learning methods, and on three real-world manipulation tasks involving diverse objects.
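The "vector quantized" part of a VQVAE is what makes its latent space compact: each vector produced by the encoder is snapped to the nearest entry in a learned codebook, so the latent can be stored as a small set of discrete indices. The sketch below shows only that nearest-neighbour lookup. It is not UniT's code; the codebook size, embedding dimension, and latent values are hypothetical toy numbers, and the training losses that learn the codebook are omitted.

```python
"""Toy sketch of the vector-quantization step at the core of a VQVAE.

Assumption: this is NOT UniT's implementation. The codebook and the
encoder outputs below are made-up illustrative values.
"""
from typing import List, Sequence, Tuple

Vector = Sequence[float]


def squared_distance(a: Vector, b: Vector) -> float:
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def quantize(
    latents: Sequence[Vector], codebook: Sequence[Vector]
) -> Tuple[List[int], List[Vector]]:
    """Replace each encoder output vector with its nearest codebook entry.

    Returns the discrete code indices and the quantized vectors that a
    VQVAE decoder would reconstruct from.
    """
    indices: List[int] = []
    quantized: List[Vector] = []
    for z in latents:
        # Nearest-neighbour lookup over all codebook entries.
        best = min(range(len(codebook)), key=lambda k: squared_distance(z, codebook[k]))
        indices.append(best)
        quantized.append(codebook[best])
    return indices, quantized


if __name__ == "__main__":
    # Hypothetical 4-entry codebook of 2-D embeddings.
    codebook = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0), (1.0, 1.0)]
    # Hypothetical encoder outputs for three spatial positions of a tactile image.
    latents = [(0.9, 0.1), (0.2, 0.8), (0.6, 0.7)]
    codes, _ = quantize(latents, codebook)
    print(codes)  # [1, 2, 3]
```

In a real VQVAE the codebook is learned jointly with the encoder and decoder; here it is fixed so that the lookup itself is easy to follow.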

Why it matters?

This research matters because it makes tactile learning easier to reuse: a representation that is simple to train and plug-and-play can make robots more versatile in real-world applications. Robots that better understand and interact with their environment through touch could be more useful in areas such as manufacturing, healthcare, and service industries.

Abstract

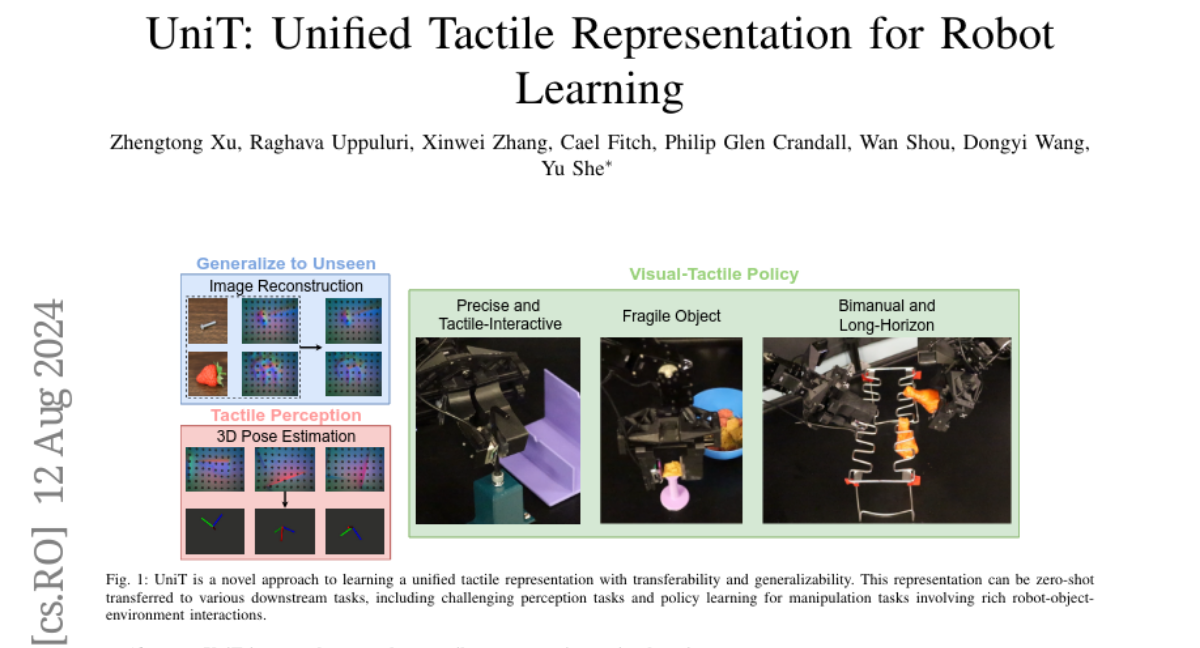

UniT is a novel approach to tactile representation learning that uses a VQVAE to learn a compact latent space, which serves as the tactile representation. The representation is trained on tactile images obtained from a single simple object so that it is transferable and generalizable. It can be zero-shot transferred to various downstream tasks, including perception tasks and manipulation policy learning. Our benchmarking on an in-hand 3D pose estimation task shows that UniT outperforms existing visual and tactile representation learning methods. Additionally, UniT's effectiveness in policy learning is demonstrated across three real-world tasks involving diverse manipulated objects and complex robot-object-environment interactions. Through extensive experimentation, UniT is shown to be a simple-to-train, plug-and-play, yet widely effective method for tactile representation learning. For more details, please refer to our open-source repository https://github.com/ZhengtongXu/UniT and the project website https://zhengtongxu.github.io/unifiedtactile.github.io/.
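One common way to read "zero-shot transferred" and "plug-and-play" is the frozen-encoder pattern: the pretrained representation is reused as-is, and each downstream task trains only its own small head on top of it. The sketch below illustrates that pattern under loud assumptions: `FrozenEncoder`, `LinearHead`, and `train_head` are hypothetical names, the "encoder" is a fixed hand-written feature map standing in for a pretrained tactile encoder, and the downstream task is a toy 1-D regression rather than pose estimation or policy learning. It is not UniT's architecture or training code.

```python
"""Toy sketch of reusing a frozen representation in a downstream task.

Assumptions (not UniT's code): the encoder is a fixed feature map standing
in for a pretrained tactile encoder, and the task is a made-up regression.
Only the task-specific head is trained.
"""
from typing import List, Sequence, Tuple


class FrozenEncoder:
    """Stand-in for a pretrained encoder whose weights are never updated."""

    def __init__(self, weights: Sequence[float]) -> None:
        self.weights = tuple(weights)  # stored as a tuple so it is never modified

    def __call__(self, x: float) -> List[float]:
        # Fixed features: one scaled copy of the input per weight, plus a bias feature.
        return [w * x for w in self.weights] + [1.0]


class LinearHead:
    """Task-specific linear head trained on top of the frozen features."""

    def __init__(self, dim: int) -> None:
        self.w = [0.0] * dim

    def predict(self, feats: Sequence[float]) -> float:
        return sum(wi * fi for wi, fi in zip(self.w, feats))

    def sgd_step(self, feats: Sequence[float], target: float, lr: float) -> float:
        err = self.predict(feats) - target
        # Gradient of 0.5 * err**2 with respect to each head weight.
        self.w = [wi - lr * err * fi for wi, fi in zip(self.w, feats)]
        return 0.5 * err ** 2


def train_head(
    encoder: FrozenEncoder,
    data: Sequence[Tuple[float, float]],
    epochs: int = 200,
    lr: float = 0.05,
) -> Tuple[LinearHead, List[float]]:
    """Train only the head with per-sample SGD; the encoder is just called."""
    head = LinearHead(len(encoder(0.0)))
    losses: List[float] = []
    for _ in range(epochs):
        total = 0.0
        for x, y in data:
            total += head.sgd_step(encoder(x), y, lr)
        losses.append(total / len(data))  # mean training loss for this epoch
    return head, losses


if __name__ == "__main__":
    encoder = FrozenEncoder([0.5, -1.0])
    # Toy downstream task: regress y = 2x + 1.
    data = [(x, 2.0 * x + 1.0) for x in (-1.0, -0.5, 0.0, 0.5, 1.0)]
    head, losses = train_head(encoder, data)
    print(encoder.weights)  # unchanged: (0.5, -1.0)
    print(round(head.predict(encoder(0.5)), 3))  # close to 2.0 (= 2 * 0.5 + 1)
```

Keeping the encoder fixed is what makes the representation plug-and-play in this sketch: a new task only needs its own head, and the shared representation never has to be retrained.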