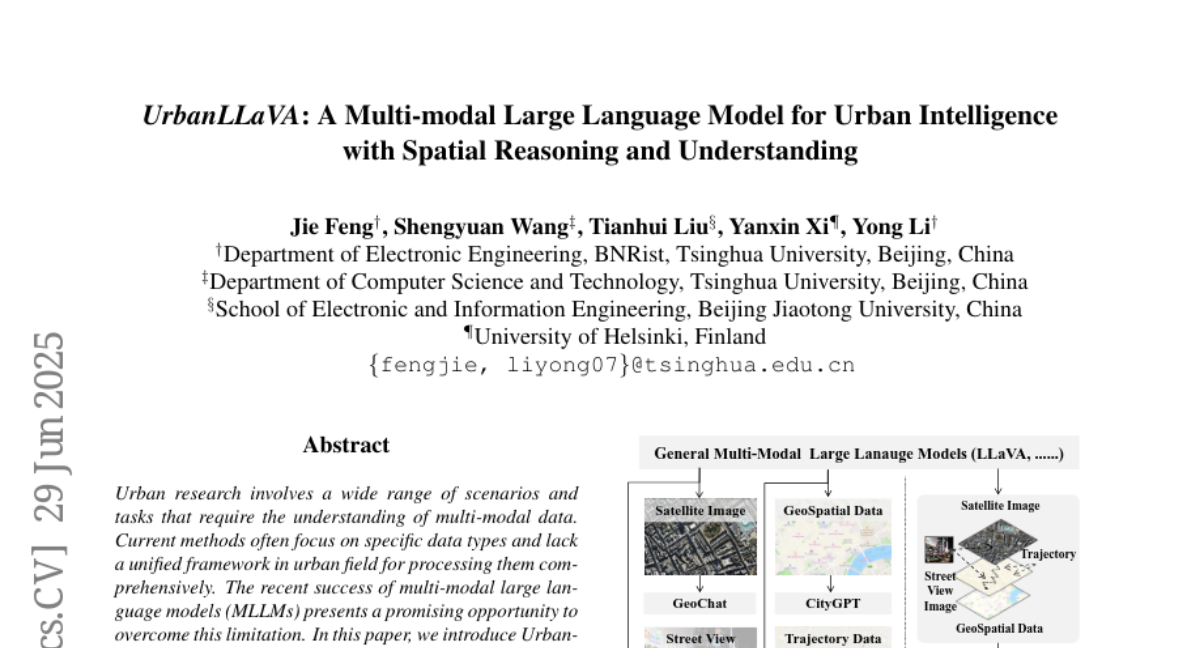

UrbanLLaVA: A Multi-modal Large Language Model for Urban Intelligence with Spatial Reasoning and Understanding

Jie Feng, Shengyuan Wang, Tianhui Liu, Yanxin Xi, Yong Li

2025-07-01

Summary

This paper introduces UrbanLLaVA, a multi-modal large language model designed to understand and work with many different types of data from cities. It combines street-view images, satellite images, maps, and movement data to help solve a range of urban tasks.

What's the problem?

Most current models either focus on just one type of city data or struggle to combine several data types well. As a result, they can't fully understand complex urban environments or handle the wide range of city-related tasks effectively.

What's the solution?

The researchers created UrbanLLaVA, which can process four major types of city data together. They developed new ways to collect and organize urban data and designed a multi-stage training process to help the model learn better spatial reasoning and knowledge about cities. With this approach, UrbanLLaVA performed better on many urban tasks and generalized well across different cities.

Why does it matter?

This matters because understanding and analyzing complex urban data helps city planners, researchers, and governments make smarter decisions. UrbanLLaVA's ability to handle multiple data types and perform well in different cities shows promise for improving how we plan and manage urban environments.

Abstract

UrbanLLaVA, a multi-modal large language model, processes diverse urban data types and outperforms existing models in various urban tasks.