V^3: Viewing Volumetric Videos on Mobiles via Streamable 2D Dynamic Gaussians

Penghao Wang, Zhirui Zhang, Liao Wang, Kaixin Yao, Siyuan Xie, Jingyi Yu, Minye Wu, Lan Xu

2024-09-23

Summary

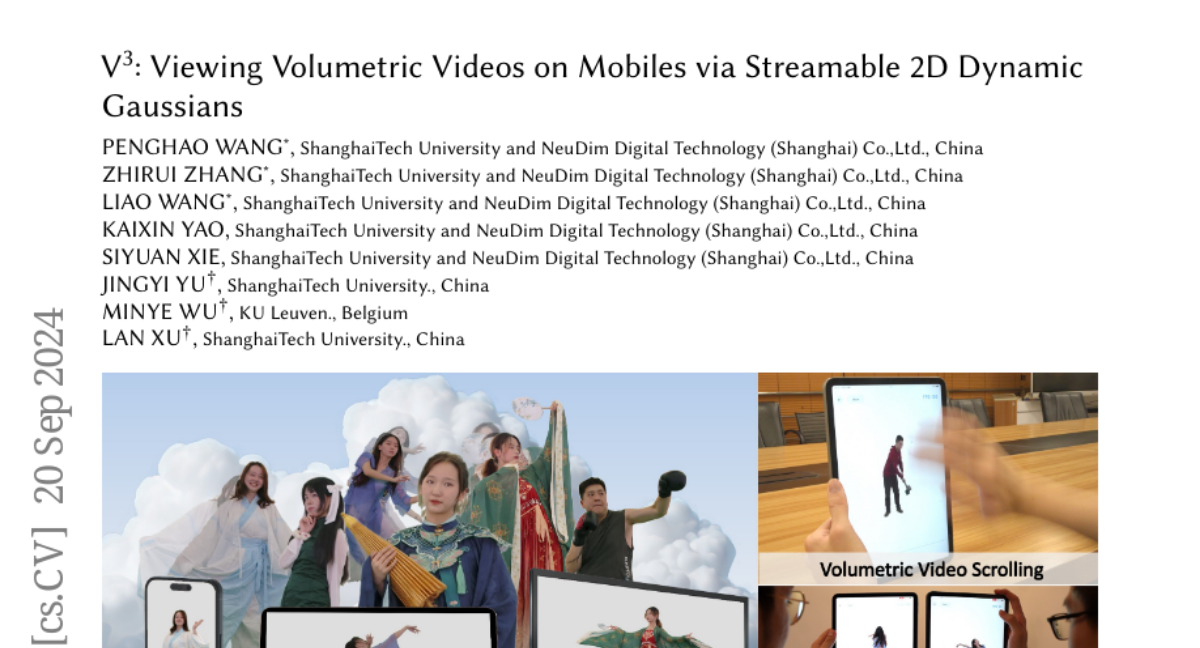

This paper introduces V^3, a new method for streaming and viewing high-quality volumetric videos on mobile devices. It allows users to experience immersive 3D videos as easily as watching regular 2D videos.

What's the problem?

Volumetric videos capture 3D scenes and offer a more immersive experience than traditional videos, but they are difficult to stream to mobile devices. Current methods for rendering them, such as dynamic 3D Gaussian Splatting (3DGS), demand more computing power and bandwidth than smartphones and tablets can readily supply, which degrades or even prevents playback.

What's the solution?

To solve this problem, the researchers developed V^3, which treats dynamic 3D Gaussian data as 2D videos. This lets the system use the hardware video codecs already found in mobile devices, which makes the content easy to stream. They also designed a two-stage training process: the first stage learns motion and prunes the number of Gaussians, and the second fine-tunes the remaining Gaussian attributes to keep the video temporally consistent. This reduces storage requirements while maintaining high video quality and keeping training fast. A companion player then decodes and renders these streams, allowing smooth playback on mobile devices.
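To make the idea of treating dynamic Gaussian data as 2D video frames concrete, the Python sketch below shows one hypothetical way to lay a single per-Gaussian attribute out as an 8-bit image that a standard video encoder could accept. The function names, the min-max 8-bit quantization, and the row-major one-Gaussian-per-pixel layout are illustrative assumptions, not the authors' actual format.

```python
import math
from typing import List, Tuple

# Hypothetical layout: one attribute channel per image, one Gaussian per pixel,
# row-major order. The real V^3 format may differ.

def quantize_to_uint8(values: List[float]) -> Tuple[List[int], float, float]:
    """Min-max quantize floats to integers in 0..255 and return the range used."""
    lo, hi = min(values), max(values)
    scale = (hi - lo) or 1.0  # guard against a constant attribute (hi == lo)
    codes = [round((v - lo) / scale * 255) for v in values]
    return codes, lo, hi

def pack_attribute_image(values: List[float], width: int) -> Tuple[List[List[int]], float, float]:
    """Place quantized values into a width x height grid, zero-padding the last row."""
    codes, lo, hi = quantize_to_uint8(values)
    height = math.ceil(len(codes) / width)
    padded = codes + [0] * (width * height - len(codes))
    image = [padded[r * width:(r + 1) * width] for r in range(height)]
    return image, lo, hi

def unpack_attribute_image(image: List[List[int]], count: int, lo: float, hi: float) -> List[float]:
    """Invert the packing: flatten, drop the padding, and dequantize back to floats."""
    scale = (hi - lo) or 1.0
    flat = [px for row in image for px in row][:count]
    return [lo + code / 255 * scale for code in flat]

# Example: five x-coordinates packed into a 3-pixel-wide image (2 rows).
xs = [0.0, 0.5, 1.0, 0.25, 0.75]
image, lo, hi = pack_attribute_image(xs, width=3)
print(image)  # [[0, 128, 255], [64, 191, 0]]
restored = unpack_attribute_image(image, len(xs), lo, hi)
```

In a scheme like this, each frame of the volumetric video would yield one such image per attribute channel. Keeping each Gaussian at the same pixel across frames is what would let a codec's inter-frame prediction exploit temporal coherence, and 8-bit quantization bounds the per-value reconstruction error by half a quantization step, (hi - lo) / 510.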

Why it matters?

This research is important because it makes advanced 3D video technology accessible on everyday mobile devices. By improving how volumetric videos are streamed and rendered, V^3 opens up new possibilities for entertainment, education, and virtual experiences, enabling users to enjoy immersive content anytime and anywhere.

Abstract

Experiencing high-fidelity volumetric video as seamlessly as 2D video is a long-held dream. However, current dynamic 3D Gaussian Splatting (3DGS) methods, despite their high rendering quality, are difficult to stream to mobile devices because of computational and bandwidth constraints. In this paper, we introduce V^3 (Viewing Volumetric Videos), a novel approach that enables high-quality mobile rendering through the streaming of dynamic Gaussians. Our key innovation is to view dynamic 3DGS as 2D videos, which makes it possible to use hardware video codecs. Additionally, we propose a two-stage training strategy that reduces storage requirements while keeping training fast. The first stage uses hash encoding and a shallow MLP to learn motion, then prunes Gaussians to meet streaming requirements; the second stage fine-tunes the other Gaussian attributes with a residual entropy loss and a temporal loss to improve temporal continuity. By disentangling motion and appearance, this strategy maintains high rendering quality with compact storage. We also design a multi-platform player that decodes and renders 2D Gaussian videos. Extensive experiments demonstrate the effectiveness of V^3: it outperforms other methods and enables high-quality rendering and streaming on common devices, which has not been achieved before. As the first method to stream dynamic Gaussians on mobile devices, V^3 and its companion player offer users an unprecedented volumetric video experience, including smooth scrolling and instant sharing. Our project page with source code is available at https://authoritywang.github.io/v3/.
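The abstract names a residual entropy loss and a temporal loss for the second training stage but does not give their formulas. The Python sketch below illustrates, under assumed definitions, what such terms measure for one attribute across two consecutive frames: a temporal term taken as the mean squared change, and the empirical Shannon entropy of quantized inter-frame residuals, which approximates how many bits per value an entropy coder would need. The function names and the quantization step `step` are hypothetical, and the empirical entropy here is not differentiable, so an actual training loss would need a differentiable proxy.

```python
import math
from collections import Counter
from typing import List

def temporal_loss(prev: List[float], curr: List[float]) -> float:
    """Assumed temporal term: mean squared change of an attribute between frames."""
    return sum((c - p) ** 2 for p, c in zip(prev, curr)) / len(curr)

def residual_entropy_bits(prev: List[float], curr: List[float], step: float = 0.01) -> float:
    """Empirical Shannon entropy H = sum(p * log2(1/p)), in bits per value,
    of inter-frame residuals quantized to integer multiples of `step`.

    Non-differentiable diagnostic: it shows what a residual entropy loss would push down.
    """
    symbols = [round((c - p) / step) for p, c in zip(prev, curr)]
    n = len(symbols)
    counts = Counter(symbols)
    return sum((k / n) * math.log2(n / k) for k in counts.values())

prev = [0.0, 0.1, 0.2, 0.3]
smooth = [0.0, 0.1, 0.2, 0.3]        # attribute unchanged between frames
jittery = [0.05, 0.08, 0.26, 0.21]   # each residual falls into a different bin

print(temporal_loss(prev, smooth), residual_entropy_bits(prev, smooth))
print(temporal_loss(prev, jittery), residual_entropy_bits(prev, jittery))
```

The unchanged frame scores zero on both terms, while the jittery frame needs 2 bits per value because its four residuals land in four distinct bins. Penalizing both terms during fine-tuning would favor small, repetitive residuals, which is the property that makes consecutive 2D Gaussian frames cheap to compress.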