VD3D: Taming Large Video Diffusion Transformers for 3D Camera Control

Sherwin Bahmani, Ivan Skorokhodov, Aliaksandr Siarohin, Willi Menapace, Guocheng Qian, Michael Vasilkovsky, Hsin-Ying Lee, Chaoyang Wang, Jiaxu Zou, Andrea Tagliasacchi, David B. Lindell, Sergey Tulyakov

2024-07-18

Summary

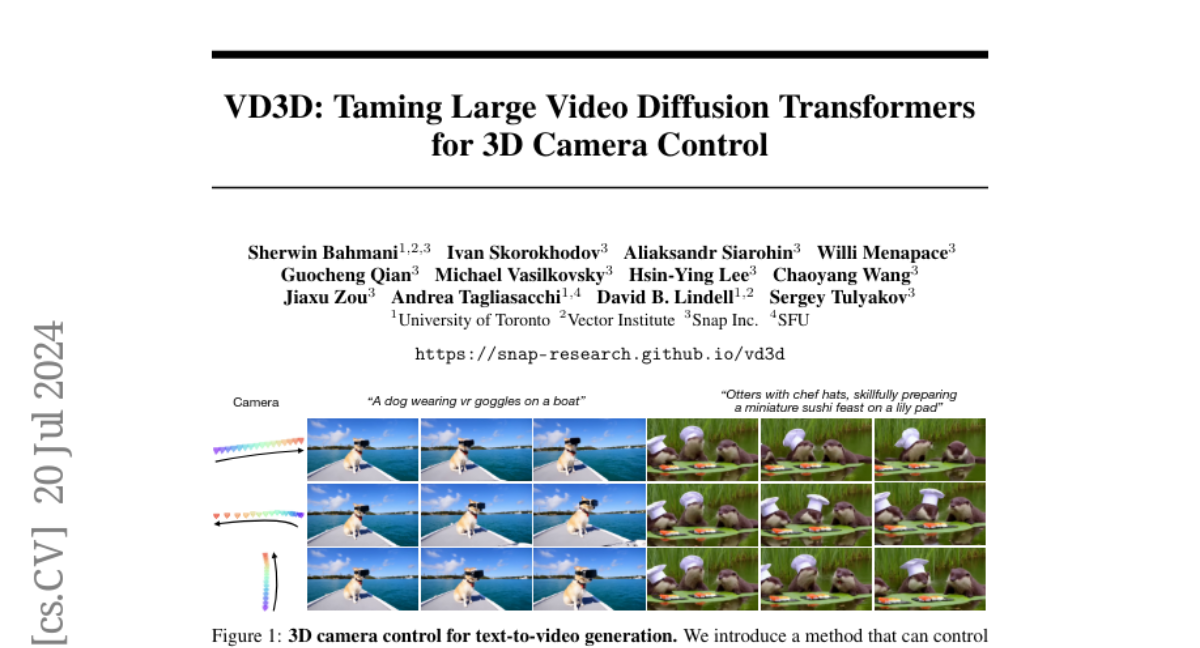

This paper introduces VD3D, a new method that allows for precise control over camera movements in videos generated by large video diffusion transformers.

What's the problem?

While modern models can create realistic videos from text descriptions, they often lack detailed control over how the camera moves during the video. This is important for applications like film-making and visual effects, where specific camera angles and movements can greatly enhance the storytelling and visual experience.

What's the solution?

VD3D addresses this issue with a ControlNet-like conditioning mechanism that incorporates spatiotemporal camera embeddings based on Plücker coordinates, a standard way of representing lines in 3D such as camera rays. This gives the model an explicit description of the camera's position and orientation in each frame. The researchers fine-tuned their model on the RealEstate10K dataset and report state-of-the-art performance for generating videos with controllable camera poses. According to the authors, this is the first approach to enable camera control for transformer-based video diffusion models.
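To make the idea of a Plücker-based camera embedding concrete, here is a minimal NumPy sketch. It assumes a pinhole camera with an intrinsics matrix `K` and a camera-to-world pose `c2w`, and it uses the common convention of storing each pixel's ray as the pair (o × d, d), where o is the camera center and d the unit ray direction. The function name, argument names, and the choice of pixel centers are illustrative assumptions, not details taken from the paper.

```python
import numpy as np

def plucker_embedding(K, c2w, height, width):
    """Per-pixel Plucker ray embedding (o x d, d) for one camera.

    K:   (3, 3) pinhole intrinsics (assumed convention).
    c2w: (4, 4) camera-to-world pose (assumed convention).
    Returns an array of shape (height, width, 6).
    """
    # Pixel-center coordinates; meshgrid's default 'xy' indexing gives (H, W).
    u, v = np.meshgrid(np.arange(width) + 0.5, np.arange(height) + 0.5)
    pixels = np.stack([u, v, np.ones_like(u)], axis=-1)   # (H, W, 3)

    # Back-project pixels to ray directions in the camera frame.
    dirs_cam = pixels @ np.linalg.inv(K).T                # (H, W, 3)

    # Rotate into the world frame and normalize to unit length.
    rotation, origin = c2w[:3, :3], c2w[:3, 3]
    dirs_world = dirs_cam @ rotation.T
    d = dirs_world / np.linalg.norm(dirs_world, axis=-1, keepdims=True)

    # The moment o x d is the same for any point chosen on the ray.
    moment = np.cross(np.broadcast_to(origin, d.shape), d)
    return np.concatenate([moment, d], axis=-1)           # (H, W, 6)
```

Stacking the result over the per-frame poses of a clip, for example `np.stack([plucker_embedding(K, pose, H, W) for pose in poses])`, gives a tensor of shape `(T, H, W, 6)`, which is one plausible reading of a "spatiotemporal" camera embedding.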

Why does it matter?

This research is significant because it enhances the capabilities of video generation models, making them more useful for creators who need precise control over camera movements. By improving how these models work, VD3D can contribute to better content creation in various fields, including movies, games, and virtual reality experiences.

Abstract

Modern text-to-video synthesis models demonstrate coherent, photorealistic generation of complex videos from a text description. However, most existing models lack fine-grained control over camera movement, which is critical for downstream applications related to content creation, visual effects, and 3D vision. Recently, new methods demonstrate the ability to generate videos with controllable camera poses; these techniques leverage pre-trained U-Net-based diffusion models that explicitly disentangle spatial and temporal generation. Still, no existing approach enables camera control for new, transformer-based video diffusion models that process spatial and temporal information jointly. Here, we propose to tame video transformers for 3D camera control using a ControlNet-like conditioning mechanism that incorporates spatiotemporal camera embeddings based on Plücker coordinates. The approach demonstrates state-of-the-art performance for controllable video generation after fine-tuning on the RealEstate10K dataset. To the best of our knowledge, our work is the first to enable camera control for transformer-based video diffusion models.
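The phrase "ControlNet-like conditioning" can be illustrated with a generic sketch. ControlNet-style methods typically inject the new conditioning signal through a zero-initialized projection, so that the pre-trained model's output is unchanged when fine-tuning starts. The toy example below shows only that property. The single-matrix "block", the weight names, and all sizes are hypothetical stand-ins, and it should not be read as VD3D's actual architecture.

```python
import numpy as np

rng = np.random.default_rng(0)

def frozen_block(tokens, W):
    """Hypothetical stand-in for one pre-trained transformer block."""
    return np.tanh(tokens @ W)

def conditioned_block(tokens, cam_tokens, W, W_cam, W_zero):
    """Add camera features to the block output through a projection
    that is zero at initialization (ControlNet-style)."""
    cam_feat = cam_tokens @ W_cam          # trainable camera encoder (assumed)
    return frozen_block(tokens, W) + cam_feat @ W_zero

# Toy sizes, chosen only for illustration.
num_tokens, dim, cam_dim = 8, 16, 6
tokens = rng.normal(size=(num_tokens, dim))          # video tokens
cam_tokens = rng.normal(size=(num_tokens, cam_dim))  # e.g. Plucker features per token
W = rng.normal(size=(dim, dim))                      # frozen pre-trained weights
W_cam = rng.normal(size=(cam_dim, dim))              # new, trainable
W_zero = np.zeros((dim, dim))                        # new, trainable, starts at zero

out = conditioned_block(tokens, cam_tokens, W, W_cam, W_zero)
# With W_zero still all zeros, the camera branch has no effect on the output.
print(np.allclose(out, frozen_block(tokens, W)))
```

As `W_zero` moves away from zero during fine-tuning, the camera branch gradually starts to influence the output, which is the usual motivation for this kind of initialization.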