VerifyBench: Benchmarking Reference-based Reward Systems for Large Language Models

Yuchen Yan, Jin Jiang, Zhenbang Ren, Yijun Li, Xudong Cai, Yang Liu, Xin Xu, Mengdi Zhang, Jian Shao, Yongliang Shen, Jun Xiao, Yueting Zhuang

2025-05-22

Summary

This paper talks about VerifyBench, a new set of tests designed to see how well AI models can use reference-based reward systems to improve their reasoning skills.

What's the problem?

It's challenging to measure if AI models are actually learning to reason better when they get feedback based on comparing their answers to correct examples, and current tools don't test this thoroughly.

What's the solution?

The researchers created two benchmarks, VerifyBench and VerifyBench-Hard, which are collections of reasoning tasks that help evaluate how accurately these reward systems guide AI models during training.

Why it matters?

This matters because better testing tools lead to smarter AI that can reason more reliably, which is important for applications like tutoring, problem-solving, and decision-making.

Abstract

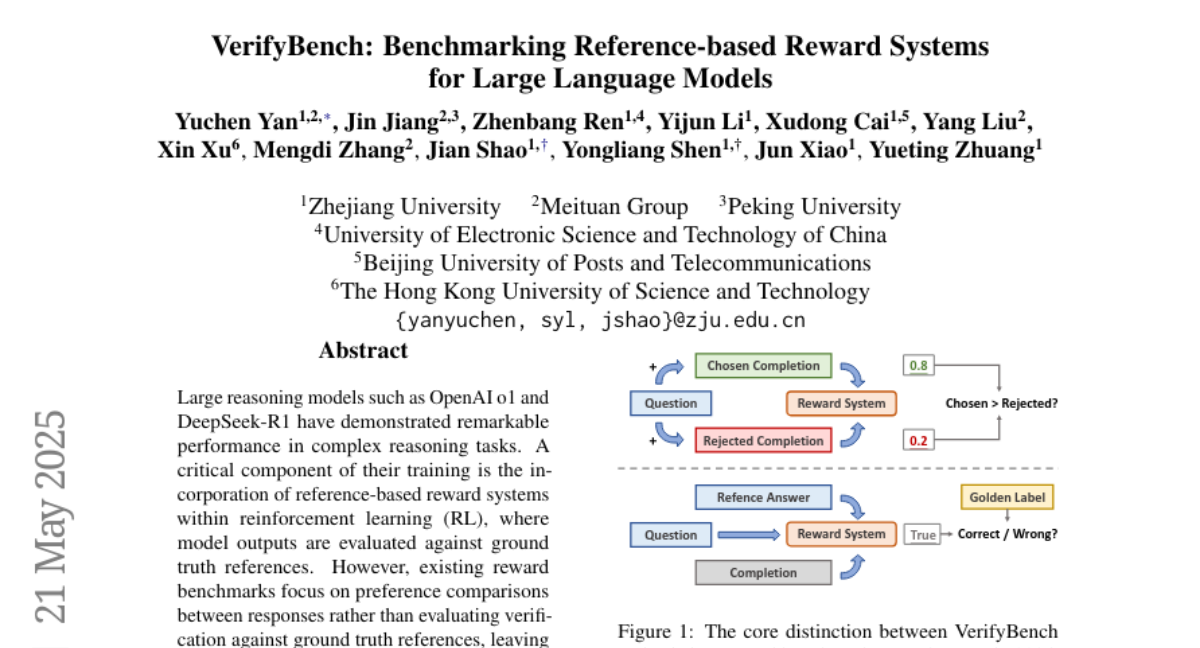

Two new benchmarks, VerifyBench and VerifyBench-Hard, are introduced to evaluate the accuracy of reference-based reward systems in reinforcement learning for reasoning tasks.