VidPanos: Generative Panoramic Videos from Casual Panning Videos

Jingwei Ma, Erika Lu, Roni Paiss, Shiran Zada, Aleksander Holynski, Tali Dekel, Brian Curless, Michael Rubinstein, Forrester Cole

2024-10-18

Summary

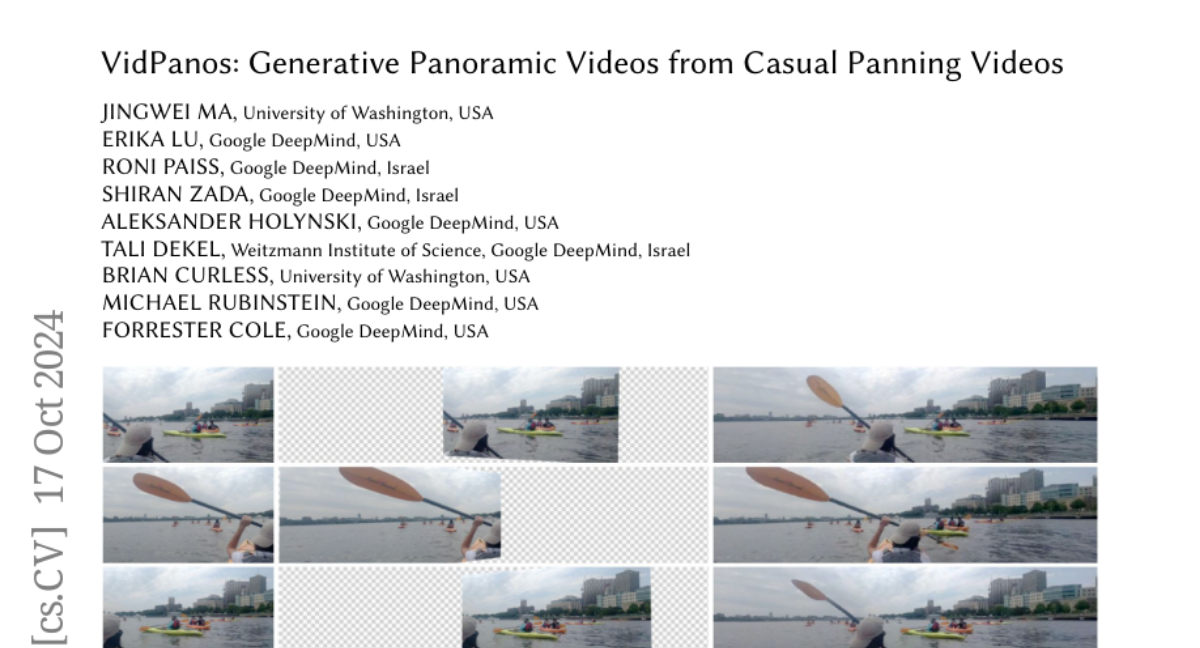

This paper presents VidPanos, a method that turns casually captured panning videos into panoramic videos, giving a wide-angle view of dynamic scenes.

What's the problem?

When people capture video by sweeping the camera across a scene (panning), the camera sees only part of the scene at any moment. This makes it hard to build a panoramic view that shows everything happening in the scene, especially when objects are moving. Traditional panorama stitching works well only for stationary scenes and produces a single still image, so it cannot represent moving content.

What's the solution?

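To solve this problem, the authors developed VidPanos, which synthesizes a panoramic video from a standard panning video, as if the original had been shot with a wide-angle camera. They treat this as a 'space-time outpainting' problem: at each moment the camera observes only part of the scene, so the system must fill in the rest of the panorama for every frame, producing a panoramic video the same length as the input. Filling these regions consistently requires a strong prior over video content and motion, so VidPanos builds on generative video models. Because existing generative models do not directly handle panorama completion, the authors use video generation as one component of a larger system designed to exploit the models' strengths while working around their limitations. The system handles a range of real-world scenes, including people, vehicles, and flowing water, as well as stationary background features.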
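To make the 'space-time outpainting' framing concrete, here is a minimal NumPy sketch of the problem setup only, not the VidPanos method. It assumes the camera motion is a pure horizontal translation with known integer offsets (real panning video needs full frame alignment), and the function name `build_space_time_volume` is hypothetical. It places each input frame into a panoramic canvas and marks which pixels are observed; the unobserved pixels (`~known`) are the space-time region a generative model would have to fill.

```python
import numpy as np


def build_space_time_volume(frames, offsets, pano_width):
    """Place each frame of a panning video into a panoramic canvas.

    Illustrative assumptions (hypothetical, not the authors' pipeline):
      frames:     array of shape (T, H, W, C), the input panning video
      offsets:    length-T sequence of integer x-positions (pixels) of each
                  frame's left edge in panorama coordinates
      pano_width: width of the output panorama in pixels
    Returns:
      volume: (T, H, pano_width, C) canvas, zero wherever nothing was observed
      known:  (T, H, pano_width) boolean mask, True where a frame supplies pixels
    """
    T, H, W, C = frames.shape
    volume = np.zeros((T, H, pano_width, C), dtype=frames.dtype)
    known = np.zeros((T, H, pano_width), dtype=bool)
    for t, x0 in enumerate(offsets):
        # Each frame must fit entirely inside the panorama in this simple model.
        if x0 < 0 or x0 + W > pano_width:
            raise ValueError(f"frame {t} falls outside the panorama")
        volume[t, :, x0:x0 + W] = frames[t]
        known[t, :, x0:x0 + W] = True
    return volume, known


# Usage: a 4-frame synthetic video panning 5 pixels to the right per frame.
rng = np.random.default_rng(0)
frames = rng.random((4, 8, 10, 3), dtype=np.float32)
volume, known = build_space_time_volume(frames, [0, 5, 10, 15], pano_width=25)

# Fraction of the space-time volume that is unobserved and must be synthesized.
missing_fraction = float((~known).mean())
print(missing_fraction)
```

Even in this toy case, each frame covers only 10 of the 25 panorama columns, so most of every panoramic frame is unobserved. This is why consistent completion needs a prior over how scene content moves over time rather than per-frame stitching.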

Why does it matter?

This research matters because it lets anyone create immersive panoramic videos with an ordinary camera, without a wide-angle lens or other special equipment. By turning simple panning videos into panoramic videos, VidPanos could enhance storytelling in travel videos, documentaries, and virtual reality applications, and make wide-angle video capture accessible to casual users.

Abstract

Panoramic image stitching provides a unified, wide-angle view of a scene that extends beyond the camera's field of view. Stitching frames of a panning video into a panoramic photograph is a well-understood problem for stationary scenes, but when objects are moving, a still panorama cannot capture the scene. We present a method for synthesizing a panoramic video from a casually-captured panning video, as if the original video were captured with a wide-angle camera. We pose panorama synthesis as a space-time outpainting problem, where we aim to create a full panoramic video of the same length as the input video. Consistent completion of the space-time volume requires a powerful, realistic prior over video content and motion, for which we adapt generative video models. Existing generative models do not, however, immediately extend to panorama completion, as we show. We instead apply video generation as a component of our panorama synthesis system, and demonstrate how to exploit the strengths of the models while minimizing their limitations. Our system can create video panoramas for a range of in-the-wild scenes including people, vehicles, and flowing water, as well as stationary background features.