VisualCloze: A Universal Image Generation Framework via Visual In-Context Learning

Zhong-Yu Li, Ruoyi Du, Juncheng Yan, Le Zhuo, Zhen Li, Peng Gao, Zhanyu Ma, Ming-Ming Cheng

2025-04-11

Summary

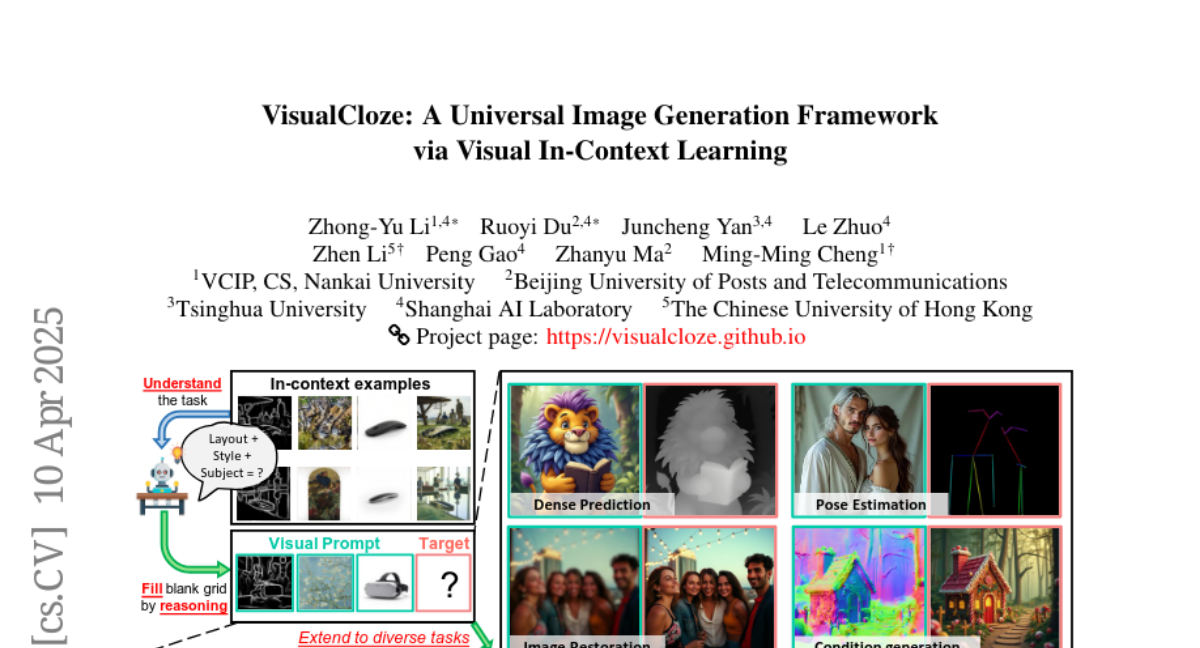

This paper introduces VisualCloze, a new system for generating images that can handle many different types of image tasks using a single model. Instead of needing a separate model for each kind of image generation job, VisualCloze can understand what to do by looking at examples, making it much more flexible and efficient.

What's the problem?

The main issue is that most current image generation models are built for specific tasks, so if you want to do something new or different, you usually need to train a whole new model. Universal models that try to do everything face problems like unclear instructions, not enough related examples to learn from, and difficulties in designing a model that works for all kinds of tasks.

What's the solution?

VisualCloze solves this by letting the model learn from visual examples instead of just written instructions, which helps it figure out what kind of image to make without confusion. The researchers also created a special dataset called Graph200K, which is organized like a network of related tasks, so the model can learn to handle lots of different situations and transfer what it learns from one task to another. On top of that, they found a way to use existing models that are really good at filling in missing parts of images, so VisualCloze can generate images without needing to change the basic design.

Why it matters?

This work is important because it makes image generation much more flexible and powerful. With VisualCloze, you can teach the model new tasks just by showing it a few examples, without retraining or making new models. This opens up creative possibilities for artists, designers, and anyone who wants to generate images for different needs, all with less effort and greater accuracy.

Abstract

Recent progress in diffusion models significantly advances various image generation tasks. However, the current mainstream approach remains focused on building task-specific models, which have limited efficiency when supporting a wide range of different needs. While universal models attempt to address this limitation, they face critical challenges, including generalizable task instruction, appropriate task distributions, and unified architectural design. To tackle these challenges, we propose VisualCloze, a universal image generation framework, which supports a wide range of in-domain tasks, generalization to unseen ones, unseen unification of multiple tasks, and reverse generation. Unlike existing methods that rely on language-based task instruction, leading to task ambiguity and weak generalization, we integrate visual in-context learning, allowing models to identify tasks from visual demonstrations. Meanwhile, the inherent sparsity of visual task distributions hampers the learning of transferable knowledge across tasks. To this end, we introduce Graph200K, a graph-structured dataset that establishes various interrelated tasks, enhancing task density and transferable knowledge. Furthermore, we uncover that our unified image generation formulation shared a consistent objective with image infilling, enabling us to leverage the strong generative priors of pre-trained infilling models without modifying the architectures.