What If We Recaption Billions of Web Images with LLaMA-3?

Xianhang Li, Haoqin Tu, Mude Hui, Zeyu Wang, Bingchen Zhao, Junfei Xiao, Sucheng Ren, Jieru Mei, Qing Liu, Huangjie Zheng, Yuyin Zhou, Cihang Xie

2024-06-13

Summary

This paper explores how to improve the quality of image descriptions on the internet by using a powerful AI model called LLaMA-3. The authors aim to enhance the training of AI systems that work with both images and text by creating a new dataset from billions of web images.

What's the problem?

Many image-text pairs collected from the web are noisy and inaccurate, which makes it hard for AI models to learn effectively. Previous research has shown that improving the text descriptions can help AI models perform better in tasks like generating images from text. However, most large-scale efforts to improve these datasets are not publicly available, limiting access for researchers.

What's the solution?

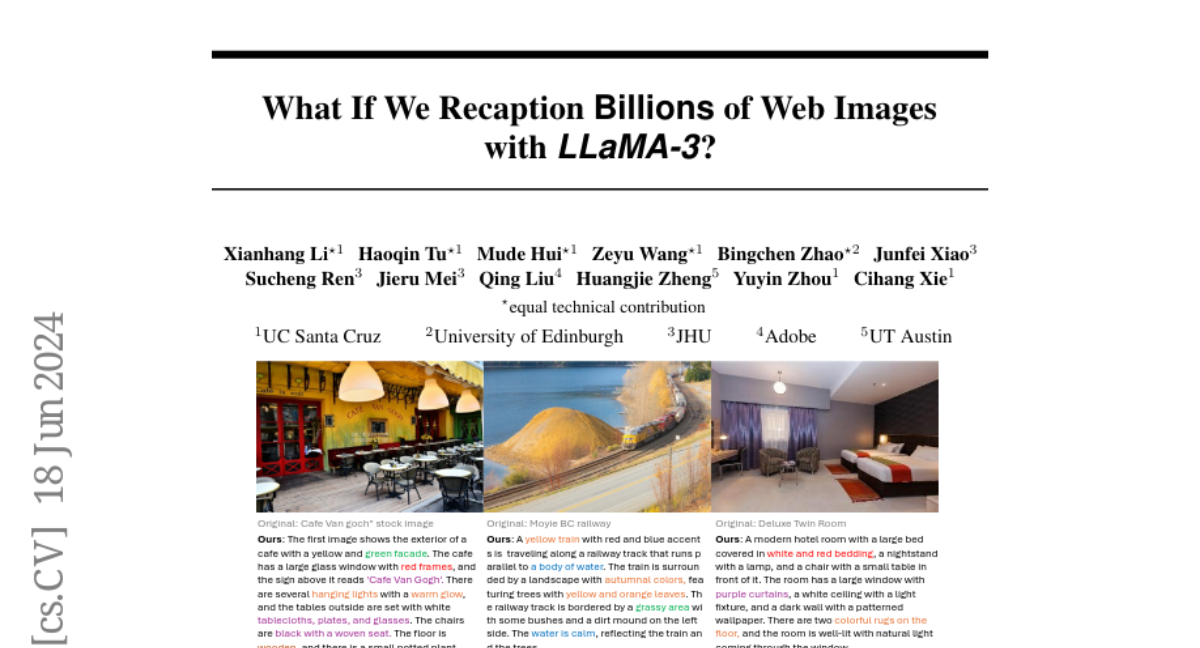

The authors developed a method to recaption 1.3 billion images from a curated dataset called DataComp-1B using the LLaMA-3 model. They fine-tuned this model to generate better captions for the images, resulting in a new dataset called Recap-DataComp-1B. This enhanced dataset significantly improves the training of vision-language models, leading to better performance in tasks like cross-modal retrieval and generating images that closely match user instructions.

Why it matters?

This work is important because it makes high-quality training data accessible to researchers and developers, which can lead to better AI systems that understand and generate visual content based on text. By releasing the Recap-DataComp-1B dataset, the authors hope to encourage further advancements in AI research and application, particularly in open-source communities.

Abstract

Web-crawled image-text pairs are inherently noisy. Prior studies demonstrate that semantically aligning and enriching textual descriptions of these pairs can significantly enhance model training across various vision-language tasks, particularly text-to-image generation. However, large-scale investigations in this area remain predominantly closed-source. Our paper aims to bridge this community effort, leveraging the powerful and open-sourced LLaMA-3, a GPT-4 level LLM. Our recaptioning pipeline is simple: first, we fine-tune a LLaMA-3-8B powered LLaVA-1.5 and then employ it to recaption 1.3 billion images from the DataComp-1B dataset. Our empirical results confirm that this enhanced dataset, Recap-DataComp-1B, offers substantial benefits in training advanced vision-language models. For discriminative models like CLIP, we observe enhanced zero-shot performance in cross-modal retrieval tasks. For generative models like text-to-image Diffusion Transformers, the generated images exhibit a significant improvement in alignment with users' text instructions, especially in following complex queries. Our project page is https://www.haqtu.me/Recap-Datacomp-1B/