What the Harm? Quantifying the Tangible Impact of Gender Bias in Machine Translation with a Human-centered Study

Beatrice Savoldi, Sara Papi, Matteo Negri, Ana Guerberof, Luisa Bentivogli

2024-10-02

Summary

This paper explores the issue of gender bias in machine translation (MT) and its real-world impacts, emphasizing the need for human-centered assessments to understand how bias affects users.

What's the problem?

Gender bias in MT can lead to unfair translations that reinforce stereotypes and create disparities between how different genders are represented. Current evaluations often rely on automated methods that do not accurately reflect the experiences of real users, making it difficult to understand the true impact of these biases on people.

What's the solution?

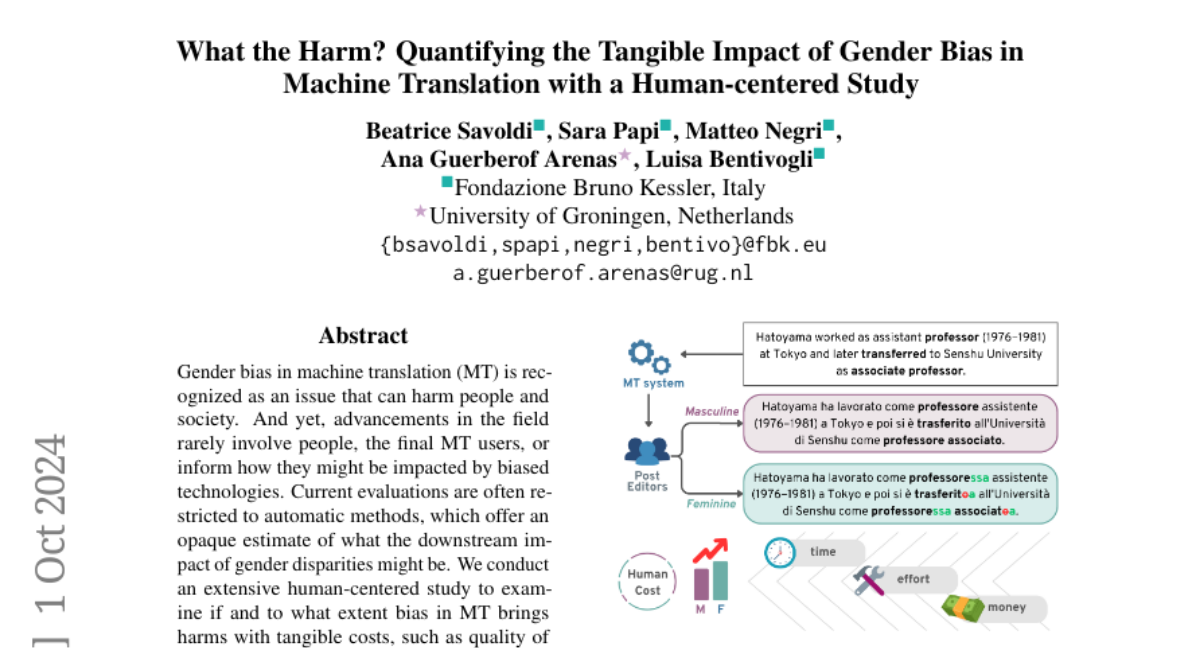

The researchers conducted a human-centered study in which 90 participants post-edited machine-translated outputs to ensure correct gender translation, across multiple datasets, languages, and types of users. Post-editing toward feminine forms demanded significantly more technical and temporal effort than post-editing toward masculine forms, and this extra effort also corresponded to higher financial costs. Existing bias measurements, however, did not reflect these disparities.
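The summary does not say how effort was scored. The sketch below rests on several assumptions. Technical effort is approximated by an HTER-style word-level edit rate between an MT output and its post-edit (simplified: whitespace tokens, no block shifts). Temporal effort is the seconds spent per segment. Financial cost is time multiplied by a hypothetical hourly rate. The function names, the rate, and the toy Italian segments are illustrative only. They are not the study's data, code, or exact metrics.

```python
from statistics import mean


def word_edit_distance(hyp: list[str], ref: list[str]) -> int:
    """Token-level Levenshtein distance (insertions, deletions, substitutions)."""
    prev = list(range(len(ref) + 1))
    for i, h in enumerate(hyp, start=1):
        curr = [i] + [0] * len(ref)
        for j, r in enumerate(ref, start=1):
            cost = 0 if h == r else 1
            curr[j] = min(
                prev[j] + 1,         # delete token h from the MT output
                curr[j - 1] + 1,     # insert token r from the post-edit
                prev[j - 1] + cost,  # substitute (or keep, if identical)
            )
        prev = curr
    return prev[-1]


def edit_rate(mt_output: str, post_edit: str) -> float:
    """Edits needed to turn the MT output into its post-edit, per post-edited word.
    Assumption: a simplified HTER-like proxy without shift operations."""
    hyp, ref = mt_output.split(), post_edit.split()
    return word_edit_distance(hyp, ref) / max(len(ref), 1)


def effort_summary(segments: list[tuple[str, str, float]], hourly_rate: float) -> dict:
    """Summarize effort for (mt_output, post_edit, seconds_spent) triples.
    hourly_rate is a hypothetical post-editing rate used to convert time into cost."""
    rates = [edit_rate(mt, pe) for mt, pe, _ in segments]
    seconds = sum(s for _, _, s in segments)
    return {
        "mean_edit_rate": mean(rates),
        "total_seconds": seconds,
        "cost": seconds * hourly_rate / 3600,
    }


if __name__ == "__main__":
    # Toy, invented segments: the MT system defaults to the masculine form,
    # so only the feminine target requires edits.
    feminine = [("Il dottore è arrivato", "La dottoressa è arrivata", 360)]
    masculine = [("Il dottore è arrivato", "Il dottore è arrivato", 120)]
    print("feminine:", effort_summary(feminine, hourly_rate=30))
    print("masculine:", effort_summary(masculine, hourly_rate=30))
```

In this toy case the feminine post-edit needs three token substitutions out of four words (edit rate 0.75), while the masculine output is accepted unchanged (edit rate 0.0). Grouping segments by target gender and comparing these summaries is one simple way to express a quality-of-service gap in edits, time, and money.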

Why does it matter?

This research is important because it shows that gender bias in translation technologies has tangible costs for users, such as quality-of-service gaps between women and men. By advocating for human-centered approaches to measuring bias, the study encourages improvements that would make MT systems more equitable across genders.

Abstract

Gender bias in machine translation (MT) is recognized as an issue that can harm people and society. And yet, advancements in the field rarely involve people, the final MT users, or inform how they might be impacted by biased technologies. Current evaluations are often restricted to automatic methods, which offer an opaque estimate of what the downstream impact of gender disparities might be. We conduct an extensive human-centered study to examine if and to what extent bias in MT brings harms with tangible costs, such as quality of service gaps across women and men. To this aim, we collect behavioral data from 90 participants, who post-edited MT outputs to ensure correct gender translation. Across multiple datasets, languages, and types of users, our study shows that feminine post-editing demands significantly more technical and temporal effort, also corresponding to higher financial costs. Existing bias measurements, however, fail to reflect the found disparities. Our findings advocate for human-centered approaches that can inform the societal impact of bias.