Which Agent Causes Task Failures and When? On Automated Failure Attribution of LLM Multi-Agent Systems

Shaokun Zhang, Ming Yin, Jieyu Zhang, Jiale Liu, Zhiguang Han, Jingyang Zhang, Beibin Li, Chi Wang, Huazheng Wang, Yiran Chen, Qingyun Wu

2025-05-07

Summary

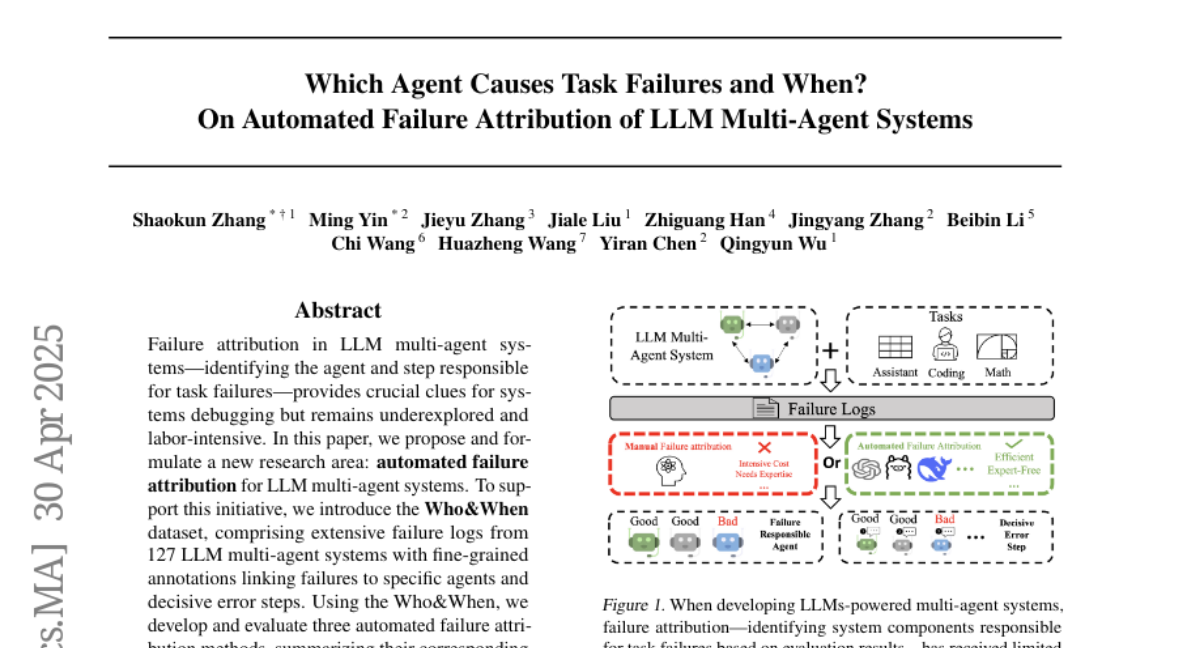

This paper looks at how to figure out which AI agent in a team of large language models (LLMs) is responsible for a task failing, and at what point the failure happens. The researchers use a special dataset called Who&When to test different methods for automatically identifying the agent and the exact step where things go wrong. The best method they found can sometimes identify the right agent, but it struggles to accurately pinpoint the step where the failure occurs.

What's the problem?

When multiple LLM agents work together on a complex task, it's hard to tell which agent caused a mistake or failure and when that mistake happened. Without this information, it's difficult to improve these systems or fix errors, because you don't know exactly where things broke down.

What's the solution?

The researchers created and used the Who&When dataset, which is designed to help track both which agent caused a failure and at what step it happened. They tested different automated methods on this dataset to see how well they could identify the responsible agent and the timing of the failure. Their best method was able to get a moderate score for identifying the agent, but it didn't do well at figuring out the exact step.

Why it matters?

Understanding which agent is responsible for a failure and when it happens is crucial for making multi-agent LLM systems more reliable. If developers can pinpoint the source of errors, they can improve the design, training, and coordination of these AI agents, leading to better performance and fewer mistakes in real-world applications.

Abstract

Automated failure attribution in LLM multi-agent systems is proposed using the Who&When dataset, with the best method achieving moderate agent accuracy but poor step accuracy.