X-Fusion: Introducing New Modality to Frozen Large Language Models

Sicheng Mo, Thao Nguyen, Xun Huang, Siddharth Srinivasan Iyer, Yijun Li, Yuchen Liu, Abhishek Tandon, Eli Shechtman, Krishna Kumar Singh, Yong Jae Lee, Bolei Zhou, Yuheng Li

2025-04-30

Summary

This paper talks about X-Fusion, a new method that lets large language models, which usually only understand text, also work with images without changing how the language part of the AI works.

What's the problem?

Most big language models are really good at understanding and generating text, but they can't handle images or other types of information without being retrained, which can mess up their original abilities.

What's the solution?

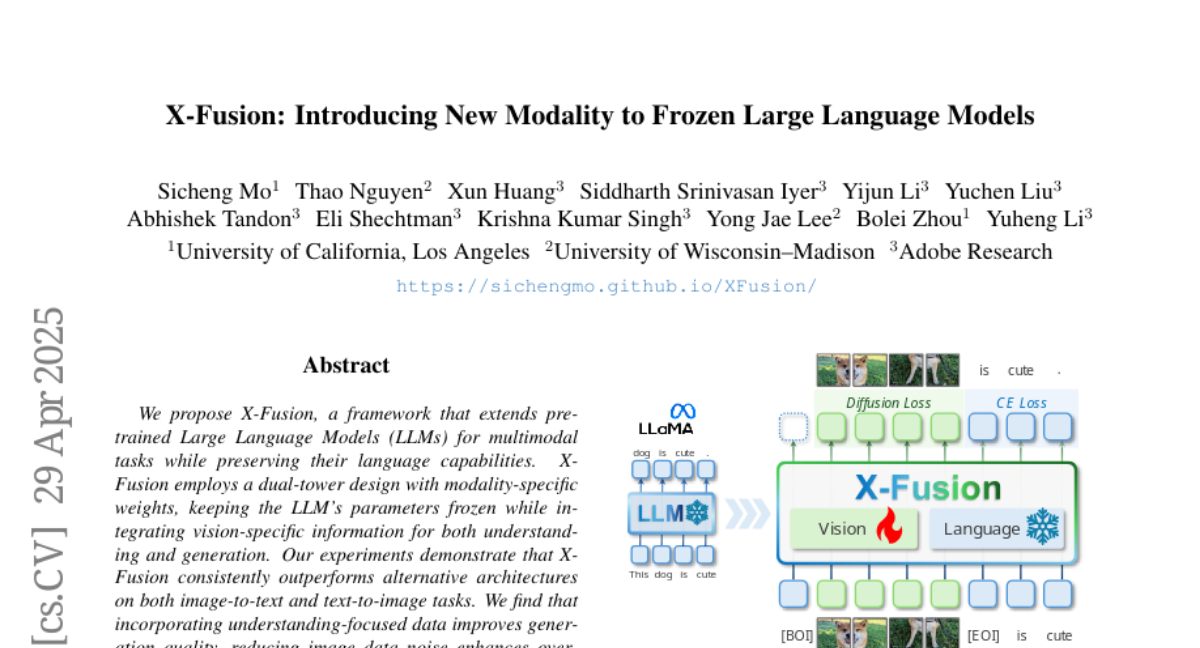

The researchers designed a system that adds a new part specifically for images, called a dual-tower design, which works alongside the language model. They keep the language model frozen, meaning its original skills stay the same, while the new part helps it understand and use visual information.

Why it matters?

This matters because it allows powerful text-based AI to handle more types of tasks, like answering questions about pictures, without losing what it already knows about language. This could make AI much more versatile and useful in everyday life.

Abstract

X-Fusion enhances pretrained LLMs for multimodal tasks by integrating vision-specific information through a dual-tower design and freezes the LLM's parameters for consistency in understanding and generation.