XMusic: Towards a Generalized and Controllable Symbolic Music Generation Framework

Sida Tian, Can Zhang, Wei Yuan, Wei Tan, Wenjie Zhu

2025-01-16

Summary

This paper introduces XMusic, an AI system that generates high-quality symbolic music from a range of inputs: images, videos, text, tags, or even humming. It is designed to produce music that matches a specified emotion and style, something earlier AI music generators struggled to do.

What's the problem?

While AI has gotten really good at creating realistic images and text, it's been harder to make AI that can compose music that sounds as good as what humans create. The main challenge has been making AI understand and control the emotions in music, and ensuring the music it creates is high quality.

What's the solution?

The researchers created XMusic, which has two main parts: XProjector and XComposer. XProjector takes different types of input (like pictures or humming) and translates them into musical elements such as emotion, genre, rhythm, and notes. XComposer then creates the music with a Generator and uses a Selector to pick out the high-quality results. They also built XMIDI, a large collection of 108,023 MIDI files labeled with emotion and genre, to help train the AI.
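
To make the division of labor concrete, here is a minimal Python sketch of the project-generate-select flow described above. It is not the authors' implementation: every name (`project_prompt`, `generate_candidates`, `toy_quality`, `compose`) is hypothetical, the "generator" is a random walk over MIDI pitches, and the "selector" is a hand-written smoothness heuristic standing in for the learned model in the paper.

```python
import random
from dataclasses import dataclass
from typing import List


@dataclass
class MusicElements:
    """Symbolic targets that the projection step hands to the composer."""
    emotion: str
    genre: str


def project_prompt(prompt: dict) -> MusicElements:
    # Hypothetical stand-in for XProjector. A real system would analyze an
    # image, video, text, tag, or hummed melody; here the prompt is a dict of tags.
    return MusicElements(
        emotion=prompt.get("emotion", "neutral"),
        genre=prompt.get("genre", "pop"),
    )


def generate_candidates(elements: MusicElements, count: int, seed: int = 0) -> List[List[int]]:
    # Hypothetical stand-in for the Generator: random walks over MIDI pitches.
    # Assumption for illustration only: "happy" melodies start an octave higher.
    rng = random.Random(seed)
    start = 72 if elements.emotion == "happy" else 60
    candidates = []
    for _ in range(count):
        pitch, melody = start, []
        for _ in range(16):
            step = rng.choice([-4, -2, -1, 0, 1, 2, 4])
            pitch = min(84, max(48, pitch + step))  # keep within a 3-octave range
            melody.append(pitch)
        candidates.append(melody)
    return candidates


def toy_quality(melody: List[int]) -> float:
    # Toy heuristic, NOT the learned Selector: smaller average leaps score higher.
    leaps = [abs(b - a) for a, b in zip(melody, melody[1:])]
    return -sum(leaps) / len(leaps)


def compose(prompt: dict, count: int = 8, seed: int = 0) -> List[int]:
    # Project the prompt, generate several candidates, keep the best-scoring one.
    elements = project_prompt(prompt)
    candidates = generate_candidates(elements, count, seed)
    return max(candidates, key=toy_quality)
```

Calling `compose({"emotion": "happy", "genre": "pop"})` returns a list of 16 MIDI pitch numbers. The point of the sketch is the shape of the pipeline: generating several candidates and keeping only the best-scoring one is what lets a selection step raise output quality without changing the generator.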

Why does it matter?

This matters because it could change how people create and use music. Imagine humming a tune and having an AI turn it into a full song, or having AI create fitting background music for a video just by analyzing the visuals. It could help musicians, filmmakers, and content creators make music more easily and quickly. And because the generated music is emotionally expressive and high quality, it opens up possibilities for personalized music experiences and could even be useful in fields like music therapy or education.

Abstract

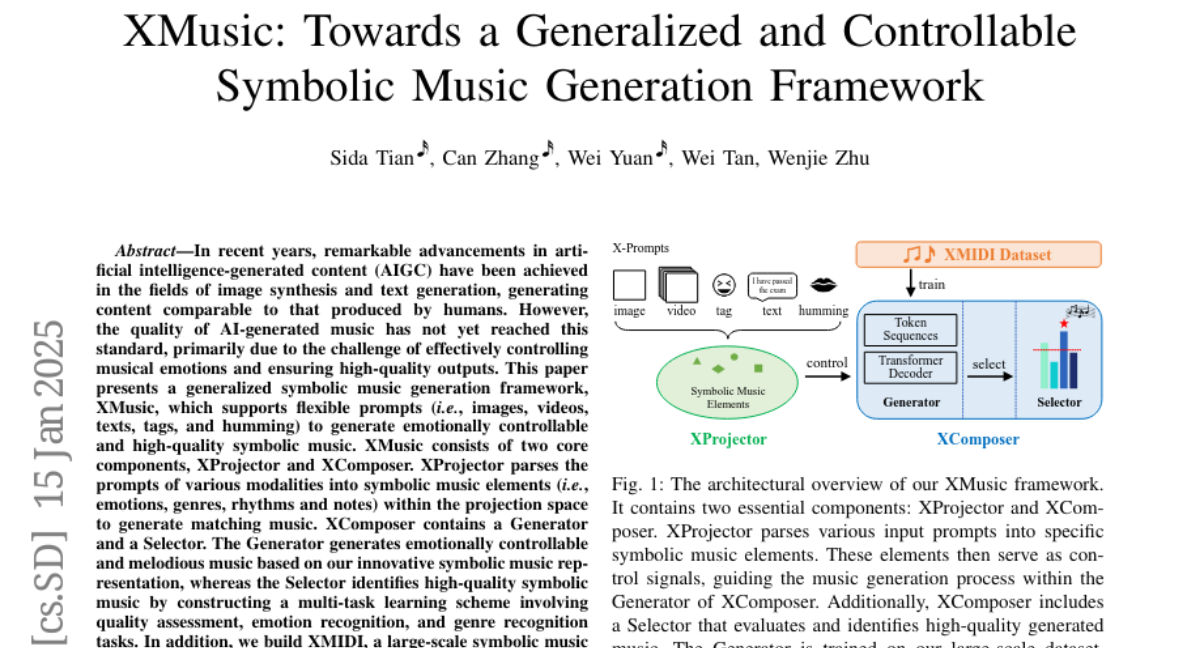

In recent years, remarkable advancements in artificial intelligence-generated content (AIGC) have been achieved in the fields of image synthesis and text generation, generating content comparable to that produced by humans. However, the quality of AI-generated music has not yet reached this standard, primarily due to the challenge of effectively controlling musical emotions and ensuring high-quality outputs. This paper presents a generalized symbolic music generation framework, XMusic, which supports flexible prompts (i.e., images, videos, texts, tags, and humming) to generate emotionally controllable and high-quality symbolic music. XMusic consists of two core components, XProjector and XComposer. XProjector parses the prompts of various modalities into symbolic music elements (i.e., emotions, genres, rhythms and notes) within the projection space to generate matching music. XComposer contains a Generator and a Selector. The Generator generates emotionally controllable and melodious music based on our innovative symbolic music representation, whereas the Selector identifies high-quality symbolic music by constructing a multi-task learning scheme involving quality assessment, emotion recognition, and genre recognition tasks. In addition, we build XMIDI, a large-scale symbolic music dataset that contains 108,023 MIDI files annotated with precise emotion and genre labels. Objective and subjective evaluations show that XMusic significantly outperforms the current state-of-the-art methods with impressive music quality. Our XMusic has been awarded as one of the nine Highlights of Collectibles at WAIC 2023. The project homepage of XMusic is https://xmusic-project.github.io.