Best GPU For AI and Machine Learning

In machine learning, the choice of hardware has a large effect on how quickly you can train, test, and deploy models. At the heart of most setups is the Graphics Processing Unit (GPU), a workhorse that shortens training times and makes large computations practical. With so many options on the market, finding the right GPU for your machine learning work can be challenging. In this blog post, we walk through the top contenders in the GPU arena so you can make an informed decision. But first, what is a GPU, and how does it affect machine learning?

What is a GPU for Machine Learning?

A GPU, or Graphics Processing Unit, is a specialized electronic circuit originally designed to handle complex calculations required for rendering graphics in computer games and other visual applications. However, the capabilities of GPUs have extended far beyond graphics processing. In the realm of machine learning, GPUs have become indispensable tools due to their parallel processing capabilities, which make them highly efficient for performing the intense mathematical computations required by various machine learning algorithms.

Machine learning involves training models to recognize patterns in data and make predictions or decisions based on those patterns. This training process often involves working with large datasets and complex mathematical operations, such as matrix multiplications and convolutions. Traditional central processing units (CPUs) are optimized for sequential processing, making them less efficient for these types of highly parallel tasks. This is where GPUs come into play.

Modern GPUs are designed with thousands of smaller cores that can handle multiple calculations simultaneously. This architecture allows GPUs to perform tasks like matrix operations, which are fundamental to many machine learning algorithms, much faster than traditional CPUs. This parallel processing capability accelerates training times and enables researchers and practitioners to iterate and experiment more rapidly.
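
To make "matrix operations parallelize well" concrete, here is a minimal pure-Python sketch (no GPU involved). Every element C[i][j] of a matrix product depends only on row i of A and column j of B, so each of the M×N output elements could, in principle, be computed by a separate GPU thread. The function names are illustrative, not part of any library.

```python
from typing import List

Matrix = List[List[float]]

def output_element(a: Matrix, b: Matrix, i: int, j: int) -> float:
    """Compute C[i][j] = sum_k A[i][k] * B[k][j]; reads only row i of A and column j of B."""
    return sum(a[i][k] * b[k][j] for k in range(len(b)))

def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Naive matrix product. The two loops are a sequential stand-in for
    rows * cols independent work items that a GPU could run concurrently."""
    rows, cols = len(a), len(b[0])
    return [[output_element(a, b, i, j) for j in range(cols)] for i in range(rows)]

a = [[1.0, 2.0], [3.0, 4.0]]
b = [[5.0, 6.0], [7.0, 8.0]]
print(matmul(a, b))  # [[19.0, 22.0], [43.0, 50.0]]
```

Because no output element depends on another, the order in which they are computed does not matter, which is exactly the property that lets a GPU spread the work across thousands of cores.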

NVIDIA was one of the pioneers in recognizing the potential of GPUs for general-purpose computing beyond graphics. They introduced CUDA (Compute Unified Device Architecture), a programming platform that allows developers to harness the power of GPUs for various computational tasks, including machine learning. This move revolutionized the field by providing a way to leverage the parallelism of GPUs for accelerating complex calculations.

Over time, GPUs have evolved to include specialized hardware components, such as Tensor Cores, which are optimized for tensor operations commonly used in deep learning models. These enhancements have further boosted the performance of GPUs in machine-learning tasks.

GPUs have become a cornerstone in the development and deployment of machine learning models. They enable researchers and practitioners to train larger and more complex models in a reasonable amount of time, making it possible to tackle a wide range of problems, from image and speech recognition to natural language processing and drug discovery.

In summary, a GPU for machine learning is a powerful processing unit that accelerates the training and inference of machine learning models by performing complex mathematical computations in parallel. Its ability to handle massive amounts of data and calculations has been a game-changer in the field, enabling advancements and breakthroughs in artificial intelligence research and applications.

Now, let's find out how a GPU works.

How do GPUs work?

At their core, Graphics Processing Units (GPUs) are designed to handle a large number of simple calculations in parallel, making them exceptionally efficient for tasks that involve repetitive computations, such as rendering graphics or processing data in machine learning algorithms. To understand how GPUs work, let's delve into the key components and processes that enable their parallel processing prowess:

Architecture:

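Modern GPUs consist of thousands of smaller processing cores, grouped into Streaming Multiprocessors (SMs, NVIDIA's term) or Compute Units (CUs, AMD's term). The cores in an SM do not each run an independent program: threads are scheduled in groups (warps of 32 threads on NVIDIA GPUs, wavefronts of 32 or 64 threads on AMD GPUs) that execute the same instruction together. This contrasts with traditional Central Processing Units (CPUs), which typically have far fewer cores, each optimized to run a single stream of instructions as quickly as possible.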

Parallelism:

The primary strength of GPUs lies in their ability to perform a vast number of calculations concurrently. This parallelism is achieved through the arrangement of cores, which enables the GPU to handle many tasks simultaneously. For example, a desktop CPU has a few to a few dozen cores, each running one or two threads at a time, whereas a GPU can keep tens of thousands of threads in flight.

SIMD Architecture:

GPUs apply a single instruction to many data elements at once. This model is known as Single Instruction, Multiple Data (SIMD); NVIDIA calls its variant Single Instruction, Multiple Threads (SIMT), in which each thread has its own registers but the threads of a warp share one instruction stream. In graphics rendering, this is used to process a large number of pixels simultaneously. In machine learning, it is used for operations on matrices, where each thread computes a portion of the result.
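
Lockstep execution has a practical consequence: when threads in the same warp take different sides of an `if`, the hardware typically runs both paths one after the other, masking off the threads that did not take each path. The pure-Python simulation below is a simplified model of that behavior, not a description of how any particular GPU is built; the warp size of 32 matches NVIDIA GPUs, and the function name `run_warp` is hypothetical.

```python
from typing import Callable, List, Tuple

WARP_SIZE = 32  # NVIDIA warps have 32 threads; AMD wavefronts may have 32 or 64

def run_warp(data: List[float],
             cond: Callable[[float], bool],
             then_op: Callable[[float], float],
             else_op: Callable[[float], float]) -> Tuple[List[float], int]:
    """Simulate one warp executing `x = then_op(x) if cond(x) else else_op(x)`.

    Returns the results and the number of sequential passes the warp needed:
    1 if all lanes agree on the branch, 2 if the branch diverges.
    """
    assert len(data) <= WARP_SIZE
    mask = [cond(x) for x in data]          # every lane evaluates the condition
    out = list(data)
    passes = 0
    if any(mask):                           # pass 1: only lanes with mask=True are active
        out = [then_op(x) if m else y for x, y, m in zip(data, out, mask)]
        passes += 1
    if not all(mask):                       # pass 2: the remaining lanes are active
        out = [else_op(x) if not m else y for x, y, m in zip(data, out, mask)]
        passes += 1
    return out, passes

uniform, p1 = run_warp([1.0] * 32, lambda x: x > 0, lambda x: x * 2, lambda x: -x)
mixed, p2 = run_warp([1.0, -1.0] * 16, lambda x: x > 0, lambda x: x * 2, lambda x: -x)
print(p1, p2)  # 1 2
```

The divergent warp needs two passes for the same amount of useful work, which is why GPU code tends to avoid data-dependent branching inside tight loops.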

Memory Hierarchy:

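GPUs have multiple levels of memory with different sizes and speeds. The hierarchy includes registers (the fastest and smallest, private to each thread), on-chip shared memory and L1 cache (shared memory is used to share data between threads in the same block), the L2 cache, and global memory (the GPU's main VRAM: the largest but slowest). CUDA also defines "local memory", which, despite its name, is per-thread storage that lives in the same off-chip memory as global memory and is used when a thread runs out of registers. Efficiently managing data movement between these memory types is crucial to GPU performance.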
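
A common way to exploit this hierarchy in matrix multiplication is tiling: a thread block loads a T×T tile of each input into fast shared memory once, and each loaded element is then reused by T threads instead of being re-read from global memory. The back-of-the-envelope sketch below counts global-memory element reads for an M×K by K×N product under that simplified model; it ignores caches and assumes all dimensions are multiples of the tile size.

```python
def naive_global_reads(m: int, n: int, k: int) -> int:
    """Each of the m*n output elements reads k elements of A and k elements of B."""
    return 2 * m * n * k

def tiled_global_reads(m: int, n: int, k: int, tile: int) -> int:
    """Each (tile x tile) output block loads k/tile pairs of (tile x tile) input tiles."""
    assert m % tile == 0 and n % tile == 0 and k % tile == 0, "dims must be multiples of tile"
    output_tiles = (m // tile) * (n // tile)
    steps_per_tile = k // tile
    return output_tiles * steps_per_tile * 2 * tile * tile

m = n = k = 1024
ratio = naive_global_reads(m, n, k) // tiled_global_reads(m, n, k, tile=32)
print(ratio)  # 32
```

In this model, tiling cuts global-memory traffic by a factor equal to the tile size, which is why shared memory matters so much for matrix-heavy workloads.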

Task Division:

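Work is divided into threads, the smallest units of execution on a GPU. Threads are organized into thread blocks, and thread blocks are grouped into grids. Each block runs on a single SM, so its threads can share memory and synchronize with one another, while different blocks run independently and can be spread across all the SMs on the chip. This hierarchical organization allows for efficient scheduling and management of parallel tasks.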
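
The thread/block/grid hierarchy is easiest to see in how a kernel finds its share of the data. In CUDA C++, each thread typically computes a global index as `blockIdx.x * blockDim.x + threadIdx.x`. The pure-Python sketch below emulates that indexing for an element-wise vector addition by looping over simulated blocks and threads; on a real GPU these iterations would run concurrently. The function name `launch_vector_add` is illustrative.

```python
import math
from typing import List

def launch_vector_add(a: List[float], b: List[float], block_dim: int = 256) -> List[float]:
    n = len(a)
    out = [0.0] * n
    grid_dim = math.ceil(n / block_dim)              # enough blocks to cover all n elements
    for block_idx in range(grid_dim):                # on a GPU: blocks are spread across SMs
        for thread_idx in range(block_dim):          # on a GPU: threads run in parallel
            i = block_idx * block_dim + thread_idx   # same formula as CUDA's global index
            if i < n:                                # guard: the last block may be partially full
                out[i] = a[i] + b[i]
    return out

x = [float(i) for i in range(1000)]
y = [1.0] * 1000
result = launch_vector_add(x, y)
print(math.ceil(1000 / 256), result[:3], result[-1])  # 4 [1.0, 2.0, 3.0] 1000.0
```

The `i < n` bounds check is the standard pattern for when the data size is not a multiple of the block size: the last block simply has some idle threads.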

CUDA and APIs:

NVIDIA's CUDA is a programming platform that allows developers to write code that runs on NVIDIA GPUs. OpenCL offers a vendor-neutral alternative, and graphics APIs such as OpenGL and DirectX can also leverage GPU parallelism for general computation through compute shaders. In the context of machine learning, frameworks like TensorFlow and PyTorch provide high-level abstractions that use GPU acceleration for computations.

Specialized Units:

In addition to the general-purpose processing cores, modern GPUs may include specialized hardware units like Tensor Cores for deep learning operations or texture units for graphics tasks. These specialized units optimize specific calculations and enhance overall performance.

Data Parallelism:

Many computational tasks, including those in machine learning, involve the same operation applied to multiple data points. GPUs excel at this type of data parallelism, where a single instruction can be executed across multiple pieces of data simultaneously.

In essence, GPUs work by breaking down complex tasks into smaller, parallelizable subtasks and then executing those subtasks concurrently across a multitude of cores. This design is exceptionally suited for tasks that require heavy computational lifting, such as training neural networks in machine learning. As a result, GPUs have become a cornerstone of modern computing, accelerating a wide range of applications beyond just graphics rendering. So why use a GPU rather than a CPU?

Why are GPUs better than CPUs for Machine Learning?

Graphics Processing Units (GPUs) have emerged as the go-to hardware for machine learning tasks, and their superiority over Central Processing Units (CPUs) in this domain can be attributed to several key factors:

Parallel Processing Power:

GPUs are designed with a massively parallel architecture, consisting of thousands of smaller cores optimized for parallelism. This architecture enables GPUs to handle a large number of calculations simultaneously, making them exceptionally well-suited for machine learning algorithms that involve repetitive mathematical operations on large datasets.

Acceleration of Matrix Operations:

Many machine learning algorithms, especially in deep learning, involve operations on matrices. GPUs excel at matrix multiplications and convolutions, which are fundamental to tasks like training neural networks. These operations can be parallelized across GPU cores, resulting in significant speedup compared to CPUs.
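
To put numbers on this, multiplying an M×K matrix by a K×N matrix takes about 2·M·N·K floating-point operations (one multiply and one add per term). The sketch below turns that count into an idealized runtime for a given sustained throughput. The throughput figures are hypothetical placeholders, not specifications of any real GPU or CPU, and real kernels rarely sustain their peak rate.

```python
def matmul_flops(m: int, n: int, k: int) -> int:
    """Operations in an (m x k) @ (k x n) product, counting multiply and add separately."""
    return 2 * m * n * k

def ideal_seconds(flops: int, sustained_flops_per_s: float) -> float:
    """Lower-bound runtime if the device sustained the given throughput."""
    return flops / sustained_flops_per_s

work = matmul_flops(4096, 4096, 4096)            # about 1.37e11 operations
# Hypothetical sustained rates, chosen only to illustrate the arithmetic:
for label, rate in [("device A, 1 TFLOPS", 1e12), ("device B, 50 TFLOPS", 50e12)]:
    print(f"{label}: {ideal_seconds(work, rate) * 1e3:.1f} ms")
# prints 137.4 ms for device A and 2.7 ms for device B
```

A neural network's training step runs many such products per layer, per batch, which is why raw matrix throughput dominates training time.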

Speed and Throughput:

Due to their parallel nature, GPUs can perform tasks that involve massive amounts of computation much faster than CPUs. Training a large neural network that would take weeks on a CPU can often be completed in days or hours on a GPU, although the exact speedup depends on the model, the software, and how much of the workload can run in parallel. This speedup allows researchers and practitioners to experiment more rapidly and iterate through different models and hyperparameters.
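
How much of that speedup you actually see depends on how much of the workload parallelizes. Amdahl's law gives a simple upper bound: if a fraction p of the runtime is accelerated by a factor s, the overall speedup is 1 / ((1 - p) + p / s). The sketch below implements that formula; the 95% parallel fraction and 100x factor in the example are illustrative values, not measurements.

```python
def amdahl_speedup(parallel_fraction: float, accel_factor: float) -> float:
    """Overall speedup when `parallel_fraction` of the runtime is sped up by `accel_factor`."""
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be in [0, 1]")
    serial = 1.0 - parallel_fraction
    return 1.0 / (serial + parallel_fraction / accel_factor)

# Even with a 100x faster parallel section, a 5% serial part (data loading,
# preprocessing, Python overhead) caps the overall gain below 20x.
print(round(amdahl_speedup(0.95, 100.0), 1))  # 16.8
```

This is one reason real-world GPU speedups vary so widely: a slow input pipeline can leave an expensive GPU waiting.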

Deep Learning and Neural Networks:

Deep learning models, particularly neural networks, have numerous layers and connections, requiring immense computational power for training. GPUs, with their parallelism and specialized hardware like Tensor Cores, can significantly speed up the training process for these models.

Specialized Hardware:

Modern GPUs often come equipped with specialized hardware units tailored for specific tasks, such as Tensor Cores for deep learning operations. These hardware components are optimized for tasks commonly encountered in machine learning workloads, providing a further performance boost.

GPU-Accelerated Libraries and Frameworks:

Popular machine learning frameworks such as TensorFlow and PyTorch are built to leverage GPU acceleration, relying on NVIDIA libraries such as cuDNN and cuBLAS (which run on top of CUDA) for their heavy lifting. These frameworks provide high-level abstractions that allow developers to harness the power of GPUs without delving into the low-level details of parallel programming.
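
In PyTorch, for example, moving work onto a GPU is mostly a matter of choosing a device. The sketch below uses `torch.cuda.is_available()` and the `device=` argument, both part of PyTorch's public API; it falls back to the CPU when no CUDA device is present, and also when PyTorch itself is not installed, so the snippet runs anywhere. The helper name `pick_device` is our own.

```python
try:
    import torch
except ImportError:          # allow the sketch to run without PyTorch installed
    torch = None

def pick_device() -> str:
    """Return 'cuda' if PyTorch can see a GPU, otherwise 'cpu'."""
    if torch is not None and torch.cuda.is_available():
        return "cuda"
    return "cpu"

device = pick_device()
print(f"Using device: {device}")

if torch is not None:
    # Tensors (and models, via model.to(device)) must live on the same device.
    x = torch.randn(1024, 1024, device=device)
    y = x @ x                # runs as a GPU matmul kernel when device == "cuda"
    print(y.shape)           # torch.Size([1024, 1024])
```

The same code runs unchanged on a laptop CPU or a GPU server. On ROCm builds of PyTorch, supported AMD GPUs are also exposed through the `torch.cuda` interface.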

Cost-Effectiveness:

For highly parallel workloads, GPUs typically deliver much more computation per dollar than CPUs. While dedicated hardware like ASICs (Application-Specific Integrated Circuits), such as Google's TPUs, can outperform GPUs for specific tasks, GPUs offer a versatile solution that can handle a wide range of machine learning workloads.

Availability and Accessibility:

GPUs are widely available, both in terms of consumer-grade GPUs and data center GPUs. Cloud service providers also offer GPU instances, allowing individuals and organizations to access powerful hardware without the need for significant upfront investments.

Flexibility and Research:

GPUs offer flexibility for researchers to experiment with various machine-learning algorithms and models. Their general-purpose nature allows them to adapt to different tasks, making them a valuable tool for both experimentation and production.

In summary, GPUs outshine CPUs in machine learning due to their parallel processing capabilities, efficiency in handling matrix operations, specialized hardware for deep learning, speed, and the support of GPU-accelerated libraries and frameworks. This combination of factors empowers machine learning practitioners to tackle more complex and data-intensive problems, leading to faster training times and breakthroughs in the field. Let's see how you can choose the best GPU for your needs.

How to Choose the Best GPU for Machine Learning

Selecting the best GPU for machine learning involves careful consideration of various factors to ensure that the chosen GPU aligns with your specific needs, budget, and the scale of your machine learning tasks. Here's a step-by-step guide to help you make an informed decision:

Task Requirements:

Understand the nature of your machine learning tasks. Are you working on deep learning with large neural networks? Do you require substantial memory for handling large datasets? Are you involved in real-time inference or batch processing? Defining your specific task requirements will guide your GPU selection.

Performance:

Evaluate the performance metrics that matter most to your tasks, such as FLOPS (floating-point operations per second) at the precision you plan to use (FP32, FP16, BF16, and so on, since vendors quote different peaks for each), memory bandwidth, and memory capacity. Benchmarks and comparisons can provide insights into how different GPUs perform in real-world machine learning workloads.
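
One way to combine peak compute and memory bandwidth is the roofline model: a kernel's attainable throughput is capped by the lesser of the GPU's peak FLOPS and its memory bandwidth multiplied by the kernel's arithmetic intensity (FLOPs performed per byte moved). The sketch below uses hypothetical GPU figures and an illustrative intensity for the matmul case, purely to show the arithmetic.

```python
def attainable_flops(peak_flops: float, bandwidth_bytes_s: float,
                     intensity_flops_per_byte: float) -> float:
    """Roofline model: performance is bounded by compute or by memory traffic."""
    return min(peak_flops, bandwidth_bytes_s * intensity_flops_per_byte)

PEAK = 30e12        # hypothetical 30 TFLOPS
BANDWIDTH = 900e9   # hypothetical 900 GB/s

ridge = PEAK / BANDWIDTH   # intensity at which a kernel stops being memory-bound
print(f"ridge point: {ridge:.1f} FLOPs/byte")

# FP32 element-wise add: 1 FLOP per 12 bytes (two 4-byte reads, one 4-byte write).
for name, intensity in [("element-wise add (FP32)", 1 / 12), ("large matmul (illustrative)", 100.0)]:
    tflops = attainable_flops(PEAK, BANDWIDTH, intensity) / 1e12
    print(f"{name}: {tflops:.3f} TFLOPS attainable")
```

In this model the element-wise add uses only a tiny fraction of peak compute no matter how fast the cores are, so for memory-bound workloads bandwidth can matter more than the headline FLOPS figure.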

Memory Capacity and Type:

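Machine learning tasks often involve large models and large batches of data. Consider the GPU's memory capacity (VRAM) and type (e.g., GDDR6, GDDR6X, or HBM2) to ensure that your chosen GPU can hold the model's parameters, its intermediate activations, and a batch of data at the same time; the full dataset usually stays in system memory or on disk and is streamed to the GPU. Deep learning models, especially those with numerous parameters, benefit from GPUs with ample memory.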
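
A rough way to check whether a model fits is to count bytes per parameter. The sketch below assumes plain FP32 training with the Adam optimizer: 4 bytes for each weight, 4 for its gradient, and 8 for Adam's two moment estimates, or 16 bytes per parameter. It deliberately ignores activations, batch size, and framework overhead, which often add substantially more, so treat the result as a floor rather than a full estimate. The 1-billion-parameter model is hypothetical.

```python
def training_memory_gb(num_params: int, bytes_weights: int = 4, bytes_grads: int = 4,
                       bytes_optimizer: int = 8) -> float:
    """Lower bound on VRAM (GB, 1e9 bytes) for weights, gradients, and optimizer state.

    Defaults assume FP32 weights and gradients plus Adam's two FP32 moment buffers.
    Activations and temporary buffers are NOT included.
    """
    per_param = bytes_weights + bytes_grads + bytes_optimizer
    return num_params * per_param / 1e9

def inference_memory_gb(num_params: int, bytes_per_param: int = 2) -> float:
    """Weights only, e.g. 2 bytes/param for FP16 or BF16 inference."""
    return num_params * bytes_per_param / 1e9

params = 1_000_000_000  # a hypothetical 1-billion-parameter model
print(training_memory_gb(params))   # 16.0
print(inference_memory_gb(params))  # 2.0
```

Under these assumptions, training such a model needs at least 16 GB before a single activation is stored, while serving it needs far less, which is why training and inference GPUs are often sized very differently.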

GPU Architecture:

Stay up-to-date with the latest GPU architectures, as newer architectures often bring improved performance and efficiency. Familiarize yourself with the features and enhancements of each architecture and assess how they align with your machine-learning tasks.

Framework Compatibility:

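Ensure that the GPU you choose is supported by the machine learning frameworks you intend to use, such as TensorFlow, PyTorch, or Keras (which runs on top of a backend such as TensorFlow). NVIDIA GPUs have the broadest support through CUDA; AMD GPUs are supported through the ROCm platform, but on a narrower range of models and operating systems, so check the framework's installation documentation for your specific card. GPU-accelerated builds of these frameworks can significantly speed up training.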

Price-to-Performance Ratio:

Consider the budget you have available and look for GPUs that offer a favorable price-to-performance ratio. While the most powerful GPUs can be expensive, there are often mid-tier options that strike a balance between cost and performance.
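
Price-to-performance is simple arithmetic once you pick a metric that matters for your workload, such as throughput on a benchmark you care about. The sketch below ranks candidates by cost per unit of performance. The card names, prices, and scores are entirely hypothetical placeholders to be replaced with current prices and your own benchmark results.

```python
from typing import Dict, List, Tuple

def rank_by_value(cards: Dict[str, Tuple[float, float]]) -> List[Tuple[str, float]]:
    """cards maps name -> (price, performance score); returns (name, price per point), best first."""
    ratios = [(name, price / score) for name, (price, score) in cards.items()]
    return sorted(ratios, key=lambda item: item[1])

# Hypothetical numbers: replace with real prices and benchmark scores.
candidates = {
    "card_a": (1500.0, 100.0),
    "card_b": (700.0, 60.0),
    "card_c": (500.0, 35.0),
}
for name, cost_per_point in rank_by_value(candidates):
    print(f"{name}: {cost_per_point:.2f} per benchmark point")
```

With these made-up figures the mid-priced card_b comes out ahead, in line with the point above that mid-tier options often strike the best balance; with real numbers the ranking may differ.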

CUDA Cores and Tensor Cores:

NVIDIA GPUs come with varying numbers of CUDA cores (AMD's comparable units are called stream processors), which handle general-purpose parallel computing. For deep learning, GPUs with Tensor Cores are particularly valuable, as they accelerate the matrix multiply-accumulate operations at the heart of neural networks, especially at reduced precision such as FP16.
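
One practical detail: NVIDIA's deep learning performance guidance recommends matrix dimensions (layer widths, batch sizes, vocabulary sizes) that are multiples of 8 for FP16 so that Tensor Cores can be used efficiently; the exact recommendations vary by data type and library version. A tiny helper for choosing padded sizes, as a sketch (the 50,257-token vocabulary is just an example value):

```python
def pad_to_multiple(n: int, multiple: int = 8) -> int:
    """Round n up to the nearest multiple (e.g. 8 for FP16 Tensor Core-friendly shapes)."""
    if n <= 0 or multiple <= 0:
        raise ValueError("n and multiple must be positive")
    return ((n + multiple - 1) // multiple) * multiple

# e.g. an example vocabulary of 50,257 tokens padded for an output layer
print(pad_to_multiple(50257))      # 50264
print(pad_to_multiple(4096))       # 4096 (already aligned)
```

Padding wastes a handful of rows or columns but can keep a layer on the fast Tensor Core path.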

GPU Manufacturer and Model:

Both NVIDIA and AMD offer GPUs suitable for machine learning. Research the specifications and reviews of various models within your budget range. Check for user experiences, community discussions, and benchmarks to gauge real-world performance.

Future Scalability:

Consider whether you plan to scale your machine learning tasks in the future. If scalability is important, look into GPUs that can be easily integrated into multi-GPU setups, either within your workstation or in cloud-based instances.
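
The most common multi-GPU pattern is data parallelism: each GPU holds a copy of the model, processes its own slice of the batch, and the per-GPU gradients are averaged (an "all-reduce") before every update. The pure-Python sketch below simulates this for a one-parameter linear model with a mean-squared-error loss and shows that averaging equal-sized shard gradients reproduces the full-batch gradient. It illustrates the math only; real setups use framework tools such as PyTorch's `DistributedDataParallel`.

```python
from typing import List, Tuple

Sample = Tuple[float, float]  # (x, y)

def mse_grad(w: float, batch: List[Sample]) -> float:
    """d/dw of mean((w*x - y)^2) over the batch."""
    return sum(2.0 * (w * x - y) * x for x, y in batch) / len(batch)

def data_parallel_grad(w: float, batch: List[Sample], num_gpus: int) -> float:
    """Split the batch into equal shards, compute one gradient per simulated GPU, then average."""
    assert len(batch) % num_gpus == 0, "sketch assumes equal-sized shards"
    shard = len(batch) // num_gpus
    local_grads = [mse_grad(w, batch[i * shard:(i + 1) * shard]) for i in range(num_gpus)]
    return sum(local_grads) / num_gpus   # the "all-reduce" step: average across workers

batch = [(float(x), 3.0 * x) for x in range(1, 9)]   # data generated by y = 3x
w = 1.0
print(mse_grad(w, batch), data_parallel_grad(w, batch, num_gpus=4))  # -102.0 -102.0
```

Because the result matches single-GPU training, adding GPUs mainly lets you process a larger global batch per step; the cost is the communication needed to average gradients, which is why interconnect bandwidth matters in multi-GPU setups.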

Cooling and Power Requirements:

Power consumption and cooling solutions can impact the practicality of using certain GPUs. Make sure that your system's power supply and cooling capacity can handle the GPU you intend to use.

Cloud Services:

If purchasing a physical GPU isn't feasible or necessary, you can leverage cloud services that offer GPU instances. Providers like Amazon Web Services (AWS), Google Cloud, and Microsoft Azure offer scalable GPU resources for machine learning tasks.
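
Whether renting or buying makes more sense often comes down to utilization. A crude break-even estimate divides the up-front cost of a local machine by the hourly saving over renting, optionally subtracting a local running cost such as electricity; it ignores depreciation, resale value, and speed differences between the cloud and local GPUs. All figures below are hypothetical.

```python
def break_even_hours(purchase_cost: float, cloud_price_per_hour: float,
                     local_running_cost_per_hour: float = 0.0) -> float:
    """Hours of use after which buying becomes cheaper than renting a comparable instance."""
    saving_per_hour = cloud_price_per_hour - local_running_cost_per_hour
    if saving_per_hour <= 0:
        return float("inf")   # owning never catches up with renting
    return purchase_cost / saving_per_hour

# Hypothetical: a 3,000-dollar workstation vs. a 1.50-dollar/hour cloud GPU instance,
# with 0.10 dollars/hour of electricity for the local machine.
hours = break_even_hours(3000.0, 1.50, 0.10)
print(f"{hours:.0f} hours, or about {hours / 24:.0f} days of continuous use")
```

If you expect to use a GPU only occasionally, the break-even point may never be reached, which favors the cloud; sustained daily use tends to favor buying.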

Reviews and Recommendations:

Read reviews, user experiences, and recommendations from other machine learning practitioners. Their insights can provide valuable information about real-world performance and issues.

In summary, the best GPU for machine learning depends on your specific requirements, budget, and intended tasks. Carefully assess the GPU's performance, memory capacity, architecture, compatibility with frameworks, and other factors to make a well-informed decision that will empower you to efficiently tackle your machine-learning projects.

15 Best GPUs for Deep Learning

Deep learning, a subset of machine learning, demands substantial computational power for training complex neural networks. Here are 15 GPUs that have proven to be among the best choices for deep learning tasks, considering factors like performance, memory capacity, and price-to-performance ratio. Availability, pricing, and specifications change frequently, so verify the latest information before making a purchase:

With its impressive 24GB of GDDR6X memory and high CUDA core count, the RTX 3090 is a powerhouse for large-scale deep learning tasks, offering top-tier performance for training complex models.

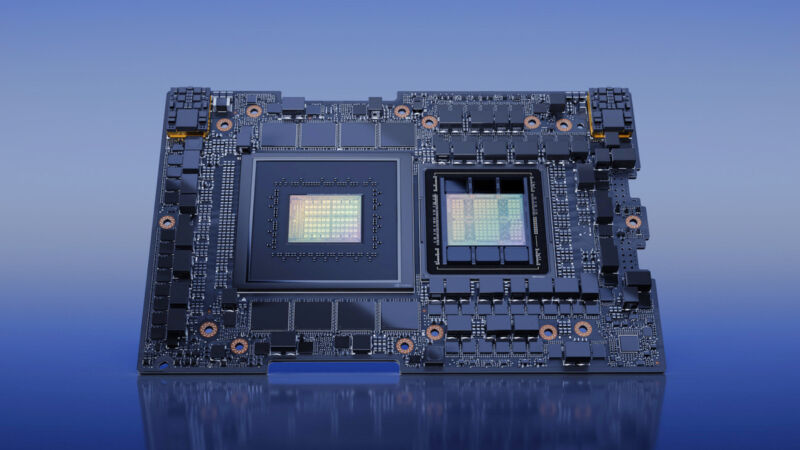

Built on the Ampere architecture, the A100 is NVIDIA's flagship data center GPU. Its 40GB of HBM2 memory (an 80GB HBM2e version is also available) and third-generation Tensor Cores make it a leading choice for AI and deep learning workloads, though it's more suited for data center environments.

A strong performer with 10GB of GDDR6X memory and a substantial number of CUDA cores, the RTX 3080 is an excellent choice for high-performance deep learning tasks.

Offering good value for its price, the RTX 3070 has 8GB of GDDR6 memory and solid performance for medium-scale deep learning tasks.

Sitting between the gaming and professional lines, the Titan RTX provides 24GB of GDDR6 memory and high computational power, suitable for a range of deep learning applications.

A professional-grade GPU with 48GB of GDDR6 memory, the Quadro RTX 8000 excels in handling large datasets and complex neural network architectures.

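A compact module designed for edge applications, the Jetson Xavier NX pairs a Volta-architecture GPU with Tensor Cores and an ARM CPU in a small, low-power form factor, making it better suited to running trained models on devices such as robots and cameras than to training large models.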

Although AMD GPUs are less common for deep learning, the Radeon RX 6900 XT offers 16GB of GDDR6 memory and respectable performance for certain workloads.

A previous-generation data center GPU, the Tesla V100 is still widely used for its 16GB or 32GB of HBM2 memory and exceptional computing performance.

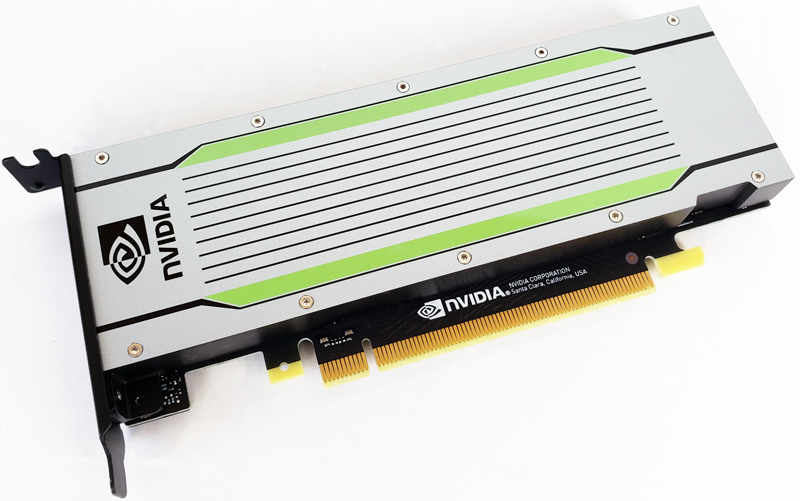

Designed for inference and smaller-scale training tasks, the Tesla T4 offers 16GB of GDDR6 memory and is optimized for efficiency.

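Built on the Ampere architecture, the RTX A6000 (NVIDIA dropped the Quadro name with this generation, so it is sometimes still called the Quadro A6000) features 48GB of GDDR6 memory and a large number of CUDA cores, making it a strong choice for AI and deep learning.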

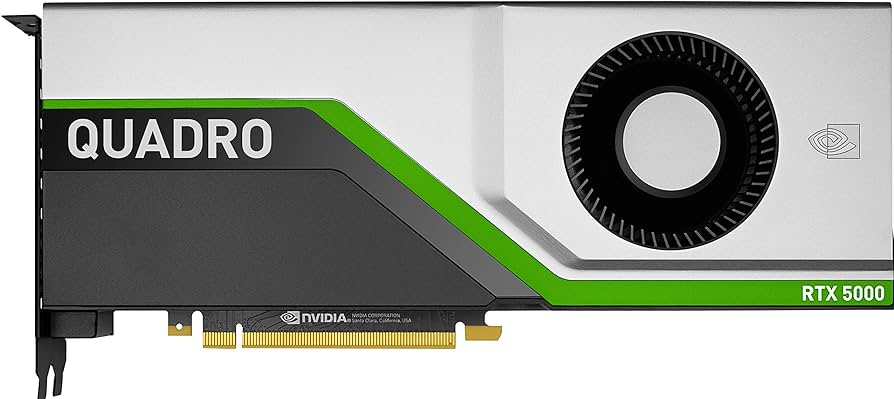

With 16GB of GDDR6 memory, the Quadro RTX 5000 provides a balance between performance and cost for deep learning workloads.

With 24GB of GDDR6 memory, the Quadro RTX 6000 is well-suited for various professional tasks, including deep learning.

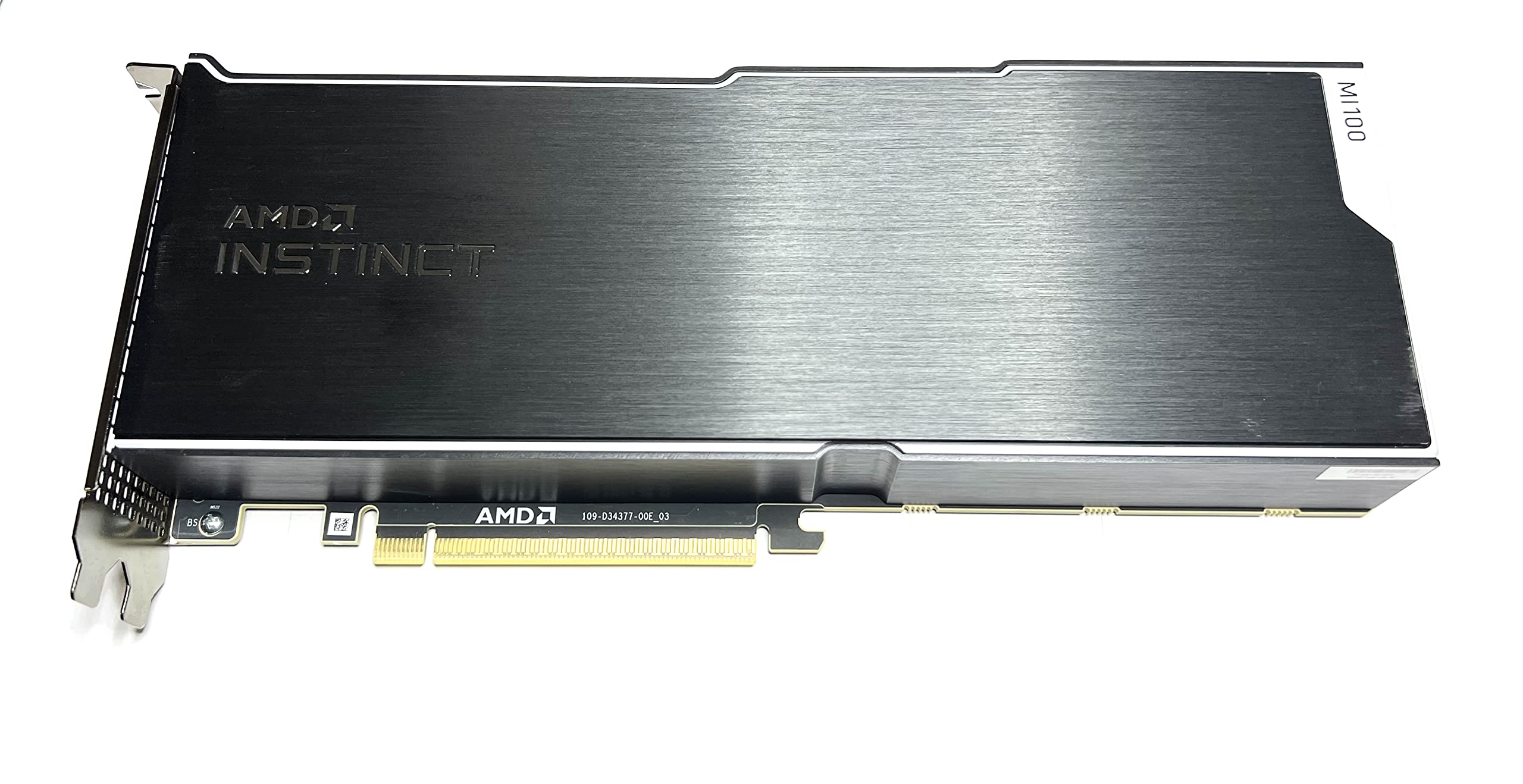

Built on AMD's CDNA architecture, the AMD Instinct MI100 provides 32GB of HBM2 memory and is AMD's data center alternative for deep learning tasks.

The Titan V features 12GB of HBM2 memory and is known for its high computational power, making it suitable for both research and production work.

When choosing a GPU for deep learning, consider the size of your datasets, the complexity of your models, your budget, and your long-term goals. It's also important to stay updated with the latest GPU releases and reviews to make an informed decision based on the most current information available.
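
To apply the memory criterion, it can help to put the capacities quoted above into a small table and filter by what your model needs. The sketch below encodes the memory figures listed in this article, using the smaller variant where a card comes in two sizes and omitting the Jetson Xavier NX, whose memory is not stated above. The helper name `gpus_with_at_least` is illustrative; verify current specifications before relying on the data.

```python
from typing import List, Tuple

# (name, VRAM in GB, memory type) as listed in this article; smaller variant where two exist.
GPUS: List[Tuple[str, int, str]] = [
    ("GeForce RTX 3090", 24, "GDDR6X"),
    ("A100", 40, "HBM2"),
    ("GeForce RTX 3080", 10, "GDDR6X"),
    ("GeForce RTX 3070", 8, "GDDR6"),
    ("Titan RTX", 24, "GDDR6"),
    ("Quadro RTX 8000", 48, "GDDR6"),
    ("Radeon RX 6900 XT", 16, "GDDR6"),
    ("Tesla V100", 16, "HBM2"),
    ("Tesla T4", 16, "GDDR6"),
    ("RTX A6000", 48, "GDDR6"),
    ("Quadro RTX 5000", 16, "GDDR6"),
    ("Quadro RTX 6000", 24, "GDDR6"),
    ("Instinct MI100", 32, "HBM2"),
    ("Titan V", 12, "HBM2"),
]

def gpus_with_at_least(min_vram_gb: float) -> List[str]:
    """Names of listed GPUs whose memory meets the requirement, largest first."""
    fits = [g for g in GPUS if g[1] >= min_vram_gb]
    return [name for name, _, _ in sorted(fits, key=lambda g: g[1], reverse=True)]

print(gpus_with_at_least(32))  # ['Quadro RTX 8000', 'RTX A6000', 'A100', 'Instinct MI100']
```

Memory is only a first filter; among the cards that fit, compare throughput, framework support, and price.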

Conclusion: Picking Your Powerhouse

In the ever-evolving landscape of machine learning, the choice of GPU can significantly impact your research, development, and deployment processes. NVIDIA's GeForce RTX 30 Series and A100 GPUs are leaders in their respective classes, while AMD's Instinct MI100 offers a compelling alternative for data center workloads.

Before making a decision, consider your budget, the scale of your projects, and the specific requirements of your machine learning tasks. Regardless of your choice, these GPUs are sure to fuel your journey toward groundbreaking discoveries in the realm of artificial intelligence.
